2020-06-12

Authors: Wei Ping, Kainan Peng, Kexin Zhao, Zhao Song

Audio synthesis has a variety of applications, including text-to-speech (TTS), music generation, virtual assistants, and digital content creation. In recent years, deep neural networks have achieved notable success in synthesizing raw audio for high-fidelity speech and music generation. Among the most successful examples are autoregressive models (e.g., WaveNet). However, they generate raw waveforms of high temporal resolution (e.g., 24 kHz) sequentially at synthesis, which is prohibitively slow for real-time applications.

Researchers from various organizations have spent considerable effort developing parallel generative models for raw audio. Parallel WaveNet and ClariNet can generate high-fidelity audio in parallel, but they require distillation from a pretrained autoregressive model and a set of auxiliary losses for training, which complicates the training pipeline and increases the cost of development. GAN-based models can be trained from scratch, but they provide lower audio fidelity than WaveNet. WaveGlow can be trained directly with maximum likelihood, but it needs a huge number of parameters (e.g., 88M) to reach audio fidelity comparable to WaveNet.

Today, we’re excited to announce WaveFlow (paper, audio samples), the latest milestone of audio synthesis research at Baidu. It features 1) high-fidelity and ultra-fast audio synthesis, 2) simple likelihood-based training, and 3) a small memory footprint, a combination that previous work could not achieve simultaneously. Our small-footprint model (5.91M parameters) can synthesize high-fidelity speech (MOS: 4.32) more than 40x faster than real-time on an Nvidia V100 GPU. WaveFlow also provides a unified view of likelihood-based models for raw audio, which includes both WaveNet and WaveGlow as special cases and allows us to explicitly trade inference parallelism for model capacity.
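To make "faster than real-time" concrete, here is a small arithmetic sketch. The function names are hypothetical, and the 24 kHz sample rate in the usage note is only the example rate mentioned earlier in this post, not necessarily the rate used for the reported benchmark.

```python
def realtime_speedup(num_samples, sample_rate_hz, synthesis_seconds):
    """How many seconds of audio are produced per second of compute."""
    audio_seconds = num_samples / sample_rate_hz
    return audio_seconds / synthesis_seconds


def max_synthesis_time(audio_seconds, speedup):
    """Compute budget (in seconds) for a clip at a given real-time speedup."""
    return audio_seconds / speedup
```

Under these assumptions, a 10-second clip at 24 kHz has 240,000 samples, and `max_synthesis_time(10.0, 40.0)` gives a budget of 0.25 seconds for a model running 40x faster than real-time.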

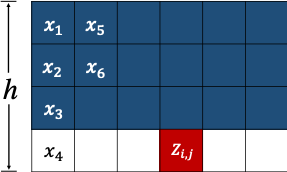

Figure 1. WaveFlow defines an invertible mapping between audio x and latent z in a 2-D matrix form.

WaveFlow is a flow-based generative model, which defines an invertible mapping between audio x and latent z and enables closed-form computation of the likelihood through the change-of-variables formula. In particular, it handles the long-range structure of the waveform with a dilated 2-D convolutional architecture by reshaping the 1-D waveform samples into a 2-D matrix (see Figure 1 for an illustration). In contrast to WaveGlow, it processes local adjacent samples with a powerful autoregressive function without losing temporal order information, which leads to 15x fewer parameters and far fewer artifacts in synthesized audio (see Figure 2 (a) and (b) for an illustration).
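The idea can be illustrated with a toy NumPy sketch. It is not the WaveFlow implementation: all function names are hypothetical, `shift_and_scale` is a hand-written stand-in for the dilated 2-D convolutional network, and the column-major layout (adjacent samples placed in the same column) is an assumption about how the reshaping is done. The sketch only shows how an affine autoregressive map over the rows of the matrix stays invertible and yields a closed-form log-likelihood.

```python
import numpy as np


def squeeze(x, h):
    """Reshape a 1-D waveform of length n into an (h, n // h) matrix.

    Assumed layout: column j holds the adjacent samples x[j*h : (j+1)*h].
    """
    n = len(x)
    assert n % h == 0, "waveform length must be divisible by the height h"
    return x.reshape(n // h, h).T


def unsqueeze(X):
    """Inverse of squeeze: restore the original 1-D sample order."""
    return X.T.reshape(-1)


def shift_and_scale(prev_rows):
    """Toy stand-in for the network: (mu, log_sigma) from the rows above."""
    w = prev_rows.shape[1]
    if prev_rows.shape[0] == 0:
        return np.zeros(w), np.zeros(w)  # first row has no context
    ctx = prev_rows.mean(axis=0)
    return -0.5 * ctx, 0.1 * np.tanh(ctx)


def forward(X):
    """Map audio X to latent Z; each row depends only on the rows above it."""
    Z = np.empty_like(X)
    log_det = 0.0
    for i in range(X.shape[0]):
        mu, log_sigma = shift_and_scale(X[:i])
        Z[i] = X[i] * np.exp(log_sigma) + mu
        log_det += log_sigma.sum()  # log |dz/dx| of the affine map
    return Z, log_det


def inverse(Z):
    """Map latent Z back to audio X, one row at a time (h sequential steps)."""
    X = np.empty_like(Z)
    for i in range(Z.shape[0]):
        mu, log_sigma = shift_and_scale(X[:i])
        X[i] = (Z[i] - mu) * np.exp(-log_sigma)
    return X


def log_likelihood(x, h):
    """Change of variables: log p(x) = log N(z; 0, I) + log |det dz/dx|."""
    Z, log_det = forward(squeeze(x, h))
    log_prior = -0.5 * np.sum(Z ** 2) - 0.5 * Z.size * np.log(2.0 * np.pi)
    return log_prior + log_det
```

In this sketch, `forward` could process all rows at once because every row of X is already known, while `inverse` has to visit the h rows in order. A smaller h therefore means fewer sequential steps at synthesis, which is one way to picture the trade between inference parallelism and autoregressive modeling.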

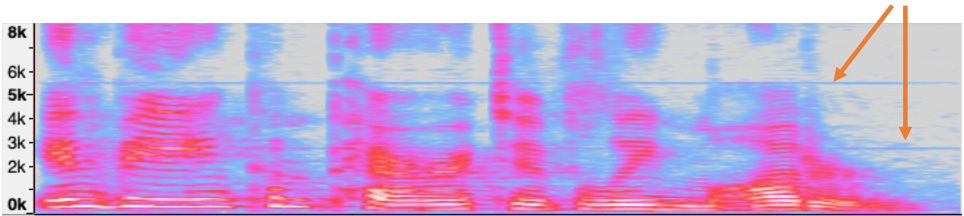

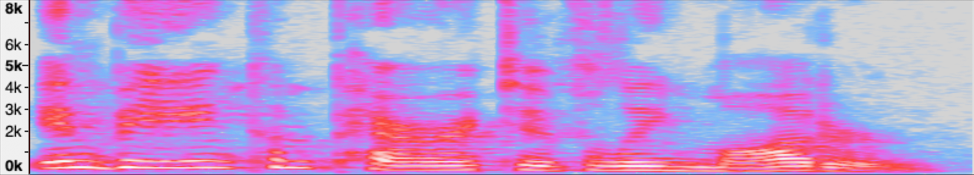

(a)

(b)

Figure 2. (a) The spectrogram of audio synthesized by WaveGlow shows the signature constant-frequency noise (horizontal lines on the spectrogram), which has to be removed with a heuristic denoising function. (b) WaveFlow synthesizes much cleaner audio.

Our paper will be presented at ICML 2020.

For more details of WaveFlow, please check out our paper: https://arxiv.org/abs/1912.01219

Audio samples are available at: https://waveflow-demo.github.io/

The implementation is available in Parakeet, a text-to-speech toolkit built on PaddlePaddle: https://github.com/PaddlePaddle/Parakeet/tree/develop/examples/waveflow