2018-07-20

By Wei Ping, Kainan Peng, Jitong Chen

Speech synthesis, also called text-to-speech (TTS), has a variety of applications, such as smart home devices and intelligent personal assistants.

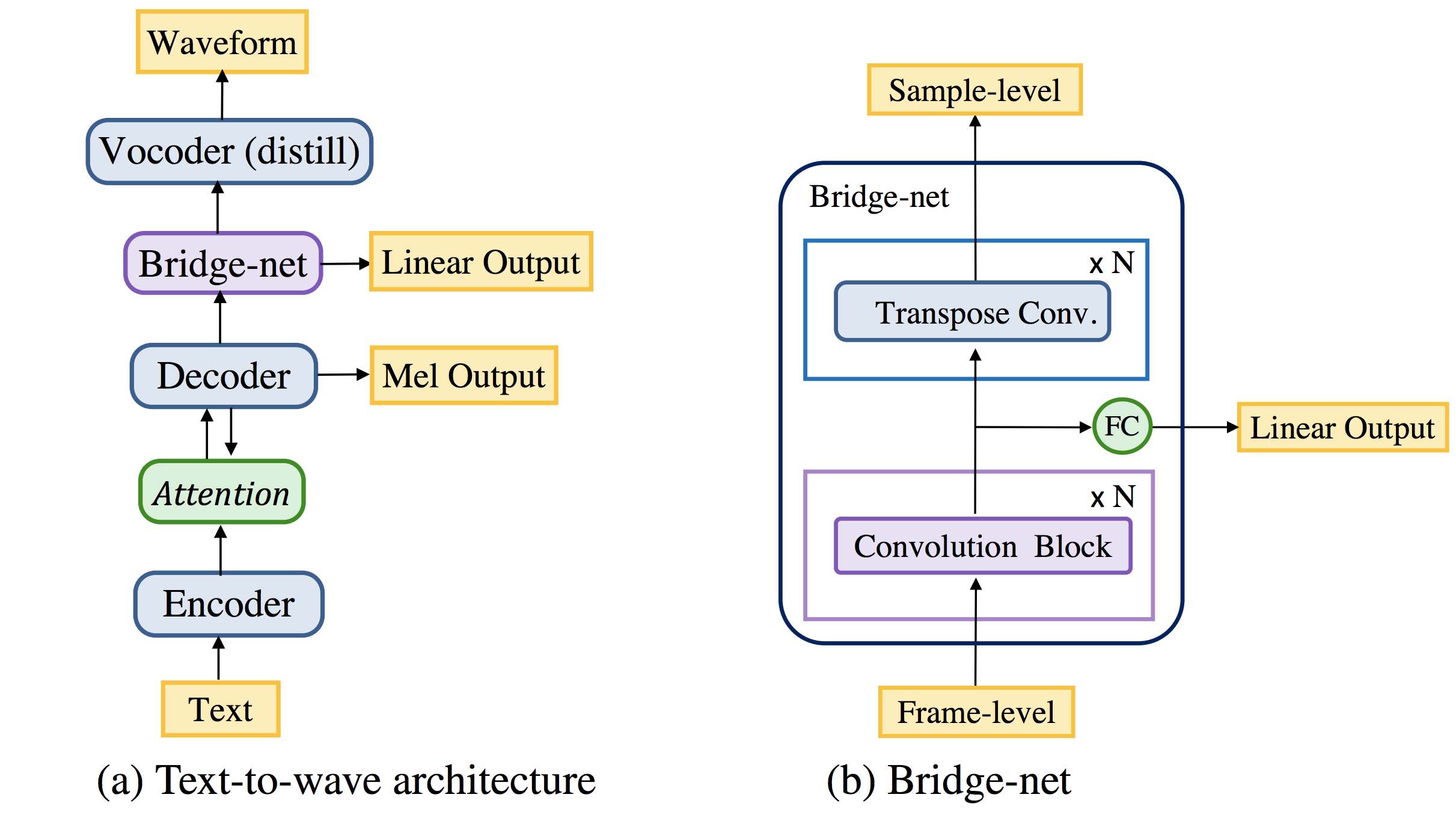

Today, we're excited to announce ClariNet, the latest milestone of TTS research at Baidu. Previous neural network-based TTS systems employ separately optimized text-to-spectrogram and waveform synthesis models, which may result in suboptimal performance. In contrast, ClariNet, the first fully end-to-end TTS model, directly converts text to a speech waveform in a single neural network. Its fully convolutional architecture enables fast training from scratch. ClariNet significantly outperforms separately trained pipelines in terms of voice naturalness.

The architecture of ClariNet is illustrated in Figure 1. It uses an encoder-decoder module to learn the alignment between text and mel-spectrogram. The hidden representation of the decoder is fed to a bridge-net for temporal processing and upsampling. The upsampled hidden representation is then used as a conditioner for the neural waveform synthesizer.

Figure 1: The architecture of ClariNet.
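To make the upsampling step concrete, here is a minimal sketch, assuming the decoder emits one hidden vector per spectrogram frame while the waveform synthesizer needs one conditioning vector per audio sample. The function name `upsample_by_repetition`, the hop size of 4, and the use of plain repetition are illustrative assumptions. The bridge-net in ClariNet is a learned module, which this sketch does not attempt to reproduce.

```python
def upsample_by_repetition(frames, hop_size):
    """Repeat each frame-level vector hop_size times to reach sample rate.

    Illustrative stand-in only: a learned upsampler would replace this.
    """
    if hop_size < 1:
        raise ValueError("hop_size must be a positive integer")
    upsampled = []
    for frame in frames:
        # Every audio sample inside a frame shares that frame's vector.
        upsampled.extend([list(frame) for _ in range(hop_size)])
    return upsampled


# Three hypothetical decoder frames, each a 2-dimensional hidden vector.
hidden_frames = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
conditioner = upsample_by_repetition(hidden_frames, hop_size=4)
```

With these toy inputs, `conditioner` holds one vector per audio sample (3 frames times 4 samples per frame), which is the shape a sample-level synthesizer would consume.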

Furthermore, ClariNet proposes a novel parallel wave generation method based on the Gaussian inverse autoregressive flow (IAF). It generates all samples of an audio waveform in parallel, which dramatically speeds up waveform synthesis (e.g., an order of magnitude faster than real time) compared to state-of-the-art autoregressive methods. To train the parallel waveform synthesizer, we distill a non-autoregressive student-net (Gaussian IAF) from an autoregressive teacher-net. In contrast to DeepMind's parallel WaveNet, our method simplifies and stabilizes the training procedure by providing a closed-form computation of a regularized KL divergence during knowledge distillation.
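The two ideas in this paragraph can be sketched in a few lines of plain Python. The first function shows why a Gaussian IAF can generate in parallel: each output is `x_t = z_t * sigma_t + mu_t`, where `mu_t` and `sigma_t` depend only on earlier noise values, all of which are available up front. The second shows the standard closed-form KL divergence between two univariate Gaussians. Everything else is an assumption made for illustration: `toy_shift_scale` is a hypothetical stand-in for the student network, the teacher parameters are made up, and the log-scale penalty with weight `lam` is one plausible form of regularizer, since this post does not spell out the exact term.

```python
import math


def iaf_transform(noise, shift_scale):
    """Map white noise z to samples x with x_t = z_t * sigma_t + mu_t."""
    # mu_t and sigma_t depend only on z_<t, which is known in advance, so no
    # step waits on a previously generated sample.
    params = [shift_scale(noise[:t]) for t in range(len(noise))]
    samples = [z * sigma + mu for z, (mu, sigma) in zip(noise, params)]
    return samples, params


def toy_shift_scale(previous_noise):
    """Hypothetical stand-in for the student network (not ClariNet's)."""
    context = previous_noise[-1] if previous_noise else 0.0
    mu = 0.5 * context
    sigma = math.exp(-0.1 * abs(context))  # exp keeps the scale positive
    return mu, sigma


def gaussian_kl(mu_q, sigma_q, mu_p, sigma_p):
    """Closed-form KL(q || p) for q = N(mu_q, sigma_q^2), p = N(mu_p, sigma_p^2)."""
    return (math.log(sigma_p / sigma_q)
            + (sigma_q ** 2 + (mu_q - mu_p) ** 2) / (2.0 * sigma_p ** 2)
            - 0.5)


def regularized_kl(mu_q, sigma_q, mu_p, sigma_p, lam=4.0):
    """KL plus a log-scale penalty; the penalty form and lam are assumptions."""
    log_scale_gap = math.log(sigma_p) - math.log(sigma_q)
    return lam * log_scale_gap ** 2 + gaussian_kl(mu_q, sigma_q, mu_p, sigma_p)


noise = [1.0, -2.0, 0.5]
samples, student_params = iaf_transform(noise, toy_shift_scale)

# Hypothetical per-step teacher outputs (mean, standard deviation).
teacher_params = [(0.0, 1.0)] * len(noise)
loss = sum(
    regularized_kl(mu_q, sigma_q, mu_p, sigma_p)
    for (mu_q, sigma_q), (mu_p, sigma_p) in zip(student_params, teacher_params)
) / len(noise)
```

Because both distributions are Gaussian at every time step, the loss needs no sampling-based estimate of the divergence, which is the source of the simpler and more stable training described above.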

For more details on ClariNet, please check out our paper and audio samples.