2019-12-10


Achieving versatile, human-level locomotion is the holy grail of humanoid robot design. It remains a top research challenge because human movement is an innate ability that emerges from a complex, highly coordinated interaction among hundreds of muscles, bones, tendons, joints, and more.

We’re excited to present our recent research results in this area, which we believe will advance the field: our Baidu Research team trained a controller that makes a 3D human musculoskeletal model walk and run when given velocity commands.

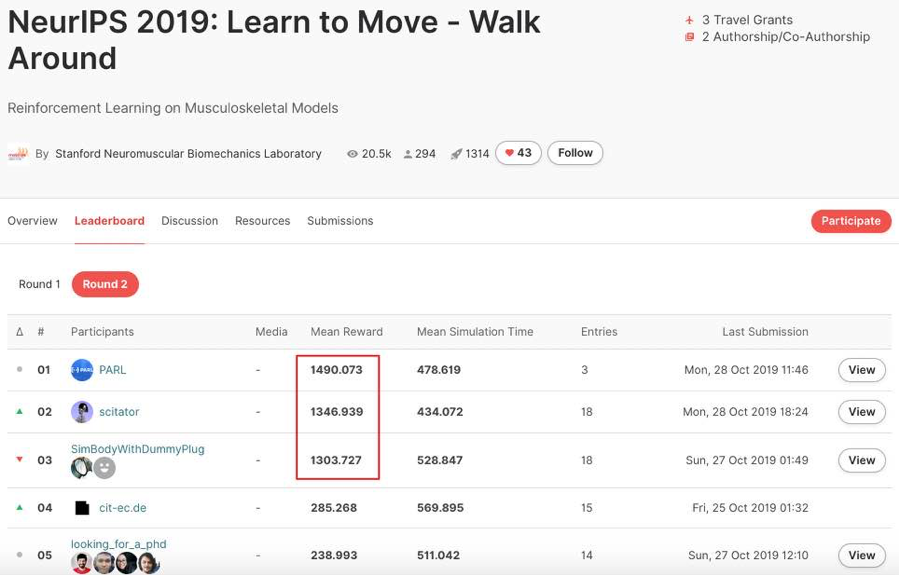

Our results topped the NeurIPS 2019: Learn to Move Challenge in both the Best Performance Track and the Machine Learning Track. We accomplished this using popular reinforcement learning techniques and our home-grown framework PARL, which contributed to a significant performance improvement and played a key role in winning the challenge.

The NeurIPS 2019: Learn to Move Challenge

To understand human movement, scientists have been running physics-based biomechanical simulations that capture muscle-tendon dynamics. Although previous simulated controllers could reproduce stereotypical human-like movements such as normal walking, they were mostly limited to generating steady behaviors, leaving unexplained how humans produce the diverse, transitional movements needed to navigate complex and dynamic environments.

That’s why the annual Learn to Move challenge (previously known as Learning to Run) has drawn increasing interest from the machine learning community over the past few years. Researchers believe reinforcement learning, a powerful trial-and-error learning approach, can train simulated physics-based biomechanical models to produce human-like behaviors in complex environments and help us understand how humans exhibit such a rich repertoire of locomotion behaviors.

Organized by the Stanford Neuromuscular Biomechanics Laboratory since 2017, Learn to Move is a well-recognized competition that tests how well learning algorithms can control simulated muscles. It is one of the official competition tracks at NeurIPS, a top conference in machine learning and computational neuroscience.

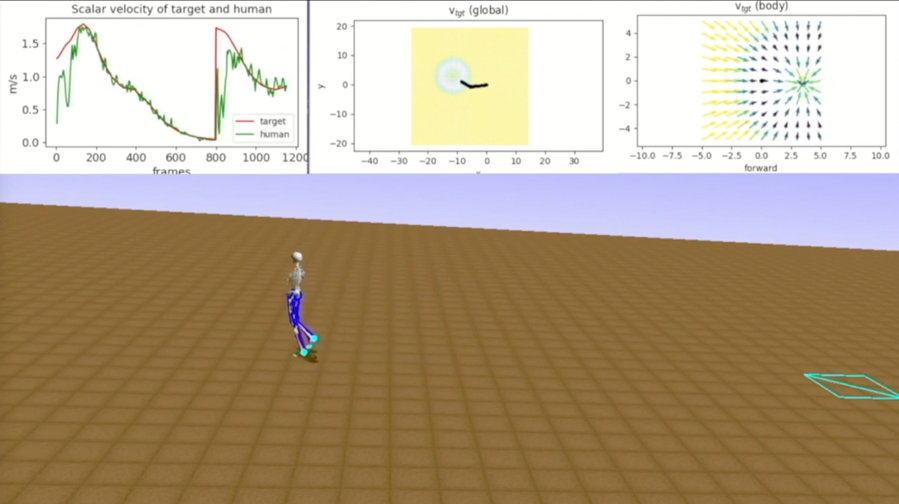

This year, the Learn to Move challenge tasked participants with developing a controller for a physiologically plausible 3D human model that moves in OpenSim, a physics-based simulation environment. The challenge comprised two rounds: in the first round, contestants had to train their models to change velocity in real time; the second round raised the difficulty by requiring the model to change direction anywhere within 360 degrees.
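To make the task more concrete, here is a minimal sketch of what a velocity command and a tracking score might look like. It assumes a command is a speed plus a heading in degrees, and that a controller is scored by the squared error between its measured ground-plane velocity and the commanded one. `VelocityCommand`, `sample_command`, `tracking_penalty`, the speed range, and the penalty form are all illustrative assumptions, not the official Learn to Move reward or environment API.

```python
import math
import random
from dataclasses import dataclass
from typing import Tuple


@dataclass
class VelocityCommand:
    """Hypothetical velocity command: speed in m/s and heading in degrees."""

    speed: float
    heading_deg: float

    def target_velocity(self) -> Tuple[float, float]:
        """Convert speed and heading into a target (x, z) velocity on the ground plane."""
        rad = math.radians(self.heading_deg)
        return (self.speed * math.cos(rad), self.speed * math.sin(rad))


def sample_command(rng: random.Random, allow_turns: bool) -> VelocityCommand:
    """Round-1 style commands vary speed only; round-2 style also pick any heading."""
    speed = rng.uniform(0.5, 1.5)  # illustrative range, not the official one
    heading = rng.uniform(0.0, 360.0) if allow_turns else 0.0
    return VelocityCommand(speed, heading)


def tracking_penalty(actual: Tuple[float, float], cmd: VelocityCommand) -> float:
    """Squared error between the model's measured velocity and the commanded one."""
    tx, tz = cmd.target_velocity()
    return (actual[0] - tx) ** 2 + (actual[1] - tz) ** 2
```

For example, a command of 1.0 m/s at 90 degrees asks for motion purely along z. A model that stays still would incur a penalty of about 1.0, and smaller penalties mean closer tracking.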

Although the challenge has become more difficult year over year, our research into reinforcement learning and our home-grown PARL framework have helped us achieve top results two years running. Our model can perform movements that are difficult even for humans, such as suddenly turning around from a standing position and then walking at a specific speed.

“Baidu's solution demonstrates that it is possible to produce rapid turns with no internal yaw motions (the human model does not have internal yaw degrees of freedom; i.e. no internal or external rotation). To my knowledge, this has not been shown before and has biomechanical implications in how humans produce rapid turning motions,” says Seungmoon Song, the lead organizer of Learn to Move.

Teaching AI to Move Like a Human

Curriculum learning, a popular training method that improves learning performance by gradually raising task difficulty, was a key technique in our approach. To train a model that walks and runs like a human, we started with a relatively simple scenario in which the model learns its posture during high-speed running, and then gradually lowered the target speed while improving its stability.
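The high-to-low-speed schedule described above can be sketched as a simple staged loop. This is a minimal illustration under stated assumptions: stages step the target speed down linearly, and training moves to the next stage once a hypothetical `train_stage` callback reports a score at or above a fixed threshold. The function names, the linear schedule, and the threshold rule are illustrative choices, not taken from the team's code.

```python
from typing import Callable, List


def speed_curriculum(start_speed: float, end_speed: float, num_stages: int) -> List[float]:
    """Target speeds that step down linearly from a fast run to the final speed."""
    if num_stages < 2:
        return [end_speed]
    step = (start_speed - end_speed) / (num_stages - 1)
    return [start_speed - i * step for i in range(num_stages)]


def run_curriculum(
    train_stage: Callable[[float], float],
    speeds: List[float],
    success_threshold: float,
    max_iters_per_stage: int = 100,
) -> List[int]:
    """Train at each target speed until its score clears the threshold.

    `train_stage(speed)` is a hypothetical callback that runs one training
    iteration at the given target speed and returns an evaluation score
    (for example, a stability or return measure). It is assumed to keep
    updating the same policy across stages, so skills from faster stages
    carry over to slower ones. Returns the iterations spent on each stage.
    """
    if max_iters_per_stage < 1:
        raise ValueError("max_iters_per_stage must be at least 1")
    iters_used = []
    for speed in speeds:
        it = 0
        for it in range(1, max_iters_per_stage + 1):
            if train_stage(speed) >= success_threshold:
                break
        iters_used.append(it)
    return iters_used
```

As a toy check, a stub callback whose score rises by 0.25 per call, with a threshold of 1.0, needs four iterations on the first stage and passes each later stage immediately. This mimics posture learned at high speed transferring to slower gaits.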

The well-trained posture allows the model to maintain balance while flexibly handling changes in speed and direction. Comparing participants' submitted videos of their models walking in the simulated environment, we found that our model walks more naturally and more like a human than the others.

Most algorithms we used in this challenge come from PARL, our home-grown reinforcement learning framework. PARL provides implementations that consistently reproduce the results of many popular algorithms, such as REINFORCE, DDPG, PPO, and more. Developers can use these complex algorithms simply by importing them.

Our team also leveraged PARL’s distributed computing feature to train the model in parallel. To achieve high-performance parallel computing, we wrote our code with multiple threads and then added PARL’s parallelization decorators so the work could run on computing resources across different machines.
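The dispatch pattern described above can be sketched with the Python standard library. This local sketch only mimics the structure: a thread pool submits one simulated episode per actor and gathers the results in order. `RolloutActor`, `run_episode`, and `collect_returns` are hypothetical names, and the "episode" is a random placeholder rather than an OpenSim simulation. PARL's documentation describes marking such a class with the `@parl.remote_class` decorator and calling `parl.connect(...)` to attach to a cluster, after which instances run on remote machines. Because the heavy computation then leaves the local Python process, ordinary threads are enough to drive many machines in parallel.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List


class RolloutActor:
    """Hypothetical actor that simulates episodes with its own random generator.

    With PARL, a class like this would be decorated with @parl.remote_class so
    that each instance runs on a cluster machine; here it runs in-process.
    """

    def __init__(self, seed: int) -> None:
        self.rng = random.Random(seed)

    def run_episode(self, target_speed: float) -> float:
        # Placeholder for a musculoskeletal simulation: the "return" is the
        # negative deviation of a noisy achieved speed from the target.
        achieved = self.rng.gauss(target_speed, 0.1)
        return -abs(achieved - target_speed)


def collect_returns(actors: List[RolloutActor], target_speed: float) -> List[float]:
    """Submit one episode per actor to a thread pool and gather results in order."""
    with ThreadPoolExecutor(max_workers=max(1, len(actors))) as pool:
        futures = [pool.submit(actor.run_episode, target_speed) for actor in actors]
        return [future.result() for future in futures]
```

Giving each actor its own seeded generator keeps results reproducible regardless of which thread finishes first, which matters when comparing training runs.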

Reinforcement learning has been one of our major research focuses since 2012, when we applied our multi-armed bandit research to the Baidu search engine and other recommendation products. This January, we officially open-sourced PARL to give more developers an easy-to-use framework.

With a mission of making the complicated world simpler through technology, we hope our solution to this challenge will not only facilitate the development of humanoid robots but also advance our understanding of human-robot interaction, a key technology for many tech-for-good use cases such as designing prostheses to help rehabilitate people with limb amputations.

Our models and code are publicly available on GitHub. The performance video of our model is available on YouTube.

Note: if you are at NeurIPS on Dec 14, we encourage you to attend the Deep Reinforcement Learning workshop, where our researchers will present their solution at 4 pm.