2019-12-11


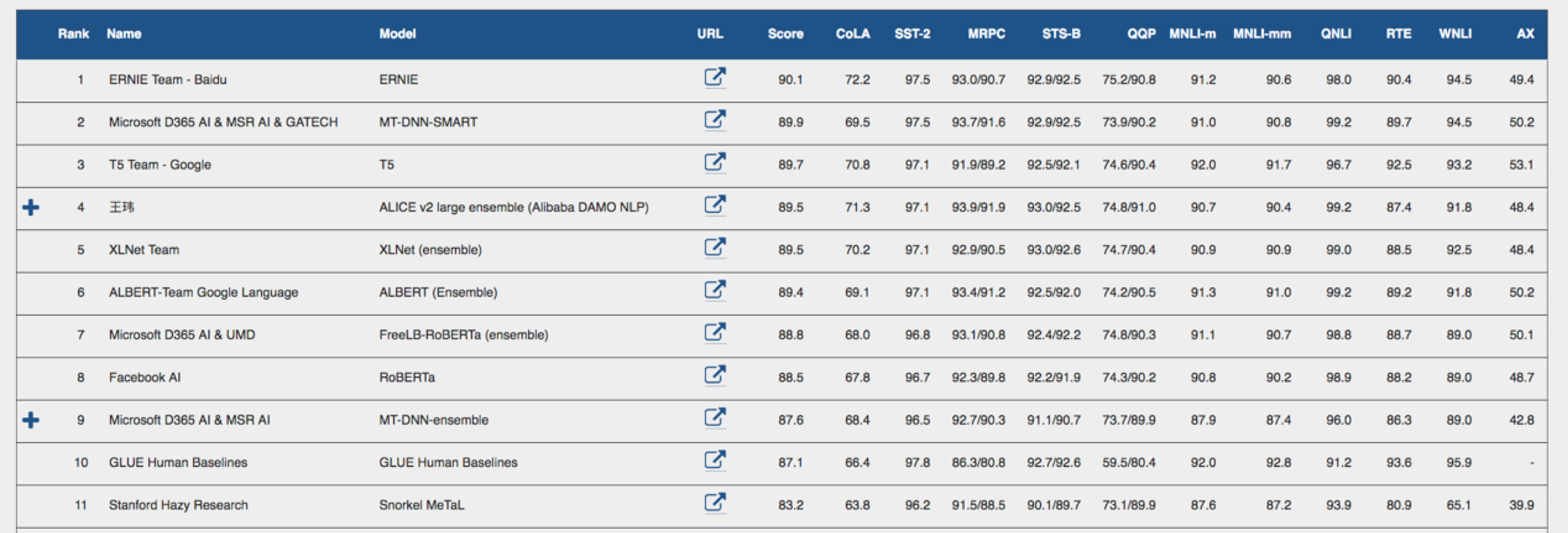

With natural language processing being widely adopted across a growing number of use cases, from search engines to mobile smart assistants, leading pre-trained language models like Baidu’s ERNIE (Enhanced Representation through kNowledge IntEgration) are receiving strong attention within the machine learning community. After substantial progress since its release earlier this year, today we are excited to announce that ERNIE has achieved new state-of-the-art performance on GLUE and has become the world’s first model to score over 90 on the macro-average score (90.1).

GLUE (General Language Understanding Evaluation) is a widely recognized multi-task benchmark and analysis platform for natural language understanding (NLU). It comprises multiple NLU tasks, including question answering, sentiment analysis, and textual entailment, along with an associated online platform for model evaluation, comparison, and analysis.
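The macro-average score mentioned above is an unweighted mean across tasks. The sketch below assumes the usual GLUE convention that a task reporting two metrics (for example F1 and accuracy) is first reduced to the mean of those metrics; the task scores in it are hypothetical placeholders, not leaderboard numbers.

```python
from statistics import mean


def glue_macro_average(task_metrics: dict[str, list[float]]) -> float:
    """Average each task's metrics first, then take an unweighted mean across tasks."""
    per_task = [mean(metrics) for metrics in task_metrics.values()]
    return mean(per_task)


# Hypothetical scores for illustration only; these are not real GLUE results.
scores = {
    "CoLA": [60.0],         # single metric (Matthews correlation)
    "MRPC": [90.0, 86.0],   # two metrics (F1, accuracy) averaged to 88.0
    "SST-2": [95.0],        # single metric (accuracy)
}

print(glue_macro_average(scores))  # 81.0
```

Because every task counts equally, a gain on a small task such as CoLA moves the overall score as much as the same gain on a large one.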

ERNIE is a continual pre-training framework that incrementally builds pre-training tasks and learns them through sequential multi-task learning. We introduced ERNIE 1.0 early this year and released the improved ERNIE 2.0 model in July. ERNIE 2.0 outperformed two competing pre-training models, Google’s BERT and Carnegie Mellon University’s XLNet, on 16 NLP tasks in both Chinese and English.

This time, ERNIE topped the public GLUE leaderboard, followed by Microsoft's MT-DNN-SMART and Google’s T5. Specifically, ERNIE achieved state-of-the-art (SOTA) results on CoLA, SST-2, QQP, and WNLI, and outperforms Google’s BERT by a significant margin of about 10 points on the overall score.

Why does ERNIE perform so well?

The major contribution of ERNIE 2.0 is continual pre-training. Our researchers create different kinds of unsupervised pre-training tasks from the available big data and prior knowledge, and incrementally update the model via multi-task learning.
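A minimal sketch of the continual multi-task idea, assuming a simplified schedule: each stage introduces one new pre-training task, and training at that stage samples uniformly from every task introduced so far, so earlier tasks keep being revisited rather than forgotten. The uniform sampling, the step counts, and the task names are illustrative assumptions, not ERNIE's actual configuration.

```python
import random


def continual_multitask_schedule(tasks, steps_per_stage, seed=0):
    """Yield (stage, task) pairs for a simplified continual multi-task schedule.

    Assumption: uniform sampling over all tasks seen so far; the real
    framework's allocation of training iterations may differ.
    """
    rng = random.Random(seed)
    active = []
    for stage, task in enumerate(tasks):
        active.append(task)  # incrementally introduce one new pre-training task
        for _ in range(steps_per_stage):
            yield stage, rng.choice(active)  # earlier tasks are still trained on


# Hypothetical task names for illustration.
tasks = ["knowledge_masking", "sentence_reordering", "coreference"]
schedule = list(continual_multitask_schedule(tasks, steps_per_stage=4))

for stage in range(len(tasks)):
    seen = sorted({task for s, task in schedule if s == stage})
    print(stage, seen)
```

The design point is that adding a task never means dropping the previous ones; a task can only appear from its own stage onward.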

On top of ERNIE 2.0, our researchers made several improvements to knowledge masking and application-oriented tasks, with the aim of advancing the model's general semantic representation capability.

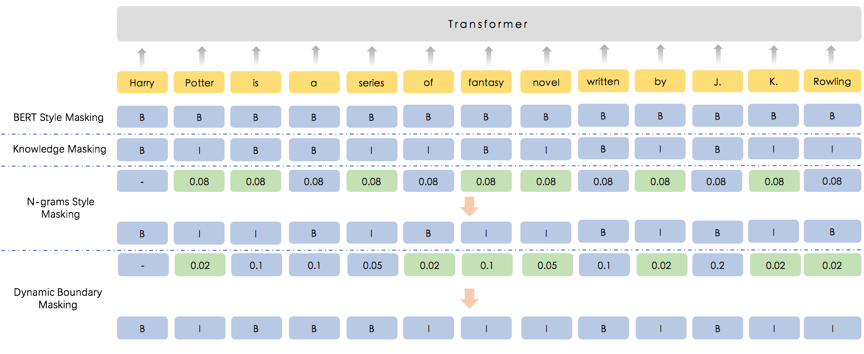

To improve the knowledge masking strategy, we proposed a new dynamic knowledge masking algorithm based on mutual information. It addresses the low diversity of masked semantic units in ERNIE 1.0, whose masking depends heavily on phrase and named entity recognition tools.

First, the dynamic knowledge masking algorithm extracts high-confidence semantic units from massive datasets through hypothesis testing and calculates their mutual information. Based on these values and their statistics, the model computes a probability distribution over the semantic units, which serves as the sampling probability when masking. This algorithm not only preserves ERNIE 1.0’s ability to model knowledge units but also increases the diversity of prior knowledge used for masking.
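The pipeline above (extract units, score them by mutual information, turn the scores into sampling probabilities) can be sketched as follows. Several pieces here are assumptions rather than ERNIE's published details: candidate units are restricted to word bigrams, a simple minimum-count filter stands in for the hypothesis test, pointwise mutual information (PMI) is used as the score, and a softmax maps scores to probabilities.

```python
import math
import random
from collections import Counter


def count_ngrams(corpus):
    """Count unigrams and adjacent bigrams over a tokenized corpus."""
    unigrams, bigrams = Counter(), Counter()
    for sent in corpus:
        unigrams.update(sent)
        bigrams.update(zip(sent, sent[1:]))
    return unigrams, bigrams


def pmi(unigrams, bigrams, min_count=2):
    """PMI = log p(a,b) / (p(a) p(b)) for bigrams seen at least min_count times.

    Assumption: the min_count filter is a stand-in for the hypothesis test.
    """
    n_uni = sum(unigrams.values())
    n_bi = sum(bigrams.values())
    scores = {}
    for (a, b), c in bigrams.items():
        if c < min_count:
            continue
        p_ab = c / n_bi
        p_a, p_b = unigrams[a] / n_uni, unigrams[b] / n_uni
        scores[(a, b)] = math.log(p_ab / (p_a * p_b))
    return scores


def sampling_distribution(scores, temperature=1.0):
    """Softmax over scores (assumed mapping): higher-MI units are masked more often."""
    top = max(scores.values())
    weights = {u: math.exp((s - top) / temperature) for u, s in scores.items()}
    z = sum(weights.values())
    return {u: w / z for u, w in weights.items()}


def sample_units(dist, k, rng):
    """Draw k semantic units to mask, with replacement, according to dist."""
    units = list(dist)
    return rng.choices(units, weights=[dist[u] for u in units], k=k)


corpus = [s.split() for s in [
    "new york is a big city",
    "she moved to new york",
    "new york has a big park",
    "a big city never sleeps",
]]
unigrams, bigrams = count_ngrams(corpus)
dist = sampling_distribution(pmi(unigrams, bigrams))
print(sample_units(dist, 3, random.Random(0)))
```

Re-sampling at each masking step is what makes the masking "dynamic": the same sentence can have different units masked in different epochs.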

The figure compares different masking algorithms, where B (Begin) marks the beginning of a text span and I (Inside) marks a position that forms a span with the word labeled B. Taking the sentence in the figure as an example, the dynamic knowledge masking algorithm samples semantic units according to the probability distribution and dynamically constructs the units to be masked.
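Once units have been sampled, they must be located in a sentence and turned into masked spans with B/I labels. The sketch below assumes multi-word units are matched greedily and without overlap, and adds an "O" label for unmasked tokens; the example sentence, the `[MASK]` token, and the helper names are hypothetical.

```python
def find_spans(tokens, units):
    """Locate non-overlapping occurrences of sampled units; spans are (start, end), end exclusive."""
    spans, i = [], 0
    while i < len(tokens):
        for unit in units:
            n = len(unit)
            if n and tuple(tokens[i:i + n]) == tuple(unit):
                spans.append((i, i + n))
                i += n  # skip past the matched unit so spans never overlap
                break
        else:
            i += 1  # no unit starts here; move to the next token
    return spans


def mask_spans(tokens, spans, mask_token="[MASK]"):
    """Mask each span and label it B (first token) / I (rest); unmasked tokens get O."""
    labels = ["O"] * len(tokens)
    masked = list(tokens)
    for start, end in spans:
        for i in range(start, end):
            labels[i] = "B" if i == start else "I"
            masked[i] = mask_token
    return masked, labels


tokens = "harry potter is a series of fantasy novels".split()
units = [("harry", "potter"), ("fantasy", "novels")]
masked, labels = mask_spans(tokens, find_spans(tokens, units))
print(masked)  # ['[MASK]', '[MASK]', 'is', 'a', 'series', 'of', '[MASK]', '[MASK]']
print(labels)  # ['B', 'I', 'O', 'O', 'O', 'O', 'B', 'I']
```

Masking whole spans rather than single tokens forces the model to predict a multi-word unit from its context instead of completing it from a visible neighbor.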

To improve ERNIE's performance on application tasks, we also constructed pre-training tasks specific to different applications. For example, our team added a coreference resolution task, which identifies all expressions in a text that refer to the same entity. On an unsupervised corpus, we masked some expressions of the same entity in a text, randomly replaced them with different expressions, and trained the model to predict whether the replaced text is the same as the original.
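A simplified sketch of how such training examples might be generated: pick one mention of an entity, then either keep the original expression or swap in a distractor, and label the example by whether the text matches the original. The 50% keep rate, the distractor list, and the omission of an explicit masking step are assumptions for illustration; the sentence and spans are hypothetical.

```python
import random


def make_coref_example(tokens, mention_spans, distractors, rng, p_keep=0.5):
    """Return (new_tokens, label): label 1 if the chosen mention keeps its
    original expression, 0 if it was replaced by a different one.

    tokens:        list[str], the sentence
    mention_spans: (start, end) spans, end exclusive, referring to the same entity
    distractors:   token lists used as replacement expressions (assumed given)
    p_keep:        assumed probability of keeping the original expression
    """
    start, end = rng.choice(mention_spans)
    original = tokens[start:end]
    if rng.random() < p_keep:
        replacement = original
    else:
        replacement = list(rng.choice(distractors))
    new_tokens = tokens[:start] + replacement + tokens[end:]
    label = int(replacement == original)
    return new_tokens, label


tokens = "baidu released ernie and the company open-sourced it".split()
mention_spans = [(0, 1), (4, 6)]  # "baidu", "the company"
distractors = [["google"], ["the", "university"]]

rng = random.Random(0)
for _ in range(3):
    print(make_coref_example(tokens, mention_spans, distractors, rng))
```

The label is a binary classification target, so this task can share the same encoder and be added to the multi-task mix like any other pre-training task.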

Additionally, we augmented the training data and optimized the model structure. We added dialogue data to the pre-training dataset to improve semantic similarity calculation: in dialogue data, utterances that correspond to the same reply are usually semantically similar. By leveraging these semantic relations, we trained ERNIE to better model semantic correlation, which improves performance on semantic similarity tasks such as QQP.
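The heuristic that utterances sharing a reply are semantically similar can be turned into weak similarity pairs by grouping utterances by their reply. This is a minimal sketch assuming dialogue data arrives as (utterance, reply) pairs and that replies are matched by exact string equality; the example turns are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def similar_pairs_from_dialogue(dialogue_turns):
    """Group utterances by the reply they received and emit every pair within
    a group as a (weak) positive example for semantic similarity.

    Assumption: replies are compared by exact string match.
    """
    by_reply = defaultdict(list)
    for utterance, reply in dialogue_turns:
        by_reply[reply].append(utterance)
    pairs = []
    for utterances in by_reply.values():
        unique = sorted(set(utterances))  # deduplicate and fix pair order
        pairs.extend(combinations(unique, 2))
    return pairs


turns = [
    ("how old are you", "i am 20"),
    ("what is your age", "i am 20"),
    ("where do you live", "in beijing"),
    ("what city are you in", "in beijing"),
    ("hi", "hello"),
]
print(similar_pairs_from_dialogue(turns))
# [('how old are you', 'what is your age'), ('what city are you in', 'where do you live')]
```

Exact matching keeps the pairs high-precision but misses paraphrased replies; a looser grouping would yield more pairs at the cost of noisier labels.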

We have been applying ERNIE’s semantic representations to real-world application scenarios. For example, our third-quarter 2019 financial results highlighted that the percentage of user queries satisfied by our top search result improved by 16% in absolute terms, an improvement that came from adopting ERNIE for question answering in our search engine.

While language understanding remains a difficult challenge, our results on GLUE indicate that pre-training language models with continual training and multi-task learning is a promising direction for NLP research. We will continue to improve ERNIE through the continual pre-training framework.

Read the full paper, "ERNIE 2.0: A Continual Pre-training Framework for Language Understanding," on arXiv. The paper has been accepted to AAAI 2020. The models and code are available on GitHub.