2019-07-30

Baidu today released ERNIE 2.0 (Enhanced Representation through kNowledge IntEgration), a brand-new natural language understanding framework. Based on this framework, Baidu also open sourced the ERNIE 2.0 model, a pre-trained language understanding model which achieved state-of-the-art (SOTA) results and outperformed BERT and the recent XLNet in 16 NLP tasks in both Chinese and English.

Unsupervised pre-trained language representation models like BERT, XLNet and ERNIE 1.0 have made significant breakthroughs in various natural language understanding tasks, including natural language inference, semantic similarity, named entity recognition, sentiment analysis and question-answer matching. This indicates that pre-training on large-scale corpora could play a crucial role in natural language processing.

The pre-training procedures of the SOTA pre-trained models such as BERT, XLNet and ERNIE 1.0 are mainly based on several simple tasks modeling the co-occurrence of words or sentences. For example, BERT constructed a bidirectional language model task and a next sentence prediction task to capture the co-occurrence information of words and sentences; XLNet constructed a permutation language model task to capture the co-occurrence information of words.
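To make the idea of a co-occurrence task concrete, here is a minimal sketch of how training examples for a masked-word objective might be built. It is a simplification under stated assumptions, not BERT's actual preprocessing: the function name `mask_tokens`, the 15% default rate and the whitespace tokenization are illustrative choices, and real implementations typically use subword tokens and more elaborate replacement rules.

```python
import random

MASK = "[MASK]"  # placeholder symbol; the name is an illustrative assumption


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens.

    Returns (masked_tokens, labels). labels[i] holds the original token
    where position i was masked, and None elsewhere, so a model would be
    trained to predict only the hidden words from their context.
    """
    rng = random.Random(seed)  # seeded for reproducible examples
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


if __name__ == "__main__":
    sentence = "pre-training on large corpora helps language understanding".split()
    masked, labels = mask_tokens(sentence, mask_prob=0.3, seed=42)
    print(masked)
    print(labels)
```

The model only ever sees which words tend to appear together, which is why such objectives capture co-occurrence but little else.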

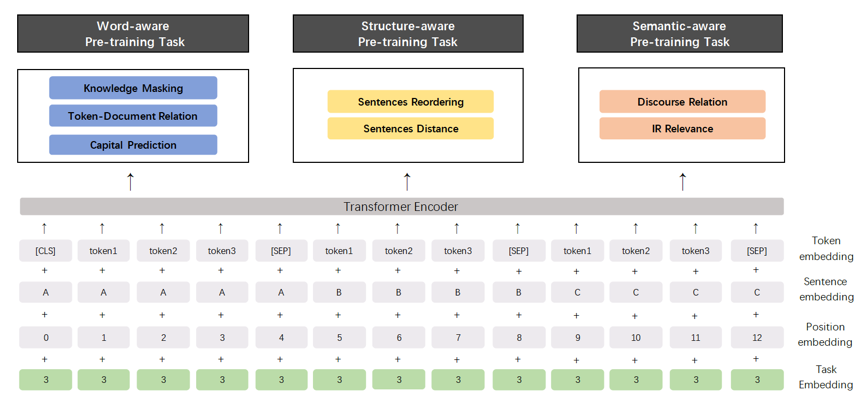

However, besides co-occurrence, there is other valuable lexical, syntactic and semantic information in training corpora. For example, named entities, such as names, locations and organizations, could contain conceptual information. Sentence order and proximity between sentences would allow models to learn structure-aware representations. What’s more, semantic similarity at the document level or discourse relations among sentences could train the models to learn semantic-aware representations. Could performance improve further if the model were trained to continually learn a larger variety of tasks?

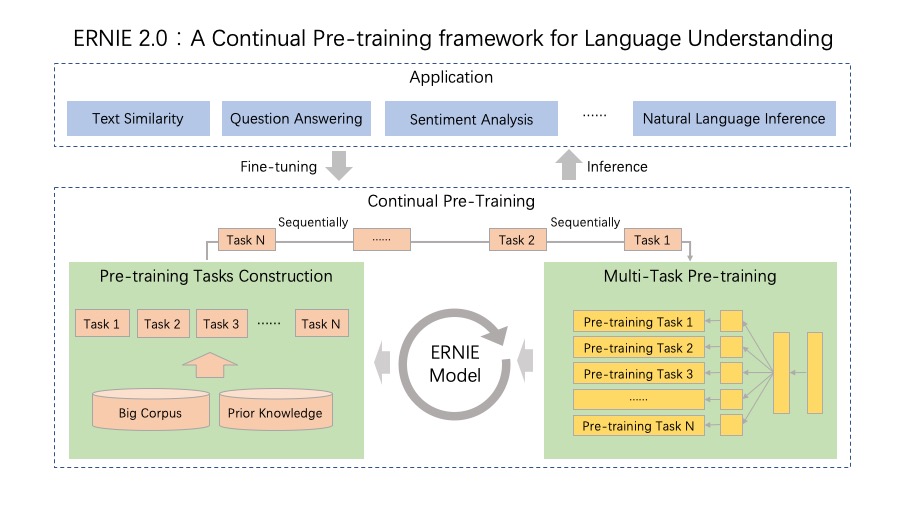

Based on this idea, Baidu proposed a continual pre-training framework for language understanding in which pre-training tasks can be incrementally built and learned through constant multi-task learning. In this framework, different customized tasks can be introduced at any time and are trained through multi-task learning, which permits the encoding of lexical, syntactic and semantic information across tasks. When given a new task, the framework can incrementally train the distributed representations without forgetting what was learned from previous tasks.
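The incremental scheme described above can be sketched as a simple training schedule. This is an illustration under assumptions, not Baidu's implementation: the function `continual_multitask_schedule`, the round-robin interleaving, the fixed number of steps per stage and the task names are all hypothetical. The only point it shows is that each new stage adds one task and keeps training on the earlier ones, so previously learned tasks are revisited rather than abandoned.

```python
def continual_multitask_schedule(tasks, steps_per_stage):
    """Build a list of (stage, task) training steps.

    Stage k trains jointly on the first k tasks, visiting them
    round-robin, so earlier tasks keep receiving updates whenever
    a new task is introduced.
    """
    schedule = []
    for stage in range(1, len(tasks) + 1):
        active = tasks[:stage]  # all tasks introduced so far
        for step in range(steps_per_stage):
            schedule.append((stage, active[step % len(active)]))
    return schedule


if __name__ == "__main__":
    # Hypothetical task names, loosely following the lexical,
    # syntactic and semantic signals mentioned in the text.
    tasks = ["entity_masking", "sentence_order", "discourse_relation"]
    for stage, task in continual_multitask_schedule(tasks, steps_per_stage=6):
        print(stage, task)
```

In this sketch `steps_per_stage` should be at least the number of tasks, otherwise the later tasks in a stage would never be visited. A real system would also carry model parameters from one stage to the next, which the schedule alone does not show.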

The Structure of the Newly Released ERNIE 2.0 Model

As a brand-new continual pre-training framework for language understanding, ERNIE 2.0 not only achieves SOTA performance but also gives developers a practical way to build their own NLP models. The source code for fine-tuning ERNIE 2.0 and the pre-trained English models can be downloaded at https://github.com/PaddlePaddle/ERNIE/.

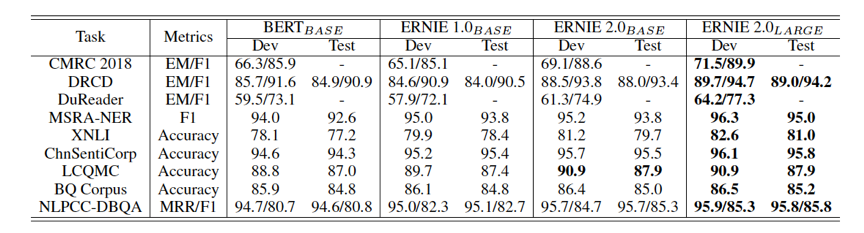

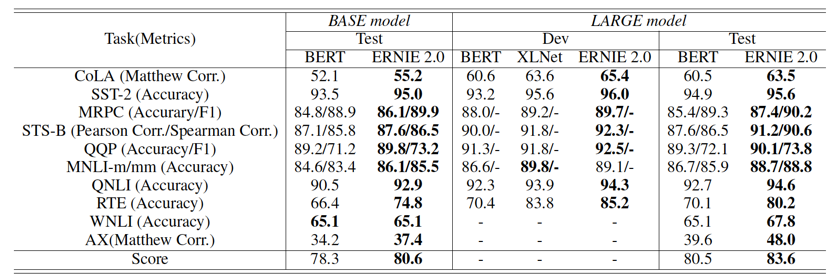

Baidu compared the ERNIE 2.0 model with existing SOTA pre-trained models on the English GLUE benchmark and on 9 popular Chinese datasets. The results show that ERNIE 2.0 outperforms BERT and XLNet on 7 GLUE language understanding tasks and beats BERT on all 9 Chinese NLP tasks, including machine reading comprehension on the DuReader dataset, sentiment analysis and question answering.

Specifically, according to test results on the GLUE datasets, we observed that ERNIE 2.0 outperforms BERT and XLNet on English tasks for both the base model and the large model. Furthermore, the ERNIE 2.0 large model achieves the best performance and sets new state-of-the-art results on the Chinese NLP tasks.
