2019-08-19

Here we announce DuTongChuan (an abbreviation of Baidu simultaneous interpreting in Chinese Pinyin), the first context-aware translation model for simultaneous interpreting. The model achieves performance comparable to that of human interpreters, delivering high-quality simultaneous speech translation with low latency.

The model continuously reads streaming ASR output while determining the boundaries of Information Units (IUs) one after another. The idea is inspired by human simultaneous interpreters, who translate incoming speech by splitting it into meaningful information units rather than waiting until the end of a whole sentence, in order to balance quality and latency. Each detected IU is translated fluently using one of two simple yet effective decoding strategies: partial decoding and context-aware decoding. Specifically, IUs at the beginning of each sentence are sent to the partial decoding module. Other IUs, appearing either in the middle or at the end of a sentence, are translated by the context-aware decoding module. This module exploits additional context from the history so that the model can generate a coherent translation.

Figure 1: The context-aware translation model consists of three main parts: the IU detector, the partial decoding module, and the context-aware decoding module.

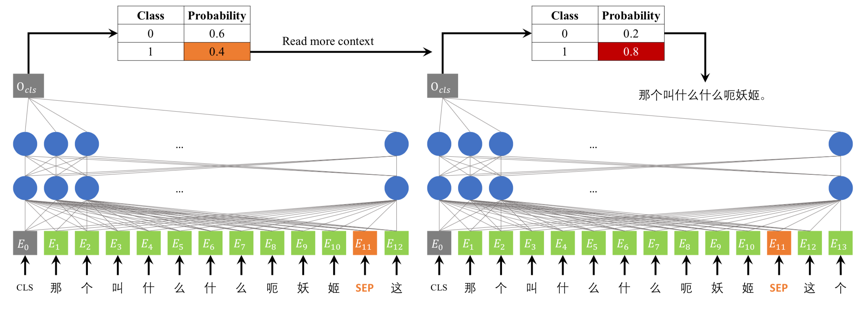
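
The control flow described above can be sketched roughly as follows. This is only an illustrative outline, not the DuTongChuan implementation: the function `interpret_stream`, the three-way detector labels (`"none"`, `"iu"`, `"sentence"`), and the toy detector and decoders are all hypothetical stand-ins for the real models.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Tokens = List[str]


def interpret_stream(
    asr_tokens: Iterable[str],
    detect: Callable[[Tokens], str],
    partial_decode: Callable[[Tokens], str],
    context_decode: Callable[[Tokens, List[str], Tokens], str],
) -> Iterator[Tuple[str, str]]:
    """Yield (module_name, translation) for each detected IU.

    `detect` is a hypothetical detector returning "none", "iu" (IU boundary)
    or "sentence" (IU boundary that also ends the sentence).
    """
    buffer: Tokens = []          # tokens of the IU currently being built
    src_history: Tokens = []     # source tokens of earlier IUs in this sentence
    tgt_history: List[str] = []  # translations already emitted for this sentence
    for token in asr_tokens:
        buffer.append(token)
        label = detect(buffer)
        if label == "none":
            continue  # keep reading the stream
        if not tgt_history:
            # First IU of a sentence: no history is available yet.
            module, output = "partial", partial_decode(buffer)
        else:
            # Later IUs: decode with the source and target history as context.
            module, output = "context", context_decode(src_history, tgt_history, buffer)
        yield module, output
        if label == "sentence":
            src_history, tgt_history = [], []  # a new sentence starts
        else:
            src_history = src_history + buffer
            tgt_history = tgt_history + [output]
        buffer = []


# Toy stand-ins (assumptions for illustration only).
def toy_detect(buf: Tokens) -> str:
    if buf[-1] == ".":
        return "sentence"
    if buf[-1] == ",":
        return "iu"
    return "none"


def toy_partial(iu: Tokens) -> str:
    return " ".join(iu)


def toy_context(src_hist: Tokens, tgt_hist: List[str], iu: Tokens) -> str:
    return f"[ctx={len(tgt_hist)}] " + " ".join(iu)


stream = "a b , c d , e . f g .".split()
results = list(interpret_stream(stream, toy_detect, toy_partial, toy_context))
for module, text in results:
    print(module, "->", text)
```

In this toy run, the first IU of each sentence goes through the partial decoder, while later IUs receive the translations emitted so far as context.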

We treat IU boundary detection as a classification problem and propose a novel dynamic-context-based method.

Figure 2: The IU detector utilizes dynamic context to make a reliable decision.

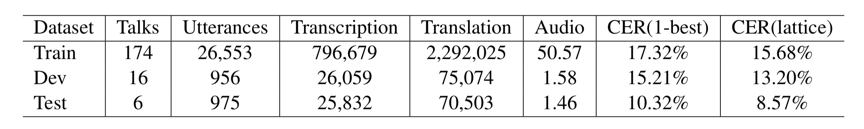
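
One possible reading of boundary detection with dynamic context is sketched below: the detector first tries to decide with no lookahead and consults additional future words only when it is not yet confident. This is an assumption made for illustration, not the exact formulation in the paper; `detect_boundary`, `toy_score`, `max_future`, and `threshold` are hypothetical names and values, and the scoring function stands in for a trained classifier.

```python
from typing import Callable, Optional, Sequence


def detect_boundary(
    words: Sequence[str],
    position: int,
    score: Callable[[Sequence[str], Sequence[str]], float],
    max_future: int = 2,
    threshold: float = 0.7,
) -> Optional[int]:
    """Return the number of future words needed to accept a boundary after
    words[position], or None if no context size was confident enough."""
    prefix = words[: position + 1]
    for k in range(max_future + 1):
        if position + 1 + k > len(words):
            break  # the stream has not produced k lookahead words yet
        future = words[position + 1 : position + 1 + k]
        # `score` is a hypothetical classifier giving P(boundary | prefix, future).
        if score(prefix, future) >= threshold:
            return k
    return None


def toy_score(prefix: Sequence[str], future: Sequence[str]) -> float:
    """Toy classifier: confident after a comma, or when 'but' comes next."""
    if prefix and prefix[-1] == ",":
        return 0.9
    if future and future[0] == "but":
        return 0.8
    return 0.1


with_comma = ["it", "rains", ",", "but", "we", "go"]
without_comma = ["it", "rains", "but", "we", "go"]

print(detect_boundary(with_comma, 2, toy_score))     # decided with no lookahead
print(detect_boundary(without_comma, 1, toy_score))  # needed one future word
print(detect_boundary(without_comma, 0, toy_score))  # no boundary accepted
```

The design trade-off is that less lookahead means lower latency, while more lookahead gives the classifier more evidence before it commits to a boundary.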

We also release the Baidu Speech Translation Corpus (BSTC) for open research. This dataset covers speeches in a wide range of domains, including IT, economy, culture, biology, and arts. We transcribe the talks carefully and have professional translators produce the English translations. This procedure is extremely difficult because of the large number of domain-specific terms, speech redundancies, and speakers’ accents. The dataset is expected to help researchers develop robust NMT models for speech translation.

Table 1: The summary of BSTC.

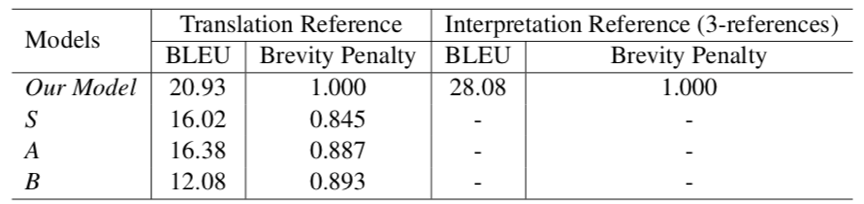

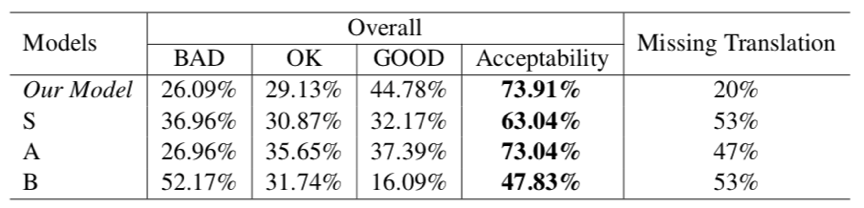

We asked three interpreters (S, A, B), each with 3 to 7 years of simultaneous interpreting (SI) experience, to simultaneously interpret the test speech from BSTC in a mock conference setting. Experiments show that our model outperforms human interpreters in terms of BLEU score (20.93 vs. 16.38) and manually assessed translation acceptability (73.91% vs. 73.04%).

It is worth mentioning that both automatic and human evaluation criteria are designed for evaluating written translation and have a special emphasis on adequacy and faithfulness. But in simultaneous interpreting, human interpreters routinely omit less-important information to overcome their limitations in working memory. As the last column in Table 3 shows, human interpreter A makes more omissions than our model, but still achieves comparable acceptability. Tireless machine translators, which stick to high adequacy, show great potential for simultaneous interpreting. Although the proposed method achieves very good results, it doesn’t mean that machines have now exceeded human interpreters in simultaneous interpreting. Obviously, it is necessary to design new evaluation metrics which consider both translation quality and latency to suit simultaneous interpreting. Moreover, apart from adequacy and fluency, we need new indicators, for example, discourse coherency, to assess translation quality.

Tables 2-3: Comparison between our model and human interpretation. The interpretation reference consists of a collection of interpretations from S, A and B. Our model is trained on the large-scale corpus.

DuTongChuan, the first industrial speech-to-speech translation system, has been deployed and successfully used at Baidu Create 2019 (Baidu AI Developer Conference). It achieves an acceptability of 85.71% for Chinese-English translation and 86.36% for English-Chinese translation. Moreover, the output speech lags behind the source speech by less than 3 seconds on average, which is comparable to human interpreters' latency and brings a better user experience.

Table 4: Results of DuTongChuan. C→E denotes the Chinese-English translation task, and E→C denotes the English-Chinese translation task.

Demo for DuTongChuan (Baidu Speech-to-Speech Simultaneous Interpreting System):

https://fanyi-api.baidu.com/api/trans/product/simultaneous

Baidu Speech Translation Corpus (BSTC):

http://ai.baidu.com/broad/subordinate?dataset=bstc

For details of the proposed method, please refer to our paper: https://arxiv.org/abs/1907.12984