2017-03-29


Despite tremendous progress, artificial intelligence is still limited in many ways. For example, in computer games, if an AI agent is not pre-programmed with game rules, it must try millions of times before figuring out the right moves to win. Humans can accomplish the same feat in a much shorter time, because we are good at transferring past knowledge to new tasks by using language.

In a game in which you must kill a dragon to win, an AI agent would need to try many other actions (firing at a wall, at a patch of flowers, etc.) before understanding that it must kill the dragon. However, if the AI agent understood language, a human could simply tell it: “kill the dragon to win the game.”

Language, grounded in visuals, plays an important role in how we generalize skills and apply them to new tasks, an ability that remains a major challenge for machines. Developing a sophisticated language system is crucial for machines to become truly intelligent and gain the ability to learn like humans.

As a first step towards this goal, we developed a system that combines supervised learning and reinforcement learning and allows a virtual teacher to teach language to a virtual AI agent from scratch by connecting the language with perceptions and actions, much as a parent would teach a baby.

After the training, our results show the AI agent is able to correctly interpret the teacher’s commands in natural language and take action accordingly. More importantly, the agent developed what we call a “zero-shot learning ability,” meaning the agent is able to understand unseen sentences. The research, we believe, brings us one step closer to teaching machines to learn like humans do.

Study Overview

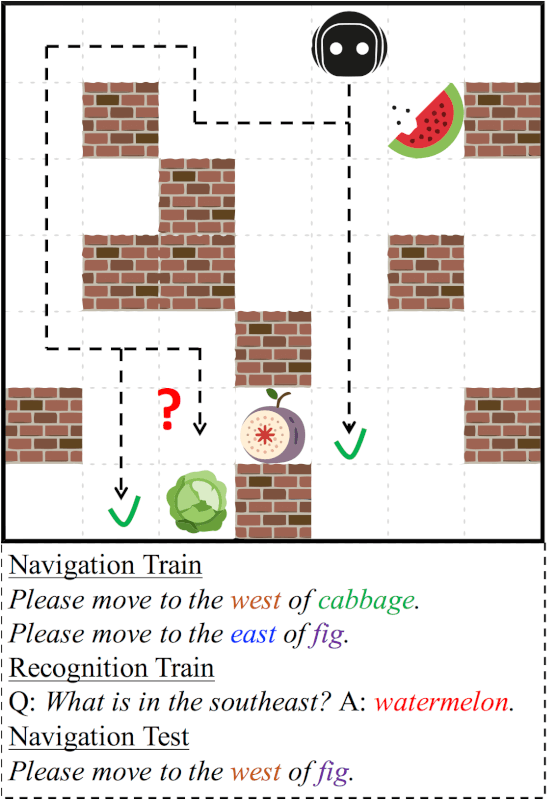

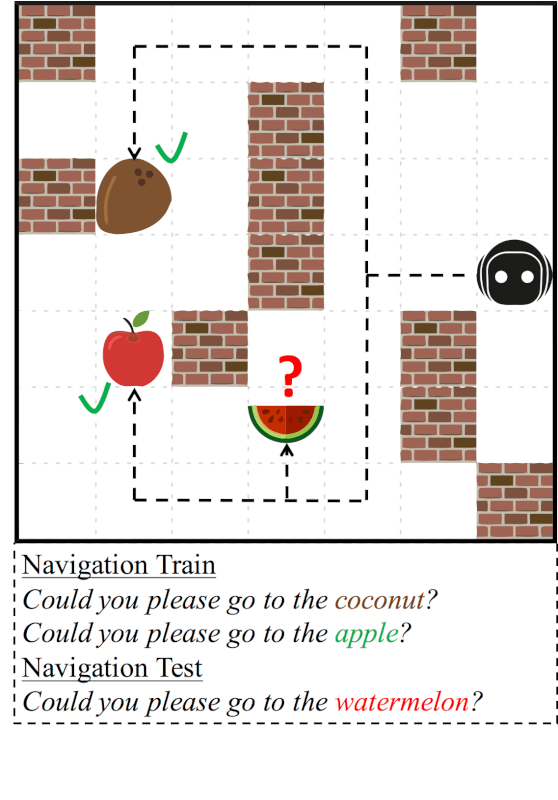

The study takes place in a 2D maze-like environment called XWORLD, where our virtual baby agent needs to navigate according to natural language commands issued by a virtual teacher. In the beginning, the agent knows nothing about the language: every word is equally meaningless. However, as it explores the environment, the teacher gives a positive reward when it succeeds in executing a command and a negative reward when it fails. To help it learn faster, the teacher also asks simple questions about the agent's surroundings while it is navigating, and the agent needs to answer them correctly. By encouraging correct actions and answers and penalizing incorrect ones, in both navigation and question answering (QA), the teacher trains the agent to understand natural language through extensive trial and error.

Some example commands include:

Please navigate to the apple.

Can you move to the grid between the apple and the banana?

Could you please go to the red apple?

Some example Q&A pairs are:

Q: What is the object in the north? A: Banana.

Q: Where is the banana? A: North.

Q: What is the color of the object in the west of the apple? A: Yellow.
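The feedback loop described above can be pictured with a minimal sketch. This is not the implementation used in the study: the function names, the dictionary-based map, and the reward values of +1.0 and -1.0 are all assumptions made for illustration, and the sketch only scores a finished attempt rather than modeling how the agent learns from the reward.

```python
# Toy sketch of the teacher's feedback. All names and values here are
# hypothetical; the post does not specify the actual reward magnitudes.
SUCCESS, FAILURE = 1.0, -1.0


def navigation_reward(objects, target, agent_pos):
    """Reward the agent if it ended on the cell holding the target object.

    `objects` maps an object name to its (row, col) cell in a toy grid.
    """
    return SUCCESS if objects.get(target) == agent_pos else FAILURE


def qa_reward(expected, answer):
    """Reward the agent if its one-word answer matches the teacher's answer."""
    return SUCCESS if answer.strip().lower() == expected.strip().lower() else FAILURE


# A toy map with two objects, and four attempts by the agent.
objects = {"apple": (0, 2), "banana": (3, 1)}

nav_ok = navigation_reward(objects, "apple", (0, 2))   # reached the apple
nav_bad = navigation_reward(objects, "apple", (3, 1))  # went to the banana instead
qa_ok = qa_reward("Banana", "banana")                  # correct answer
qa_bad = qa_reward("Banana", "apple")                  # wrong answer
```

In a reinforcement learning setup, scalar signals like these would be what the agent tries to maximize over many episodes; the sketch leaves that learning step out.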

Results

In the end, the agent is able to correctly interpret the teacher’s commands and navigate to the right places. More importantly, the agent develops what we call a “zero-shot learning ability.” This means that even for a completely new command that was not previously seen, it is still able to correctly execute the task if enough sentences of a similar form were seen before. In other words, the agent is able to understand a new sentence assembled with known words in a known way (grammar).

For example, a human who has learned how to cut an apple with a knife will likely then know how to cut a dragon fruit with a knife. Applying past knowledge to a new task is very easy for humans but still difficult for current end-to-end learning machines. Although machines may know what a “dragon fruit” looks like, they can’t perform the task “cut the dragon fruit with a knife” unless they have been explicitly trained on a dataset containing this command. By contrast, our agent demonstrated the ability to transfer what it knew about the visual appearance of a dragon fruit, as well as the task of “cut X with a knife,” without being explicitly trained to perform “cut the dragon fruit with a knife.”
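One way to picture what "unseen sentence" means here is a train/test split in which a particular combination of sentence form and object is held out, while every individual word and every sentence form still appears in training. The sketch below is only an illustration under that assumption: the templates reuse phrasings from the example commands, but the object list, the held-out pair, and the splitting procedure are hypothetical and are not taken from the study.

```python
from itertools import product

# Hypothetical command forms and objects for illustration only.
templates = [
    "Please navigate to the {}.",
    "Could you please go to the {}?",
    "Can you move to the {}?",
]
objects = ["apple", "banana", "dragon fruit"]

# Hold out one (form, object) combination; the choice is arbitrary.
held_out = {(templates[0], "dragon fruit")}

train, test = [], []
for template, obj in product(templates, objects):
    sentence = template.format(obj)
    if (template, obj) in held_out:
        test.append(sentence)
    else:
        train.append(sentence)


def vocabulary(sentences):
    """Lower-cased set of words with trailing punctuation removed."""
    return {word.strip(".?").lower() for s in sentences for word in s.split()}


# Words in the held-out sentence that never occur in training.
unseen_words = vocabulary(test) - vocabulary(train)
```

With this split, the held-out command never appears in training, yet `unseen_words` is empty: the sentence is new only as a combination of known words in a known form, which is the situation the zero-shot claim describes.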

In the figures below, our agent successfully executed the commands in the navigation tests.

Our next steps are twofold: one is to teach the agent more abilities through natural language commands in the current 2D environment, and the other is to migrate it to a virtual 3D environment. A virtual 3D environment poses more challenges, but it is closer to the real environment we live in. Our ultimate goal is for a human teacher to train a physical robot in a realistic environment using natural language.

To learn more, please read our paper.