2017-05-09

By Chao Li, Ajay Kannan and Zhenyao Zhu

Speaker recognition algorithms seek to determine the identity of a speaker from audio. Two common recognition tasks are speaker verification (determining whether speakers are who they claim to be) and speaker identification (classifying an unknown voice as one of a set of known speakers).

There are a variety of applications for this technology. For example, a voiceprint can be used to log in to a device. Speaker verification can also serve as an additional layer of security for financial transactions. In addition, shared devices like smart home assistants can leverage this technology to personalize services based on the current user.

Recent papers using neural networks for speaker recognition have improved upon the traditional i-vector approach (see the original paper or slides from an Interspeech tutorial). The i-vector approach assumes that any utterance can be decomposed into one component that depends on speaker and channel variability and another component that is invariant to those factors. I-vector speaker recognition is a multi-step process: estimating a Universal Background Model (usually a Gaussian Mixture Model) from data from multiple speakers, collecting sufficient statistics, extracting the i-vectors, and finally using a classifier for the recognition task.
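To make the "collecting sufficient statistics" step more concrete, here is a minimal sketch. It assumes a diagonal-covariance GMM UBM whose weights, means, and variances have already been trained; UBM training by EM and the subsequent i-vector extraction are not shown. In the standard total-variability formulation, an utterance's GMM mean supervector is modeled as M = m + Tw, where m is the speaker- and channel-independent UBM supervector, T is a low-rank total variability matrix, and w is the i-vector. The zeroth- and first-order statistics computed below are the inputs an extractor uses to estimate w. The function names are hypothetical, and plain Python lists are used for readability rather than speed.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of one frame x under a diagonal-covariance Gaussian."""
    return -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mean, var))


def baum_welch_stats(frames, weights, means, variances):
    """Zeroth- and centered first-order statistics of frames against a GMM UBM.

    frames: list of T feature vectors, each of length D.
    weights, means, variances: parameters of C diagonal Gaussians (hypothetical,
    assumed already trained).
    Returns (n, f): n[c] is the soft frame count assigned to component c, and
    f[c] is the posterior-weighted sum of (frame - means[c]).
    """
    C, D = len(weights), len(means[0])
    n = [0.0] * C
    f = [[0.0] * D for _ in range(C)]
    for x in frames:
        # Log prior plus log likelihood of this frame for every component.
        logp = [math.log(weights[c]) + log_gaussian_diag(x, means[c], variances[c])
                for c in range(C)]
        top = max(logp)  # subtract the max before exponentiating, for stability
        post = [math.exp(lp - top) for lp in logp]
        total = sum(post)
        for c in range(C):
            gamma = post[c] / total  # posterior probability of component c
            n[c] += gamma
            for d in range(D):
                f[c][d] += gamma * (x[d] - means[c][d])
    return n, f
```

Because the posteriors for each frame sum to one, the soft counts in `n` always add up to the number of frames; centering the first-order statistics around the UBM means is the usual convention before estimating w.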

Some papers have replaced pieces of the i-vector pipeline with neural networks, while others have trained end-to-end speaker recognition models for either the text-dependent regime (users must say the same utterance, e.g. a wake word) or the text-independent regime (the model is agnostic to the words in an utterance). We introduce Deep Speaker, an end-to-end neural speaker recognition system that works well for both text-dependent and text-independent scenarios. This means that the same system can be trained to recognize who is speaking either when you say a wake word to activate your home assistant or when you’re speaking in a meeting.

Deep Speaker uses deep neural network layers to extract frame-level features from audio, temporal pooling to aggregate them into an utterance-level embedding, and a triplet loss based on cosine similarity. We explore both ResNet-inspired convolutional models and recurrent models to extract acoustic features.
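Temporal pooling is what lets utterances of different durations map to embeddings of a fixed size. The following is a minimal sketch, assuming the frame-level features have already been produced by the convolutional or recurrent layers (represented here as plain lists). It averages them over time, then L2-normalizes the result so that cosine similarity reduces to a dot product. The helper names are hypothetical, and any layers the full model places between pooling and the loss are omitted.

```python
import math


def temporal_average(frames):
    """Average frame-level feature vectors over time into one utterance vector."""
    T = len(frames)
    return [sum(column) / T for column in zip(*frames)]


def l2_normalize(v, eps=1e-12):
    """Scale v to unit length (eps guards against division by zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / max(norm, eps) for x in v]


def cosine_similarity(a, b):
    """Cosine similarity: the dot product of the two unit-length vectors."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


def utterance_embedding(frames):
    # frames: hypothetical frame-level outputs of the feature extractor;
    # their count varies with utterance length, the embedding size does not.
    return l2_normalize(temporal_average(frames))
```

For example, a two-frame utterance and a three-frame utterance with two-dimensional features both yield two-dimensional embeddings that can be compared directly with `cosine_similarity`.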

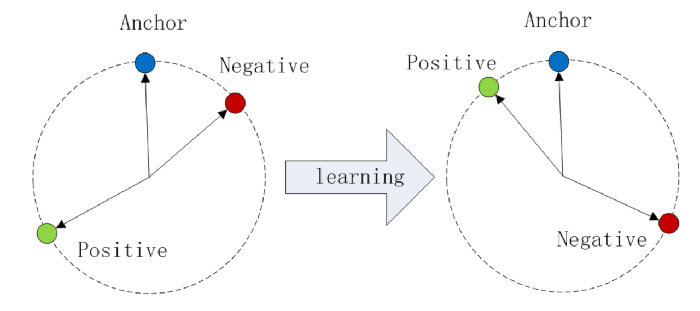

Figure 1: We use triplet loss, which has previously been used for face recognition. During training, we choose an utterance by a speaker and calculate its embedding (labeled “Anchor”). We then compute two more embeddings, one from another utterance by the same speaker (labeled “Positive”) and one from an utterance by a different speaker (labeled “Negative”). The training objective pushes the cosine similarity between the anchor and positive embeddings above the cosine similarity between the anchor and negative embeddings.
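The objective in Figure 1 can be written as a hinge on the two cosine similarities, L = max(0, s_an - s_ap + α), summed over triplets, where s_ap and s_an are the anchor-positive and anchor-negative similarities and α is a margin. The sketch below assumes α = 0.1 purely for illustration and uses hypothetical function names. How triplets are selected during training, which matters a great deal in practice, is not shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between embedding vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def triplet_loss(anchor, positive, negative, margin=0.1):
    """Hinge loss that reaches zero once s_ap exceeds s_an by at least margin.

    margin=0.1 is an illustrative assumption, not a tuned value.
    """
    s_ap = cosine_similarity(anchor, positive)
    s_an = cosine_similarity(anchor, negative)
    return max(0.0, s_an - s_ap + margin)


def batch_triplet_loss(triplets, margin=0.1):
    """Sum of per-triplet losses over (anchor, positive, negative) embeddings."""
    return sum(triplet_loss(a, p, n, margin) for a, p, n in triplets)
```

A triplet that is already well separated (positive identical to the anchor, negative orthogonal to it) contributes zero loss, so gradients come only from triplets that still violate the margin.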

We demonstrate the effectiveness of Deep Speaker on three distinct datasets, covering both text-dependent and text-independent tasks. One of them (UIDs) includes around 250,000 speakers, which, to the best of our knowledge, is the largest in the literature. Experiments suggest that Deep Speaker significantly outperforms a DNN-based i-vector approach. For example, on a text-independent dataset, Deep Speaker achieves an equal error rate (EER) of 1.83% for speaker verification and an accuracy of 92.58% for speaker identification among 100 randomly sampled candidates. This is a 50% relative reduction in EER and a 60% relative improvement in accuracy over the DNN-based i-vector approach.
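EER is the error rate at the decision threshold where the false acceptance rate (impostor trials accepted) equals the false rejection rate (same-speaker trials rejected), so lower is better. The sketch below approximates it by trying each observed score as the threshold and keeping the point where the two rates are closest. It is quadratic in the number of trials and is meant only to illustrate the definition, not to reproduce the evaluation code behind the numbers above; the function name and input format are assumptions.

```python
def equal_error_rate(scores, labels):
    """Approximate EER from verification trial scores.

    scores: similarity per trial (higher means "same speaker").
    labels: 1 for target (same-speaker) trials, 0 for impostor trials.
    Returns the mean of the false acceptance and false rejection rates at the
    candidate threshold where the two are closest.
    """
    n_target = sum(labels)
    n_impostor = len(labels) - n_target
    best_gap, eer = float("inf"), None
    for t in sorted(set(scores)):
        # A trial is accepted when its score is at least the threshold t.
        far = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= t) / n_impostor
        frr = sum(1 for s, y in zip(scores, labels) if y == 1 and s < t) / n_target
        if abs(far - frr) < best_gap:
            best_gap, eer = abs(far - frr), (far + frr) / 2
    return eer
```

With perfectly separated scores the function returns 0.0; for large trial lists, sorting the scores once and accumulating counts gives the same result in O(n log n).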

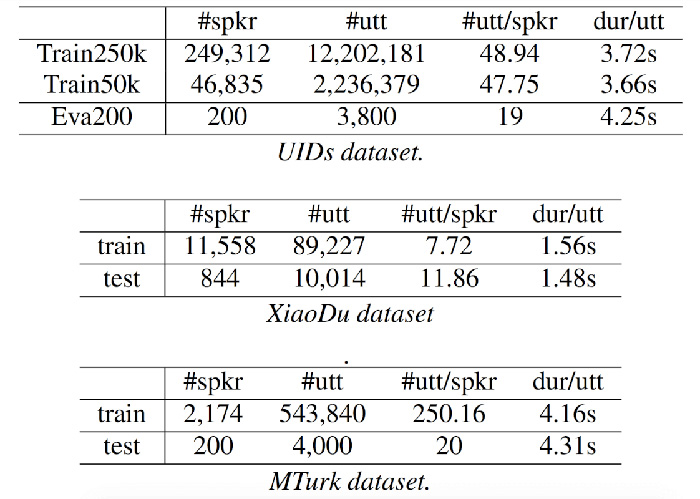

Table 1: The three datasets we use in our experiments are UIDs, XiaoDu, and MTurk. UIDs and XiaoDu are Mandarin datasets, and MTurk is an English dataset. UIDs and MTurk are text-independent, while XiaoDu is text-dependent, based on Baidu’s wake word. To experiment with different training set sizes, we use the full UIDs dataset (Train250k) and a subset of around fifty thousand speakers (Train50k). During evaluation, we choose an anchor and then randomly select one positive sample and 99 negative samples for that anchor from the test partition.
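The identification protocol can be sketched as follows, assuming a hypothetical dictionary that maps each test speaker to a list of utterance embeddings. A trial counts as correct when the positive sample has a strictly higher cosine similarity to the anchor than every negative (treating ties as errors is an assumption), and accuracy is the fraction of correct trials. Whether the 99 negatives must come from 99 distinct speakers is not specified above, so this sketch simply samples negative utterances from all other speakers.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine of the angle between embedding vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(x * x for x in b)))


def sample_trial(embeddings_by_speaker, n_negatives=99, rng=None):
    """Draw (anchor, positive, negatives) from a hypothetical speaker -> embeddings dict."""
    rng = rng if rng is not None else random.Random()
    speakers = list(embeddings_by_speaker)
    # The anchor speaker needs at least two utterances: anchor and positive.
    spk = rng.choice([s for s in speakers if len(embeddings_by_speaker[s]) >= 2])
    anchor, positive = rng.sample(embeddings_by_speaker[spk], 2)
    pool = [e for s in speakers if s != spk for e in embeddings_by_speaker[s]]
    negatives = rng.sample(pool, n_negatives)
    return anchor, positive, negatives


def identification_trial(anchor, positive, negatives):
    """True if the positive outranks every negative by cosine similarity."""
    s_pos = cosine_similarity(anchor, positive)
    return all(s_pos > cosine_similarity(anchor, n) for n in negatives)


def identification_accuracy(trials):
    """Fraction of (anchor, positive, negatives) trials identified correctly."""
    return sum(identification_trial(a, p, ns) for a, p, ns in trials) / len(trials)
```

With 99 negatives per trial, a random guesser would be correct about 1% of the time, which gives a sense of scale for the identification accuracies reported above.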

We also find that Deep Speaker learns language-independent features. When trained solely on Mandarin speech, Deep Speaker achieves an EER of 5.57% for English verification and an accuracy of 88% for English identification. In addition, training the model on Mandarin first and then continuing to train on English improves English recognition accuracy compared with training on English alone. These results suggest that Deep Speaker learns cross-language, speaker-discriminative acoustic features, even when the languages sound very different. They parallel the Deep Speech 2 finding that the same architecture can learn to recognize speech in vastly different languages.

More details about the Deep Speaker model, training techniques, and experimental results can be found in the paper.