2018-02-20

At Baidu Research, we aim to revolutionize human-machine interfaces with the latest artificial intelligence techniques. Our Deep Voice project, started a year ago, focuses on teaching machines to generate more human-like speech from text.

Beyond single-speaker speech synthesis, we demonstrated that a single system could learn to reproduce thousands of speaker identities, with less than half an hour of training data for each speaker. This capability was enabled by learning shared and discriminative information from speakers.

We were motivated to push this idea even further, and attempted to learn speaker characteristics from only a few utterances (i.e., sentences a few seconds long). This problem is commonly known as “voice cloning.” Voice cloning is expected to have significant applications in personalizing human-machine interfaces.

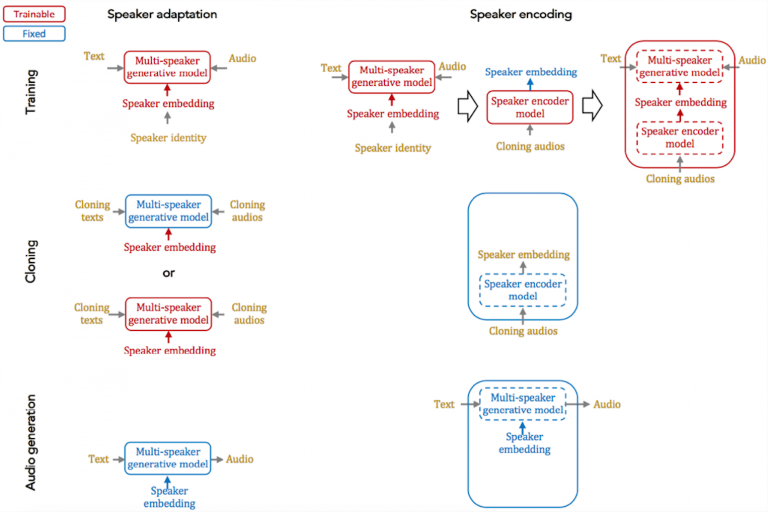

In this study, we focus on two fundamental approaches to voice cloning: speaker adaptation and speaker encoding (please see the figure above for more details). Both techniques can be adapted to a multi-speaker generative speech model with speaker embeddings, without degrading its quality. In terms of naturalness of the speech and similarity to the original speaker, both demonstrate good performance, even with very few cloning audio samples. Please find the cloned audio samples here.

Speaker adaptation is based on fine-tuning a multi-speaker generative model with a few cloning samples, using backpropagation-based optimization. Adaptation can be applied to the whole model, or only to the low-dimensional speaker embeddings. The latter requires far fewer parameters to represent each speaker, although it yields a longer cloning time and lower audio quality.
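To make the embedding-only variant concrete, here is a minimal sketch under loud assumptions: the "generative model" is a hypothetical frozen linear map (`FROZEN_WEIGHTS`), the cloning samples are synthetic feature vectors, and plain gradient descent stands in for backpropagation. None of this is the Deep Voice model; it only illustrates that the model weights stay fixed while the speaker embedding is the sole trainable quantity.

```python
import random

# Hypothetical frozen "generative model": a fixed linear map from a 4-dim
# speaker embedding to 6 acoustic features. This is a toy, not Deep Voice.
FROZEN_WEIGHTS = [
    [1.0, 0.2, 0.0, -0.1],
    [0.0, 1.0, 0.3, 0.0],
    [-0.2, 0.0, 1.0, 0.1],
    [0.1, -0.3, 0.0, 1.0],
    [0.5, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, -0.5],
]

def synthesize(embedding):
    """Map a speaker embedding to features using the frozen weights."""
    return [sum(w * e for w, e in zip(row, embedding)) for row in FROZEN_WEIGHTS]

def loss(embedding, targets):
    """Mean squared error between synthesized features and the cloning samples."""
    pred = synthesize(embedding)
    n = len(targets) * len(pred)
    return sum((p - t) ** 2 for target in targets for p, t in zip(pred, target)) / n

def adapt_embedding(targets, dim=4, steps=500, lr=0.5):
    """Gradient descent on the embedding only; FROZEN_WEIGHTS are never updated."""
    embedding = [0.0] * dim
    n = len(targets) * len(FROZEN_WEIGHTS)
    for _ in range(steps):
        pred = synthesize(embedding)
        grad = [0.0] * dim
        for target in targets:
            for row, p, t in zip(FROZEN_WEIGHTS, pred, target):
                for j in range(dim):
                    # d/d(embedding[j]) of the mean squared error
                    grad[j] += 2.0 * (p - t) * row[j] / n
        embedding = [e - lr * g for e, g in zip(embedding, grad)]
    return embedding

# Three synthetic "cloning samples": features of an unseen speaker plus noise.
rng = random.Random(0)
true_embedding = [0.5, -1.0, 0.25, 2.0]
clean = synthesize(true_embedding)
targets = [[f + rng.gauss(0.0, 0.01) for f in clean] for _ in range(3)]

estimated = adapt_embedding(targets)
print([round(e, 2) for e in estimated])
```

Here each new speaker costs only four numbers, which mirrors the small per-speaker footprint described above. Fine-tuning the whole model would instead update `FROZEN_WEIGHTS` as well.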

Speaker encoding is based on training a separate model to directly infer a new speaker embedding from cloning audio samples; the embedding is then used with a multi-speaker generative model. The speaker encoding model has time-and-frequency-domain processing blocks to retrieve speaker identity information from each audio sample, and attention blocks to combine them in an optimal way. The advantages of speaker encoding include fast cloning time (only a few seconds) and a low number of parameters to represent each speaker, making it favorable for low-resource deployment.
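The combining step can be sketched as attention-weighted averaging of per-sample embeddings. The sketch below is an illustration under assumptions, not the encoder architecture itself: the per-sample embeddings are made-up 2-dim vectors, and `query` is a hypothetical learned vector that scores how informative each sample is.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def combine_with_attention(sample_embeddings, query):
    """Weight each per-sample embedding by softmax(dot(embedding, query)) and sum."""
    scores = [sum(e * q for e, q in zip(emb, query)) for emb in sample_embeddings]
    weights = softmax(scores)
    dim = len(sample_embeddings[0])
    combined = [
        sum(w * emb[k] for w, emb in zip(weights, sample_embeddings))
        for k in range(dim)
    ]
    return combined, weights

# Hypothetical embeddings extracted from three cloning audio samples.
sample_embeddings = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0]]
query = [2.0, 0.0]  # assumed learned parameter, fixed here for illustration

speaker_embedding, attention = combine_with_attention(sample_embeddings, query)
print(speaker_embedding, attention)
```

Because inference is a single forward pass with no per-speaker optimization, this style of cloning is fast, and the result is again one small embedding per speaker.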

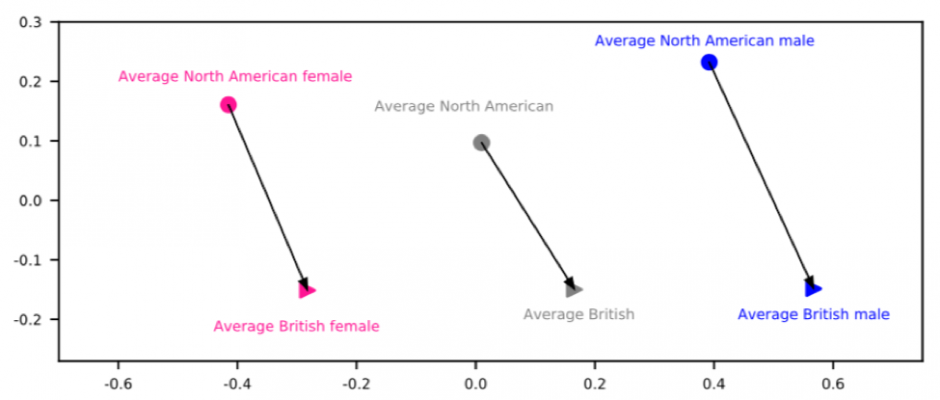

Besides accurately estimating the speaker embeddings, we observe that speaker encoders learn to map different speakers to the embedding space in a meaningful way. For example, speakers of the same gender or with accents from the same region are clustered together. By applying operations in this learned latent space, we can convert the gender or regional accent of a speaker. Our results demonstrate that this approach is highly effective for generating speech for new speakers and for morphing speaker characteristics.
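The post does not spell out which latent-space operations are used. One simple possibility, shown here purely as an assumption, is to shift a speaker's embedding by the difference between the mean embeddings of two groups. All vectors and the `shift_attribute` helper are hypothetical.

```python
import math

def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]

def shift_attribute(embedding, source_group, target_group, strength=1.0):
    """Move an embedding along the direction from one group mean to another."""
    source_mean = mean_vector(source_group)
    target_mean = mean_vector(target_group)
    return [
        e + strength * (t - s)
        for e, s, t in zip(embedding, source_mean, target_mean)
    ]

def distance(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Toy 2-dim embeddings for two clusters (e.g. two genders or two accents).
group_a = [[1.0, 0.0], [1.2, 0.2]]
group_b = [[-1.0, 0.1], [-1.2, -0.1]]
speaker = [0.9, 0.3]  # a speaker that sits in cluster A

morphed = shift_attribute(speaker, group_a, group_b)
print(morphed)
```

The `strength` parameter is an illustrative knob: values between 0 and 1 would move the speaker only part of the way toward the other cluster.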

For more details about voice cloning, please check out our paper.