2018-05-02

Over the past few years, there has been a rapid evolution in deep learning algorithms. Deep learning has played an integral part in improving the accuracy of several applications, including speech recognition, text to speech, image recognition, and machine translation. The deep learning models used in these applications are continuously evolving due to the fast-paced nature of Artificial Intelligence (AI) research. To support the ever-growing computing needs of deep learning, large companies and new startups are building innovative hardware and software systems. Building hardware systems is a time-consuming and expensive process, and the dynamic nature of deep learning research makes it hard for hardware manufacturers to build systems that will meet the needs of applications that use deep learning.

DeepBench

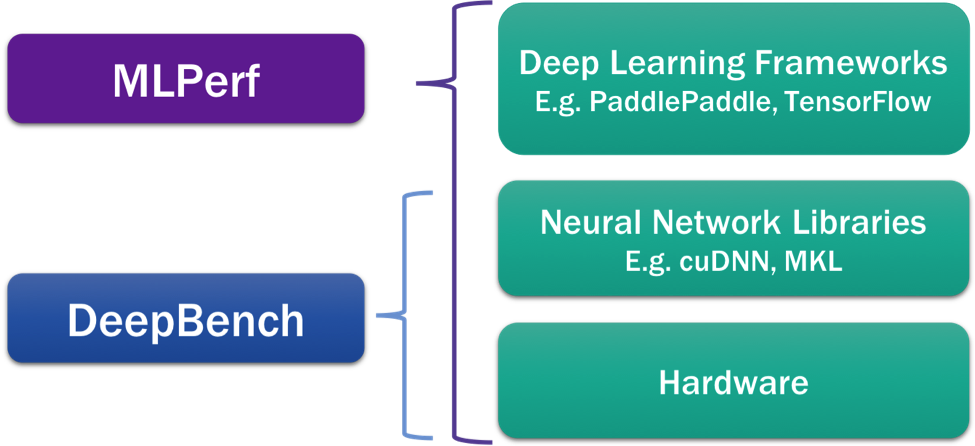

In 2016, we released DeepBench, an open source benchmarking tool that measures the performance of basic operations involved in training and inference of deep neural networks. The DeepBench platform communicates deep learning requirements to hardware manufacturers and makes it easier for them to build hardware suited for deep learning. DeepBench also answers the question, “Which hardware provides the best performance on the basic operations used for training deep neural networks?” It does so by providing performance results on various hardware platforms for basic deep learning operations, which helps determine the best available hardware for deep learning training and inference.
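To make the idea of kernel-level benchmarking concrete, here is a minimal sketch of timing a single basic operation, a dense matrix multiply, at a few problem sizes. It is not DeepBench code: the helper names `naive_gemm` and `benchmark_gemm`, the problem sizes, and the pure-Python multiply are all assumptions chosen for illustration, and a real kernel benchmark would call an optimized vendor library rather than a hand-written loop.

```python
import time

def naive_gemm(a, b):
    """Multiply an M x K matrix by a K x N matrix (both lists of lists)."""
    b_cols = list(zip(*b))  # transpose b once so each column is a tuple
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]

def benchmark_gemm(m, n, k, repeats=3):
    """Time an (m x k) @ (k x n) multiply; return (best_seconds, gflops)."""
    a = [[float(i + j) for j in range(k)] for i in range(m)]
    b = [[float(i - j) for j in range(n)] for i in range(k)]
    naive_gemm(a, b)  # warm-up run, excluded from the timing
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        naive_gemm(a, b)
        best = min(best, time.perf_counter() - start)
    flops = 2 * m * n * k  # one multiply and one add per inner-product term
    return best, flops / best / 1e9

if __name__ == "__main__":
    # Hypothetical problem sizes (M, N, K), chosen only for this example.
    for shape in [(32, 32, 32), (64, 16, 128)]:
        seconds, gflops = benchmark_gemm(*shape)
        print(f"M,N,K={shape}: {seconds * 1e3:.3f} ms, {gflops:.4f} GFLOP/s")
```

Reporting the best of several timed runs after a warm-up is one common way to reduce noise; the absolute numbers from this sketch say nothing about real hardware performance.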

We've received a lot of positive feedback for DeepBench from the hardware industry. DeepBench has been adopted by several different companies including Nvidia, Intel, AMD, and Graphcore. DeepBench has helped improve the rate of progress in hardware systems for deep learning training and inference.

Model-Level Benchmark

DeepBench has set the standard for measuring performance at the kernel level. However, kernel-level benchmarking doesn't provide a complete picture of performance at the application level. AI researchers and engineers want to know the performance of a given hardware system for training or inference of an end-to-end deep learning model. In order to do that, we need a benchmark to measure the performance of the entire deep learning model instead of individual kernels.

In conjunction with a consortium of researchers and engineers, we have released a new framework-level benchmark called MLPerf. MLPerf measures the time required to achieve a given accuracy of a deep learning model trained on a fixed dataset. This approach enables us to benchmark model performance for various hardware systems. The effort is supported by a broad coalition of tech companies and startups, including AMD, Baidu, Google, Intel, SambaNova, and Wave Computing, and by researchers from educational institutions including Harvard, Stanford, University of California Berkeley, University of Minnesota, and University of Toronto.
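The time-to-accuracy metric described above can be sketched as follows. This is an illustration under stated assumptions, not the MLPerf harness: `time_to_accuracy` and `make_toy_workload` are hypothetical names, the workload is a tiny perceptron on a synthetic, seeded dataset, and the 0.95 target is arbitrary.

```python
import random
import time

def time_to_accuracy(train_one_epoch, evaluate, target_accuracy, max_epochs=100):
    """Train until evaluate() reaches target_accuracy or max_epochs is hit.

    Returns (elapsed_seconds, epochs_run, final_accuracy); elapsed_seconds is
    None when the target was not reached.
    """
    start = time.perf_counter()
    accuracy = evaluate()
    epochs = 0
    while accuracy < target_accuracy and epochs < max_epochs:
        train_one_epoch()
        epochs += 1
        accuracy = evaluate()
    elapsed = time.perf_counter() - start
    return (elapsed if accuracy >= target_accuracy else None), epochs, accuracy

def make_toy_workload(num_points=200, seed=0):
    """Hypothetical workload: a perceptron on a fixed, linearly separable set."""
    rng = random.Random(seed)  # fixed seed so the dataset never changes
    data = []
    while len(data) < num_points:
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        if abs(x1 + x2) >= 0.2:  # keep a margin around the true boundary
            data.append((x1, x2, 1 if x1 + x2 > 0 else -1))
    weights = [0.0, 0.0]

    def train_one_epoch():
        for x1, x2, label in data:
            if label * (weights[0] * x1 + weights[1] * x2) <= 0:
                weights[0] += label * x1  # perceptron update on a mistake
                weights[1] += label * x2

    def evaluate():
        correct = sum(
            1 for x1, x2, label in data
            if label * (weights[0] * x1 + weights[1] * x2) > 0
        )
        return correct / len(data)

    return train_one_epoch, evaluate

if __name__ == "__main__":
    train, evaluate = make_toy_workload()
    elapsed, epochs, accuracy = time_to_accuracy(train, evaluate, 0.95)
    print(f"reached {accuracy:.3f} accuracy after {epochs} epoch(s) in {elapsed:.4f} s")
```

Fixing both the dataset and the accuracy target is what makes the elapsed time comparable across systems: only the hardware and software stack doing the training varies.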

MLPerf benchmarks seven applications: speech recognition, image recognition, object detection, sentiment analysis, reinforcement learning–based minigo, recommendation systems, and neural machine translation. Reference implementations for these applications are available in the GitHub repository. Initially, reference implementations exist in four frameworks: Caffe2, PaddlePaddle, PyTorch, and TensorFlow. In collaboration with our partners, we hope that MLPerf will soon have implementations for all applications in every deep learning framework.

DeepBench and MLPerf enable us to cover the entire spectrum of benchmarking for deep learning training and inference. By measuring performance at the kernel level, DeepBench makes it easier for hardware vendors and neural network library developers to optimize performance for the right set of kernels and workloads. It also enables systems and high-performance computing researchers to better understand the performance of a given deep learning model. MLPerf, on the other hand, makes it easy for AI researchers and engineers to determine the best hardware and framework to train a deep learning model for a given application. DeepBench already includes most of the kernels from the applications in MLPerf. In the coming weeks, we will update DeepBench to ensure that all the kernels from MLPerf models are present in DeepBench. Thus, MLPerf will benchmark complete application training, while DeepBench will benchmark the kernels of these applications. We believe that these two benchmarks together will accelerate the rate of improvement in hardware systems and deep learning software.

MLPerf has adopted an "agile" benchmarking philosophy. We hope to engage the broad community of AI researchers and engineers, framework and software developers, and hardware designers and architects, and to iterate quickly after launch. The initial deadline for the new set of implementations and results is July 31. We encourage contributions from the community, whether implementations of applications in a new framework or results on different hardware platforms. We are also happy to receive feedback on the benchmarking philosophy and approach, and we'd love to learn about any applications that we may have missed in the initial release. More details can be found on the MLPerf website.

Authors: Sharan Narang, Greg Diamos, Siddharth Goyal