2021-04-01

We are excited to announce that PaddlePaddle 2.0 is now available. The deep learning platform's latest version features dynamic (computational) graphs, a new API system, distributed training for trillion-parameter models, and better hardware support.

Since its release in 2016, PaddlePaddle, together with Baidu Brain, our open AI platform and core AI technology engine, has attracted more than 2.65 million developers (as of December 31, 2020), giving us the largest AI developer community among Chinese companies.

GitHub: https://github.com/PaddlePaddle

Gitee: https://gitee.com/paddlepaddle

Dynamic graphs

Earlier versions of PaddlePaddle added support for dynamic graphs. Compared with static graphs, dynamic graphs allow more flexible programming and easier debugging. In version 2.0, PaddlePaddle has switched its default programming paradigm from static graphs to dynamic graphs, so developers can inspect the inputs and outputs of tensors at any time and debug their code quickly. Dynamic graphs in version 2.0 also support automatic mixed precision and quantization-aware training.
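The difference between the two paradigms can be illustrated with a toy sketch in plain Python. It does not use PaddlePaddle: `eager_forward` and `DeferredGraph` are hypothetical names invented for this illustration, and the "graph" is only a list of recorded functions. It is meant to show why intermediate values are easy to inspect when operations run immediately, not how PaddlePaddle implements either mode.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def eager_forward(x: Vector) -> float:
    """Define-by-run: every statement executes as soon as it is reached."""
    scaled = [2.0 * v for v in x]
    # The intermediate value already exists here, so it can be printed or
    # inspected in a debugger like any other Python object.
    print("scaled =", scaled)
    return sum(scaled)


class DeferredGraph:
    """Define-and-run: operations are recorded first and executed later."""

    def __init__(self) -> None:
        self.ops: List[Tuple[str, Callable]] = []

    def add(self, name: str, fn: Callable) -> "DeferredGraph":
        self.ops.append((name, fn))  # nothing is computed at this point
        return self

    def run(self, x):
        value = x
        for _name, fn in self.ops:
            value = fn(value)
        return value


# Build the whole graph first, then execute it in one go.
graph = DeferredGraph()
graph.add("scale", lambda xs: [2.0 * v for v in xs]).add("sum", sum)

data = [1.0, 2.0, 3.0]
eager_result = eager_forward(data)
deferred_result = graph.run(data)
```

Both styles compute the same value. The deferred version knows the full sequence of operations before running any of them, which is what leaves room for whole-graph optimization, while the eager version exposes each intermediate result as it is produced.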

PaddlePaddle 2.0 also provides smooth conversion from dynamic graphs to static graphs through unified interfaces. This accelerates model training and deployment, because a graph that has been fully defined can be optimized for training and inference performance. Developers who prefer the static graph programming paradigm can switch to static graph mode by calling paddle.enable_static().

PaddlePaddle's development kits have been upgraded to dynamic graphs to better support industrial AI applications. For example, PaddleSeg, PaddlePaddle's image segmentation dev kit, collects numerous high-precision and lightweight segmentation models and supports both configuration-driven and API-driven development through a modular structure.

Dynamic graphs: https://github.com/PaddlePaddle/models/tree/develop/dygraph

New API upgrade

In version 2.0, we have reorganized the API namespaces to help developers quickly locate the APIs they need. Developers can build their neural networks with stateful class APIs and stateless functional APIs. A total of 217 new APIs have been added to the system, and 195 existing APIs have been optimized.
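To make the stateful versus stateless distinction concrete, here is a minimal sketch in plain Python. It is not PaddlePaddle code: the `linear` function and `Linear` class below are illustrative stand-ins written for this example. In PaddlePaddle itself, the same split appears as layer classes under `paddle.nn` and functions under `paddle.nn.functional`.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Stateless functional form: the caller passes the parameters each time.

    `weight` has shape [in_features][out_features].
    """
    return [
        sum(x_i * w_ij for x_i, w_ij in zip(x, column)) + b_j
        for column, b_j in zip(zip(*weight), bias)
    ]


class Linear:
    """Stateful class form: the layer object owns its parameters."""

    def __init__(self, weight: Matrix, bias: Vector) -> None:
        self.weight = weight
        self.bias = bias

    def __call__(self, x: Vector) -> Vector:
        # Delegate to the functional form, supplying the stored state.
        return linear(x, self.weight, self.bias)


weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 inputs -> 2 outputs
bias = [0.5, -0.5]
x = [1.0, 2.0, 3.0]

layer = Linear(weight, bias)
stateful_out = layer(x)
stateless_out = linear(x, weight, bias)
```

The class form is convenient when parameters should be created once and tracked with the model. The functional form is convenient when the caller already manages the parameters, for example when sharing weights between several computations.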

PaddlePaddle also provides high-level APIs for data augmentation, data pipelines, batch training, and other tasks, tailored for low-code programming. With these high-level APIs, developers can train a model in only 10 lines of code. Most importantly, the high-level and low-level APIs follow a hierarchical design, so they can be mixed freely in the same program, and developers can switch promptly between a simple implementation and detailed customization. The new API system is fully compatible with previous versions, and PaddlePaddle provides upgrade tools to help developers reduce upgrade and migration costs.

PaddlePaddle API Documentation:

https://www.paddlepaddle.org.cn/documentation/docs/en/api/index_en.html

Large-scale model training

The name “Paddle” stands for “PArallel Distributed Deep LEarning”. PaddlePaddle is designed for large-scale deep network training on data sources distributed across hundreds of nodes. PaddlePaddle 2.0 introduces a new hybrid parallel mode, in which developers can combine data-parallel, model-parallel, and pipeline-parallel training. As a result, the parameters of each layer of a network can be distributed across multiple GPUs, making the training of a trillion-parameter model computationally feasible.

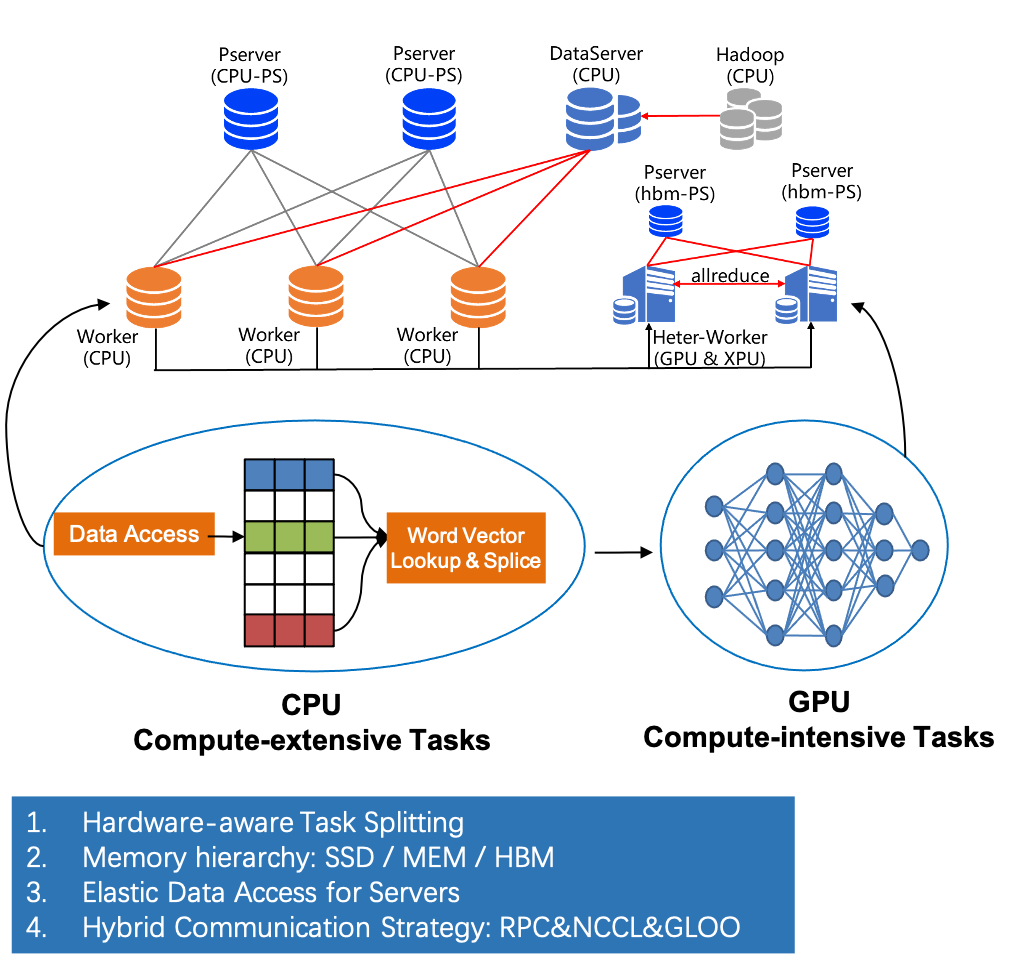
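A rough back-of-the-envelope calculation shows why combining the three forms of parallelism matters at this scale. The sketch below is plain Python arithmetic, not PaddlePaddle code. The `HybridPlan` class, the parallel degrees (4, 8, 16), and the choice of 2 bytes per parameter (FP16) are all assumptions made for illustration. It also assumes parameters are split evenly and counts only the weights, ignoring gradients, optimizer state, and activations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridPlan:
    """Hypothetical description of a hybrid parallel layout."""

    data_parallel: int      # number of full model replicas
    model_parallel: int     # ways each layer's parameters are split
    pipeline_parallel: int  # number of consecutive layer groups (stages)

    @property
    def total_gpus(self) -> int:
        return self.data_parallel * self.model_parallel * self.pipeline_parallel

    @property
    def shards_per_replica(self) -> int:
        # One replica of the model is spread over this many GPUs.
        return self.model_parallel * self.pipeline_parallel

    def params_per_gpu(self, total_params: int) -> int:
        # Ceiling division, assuming parameters are split evenly.
        return -(-total_params // self.shards_per_replica)


TOTAL_PARAMS = 10**12   # one trillion parameters
BYTES_PER_PARAM = 2     # assumption: FP16 weights

plan = HybridPlan(data_parallel=4, model_parallel=8, pipeline_parallel=16)

params_each = plan.params_per_gpu(TOTAL_PARAMS)
gigabytes_each = params_each * BYTES_PER_PARAM / 1e9
gigabytes_unsharded = TOTAL_PARAMS * BYTES_PER_PARAM / 1e9

print(f"GPUs used:              {plan.total_gpus}")
print(f"weights per GPU:        {gigabytes_each:.3f} GB")
print(f"weights without shards: {gigabytes_unsharded:.0f} GB")
```

Under these assumed numbers, the weights alone would need about 2,000 GB on a single device, but about 15.6 GB per GPU once each replica is spread over 128 GPUs. Data parallelism alone does not reduce this figure, because every replica still holds the full model.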

General-purpose heterogeneous parameter server

PaddlePaddle 2.0 introduces the industry's first general-purpose heterogeneous parameter server technology, which efficiently supports different hardware types (CPUs, GPUs, and AI chips) in a single training job. This addresses the poor utilization of computing resources caused by heavy I/O when training the large-scale sparse-feature models used in search and recommendation applications. Developers can deploy distributed training tasks on heterogeneous hardware clusters and make efficient use of different kinds of chips.

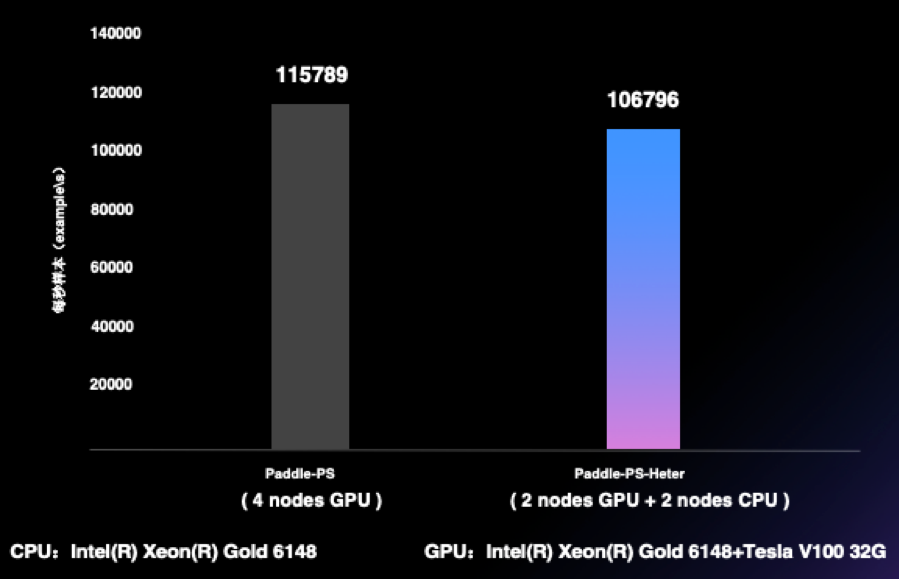

The general-purpose heterogeneous parameter server architecture is compatible with traditional parameter servers composed of CPUs, parameter servers consisting of GPUs or other AI accelerators, and heterogeneous parameter servers that mix CPUs with GPUs or AI accelerators. It has also proven to be cost-effective. As shown in the figure below, two CPUs and two GPUs can achieve a training speed similar to that of four GPUs while saving at least 35% of the cost.

New hardware support

Twenty-nine categories of processors from semiconductor manufacturers such as Intel, NVIDIA, and ARM are already compatible with PaddlePaddle 2.0 or are in the process of becoming compatible.