2021-09-27

It has always been a great challenge for AI bots to conduct coherent, informative, and engaging conversations like human beings. For robots to serve as emotional companions or intelligent assistants, high-quality open-domain dialogue systems are essential. As pre-training technology further improves models’ ability to learn from large-scale unannotated data, mainstream research is focusing on using massive data more efficiently and more fully to improve open-domain dialogue systems. To this end, Baidu has released PLATO-XL, with up to 11 billion parameters, achieving new breakthroughs in Chinese and English conversation.

· PLATO-XL: Exploring the Large-scale Pre-training of Dialogue Generation

· https://arxiv.org/abs/2109.09519

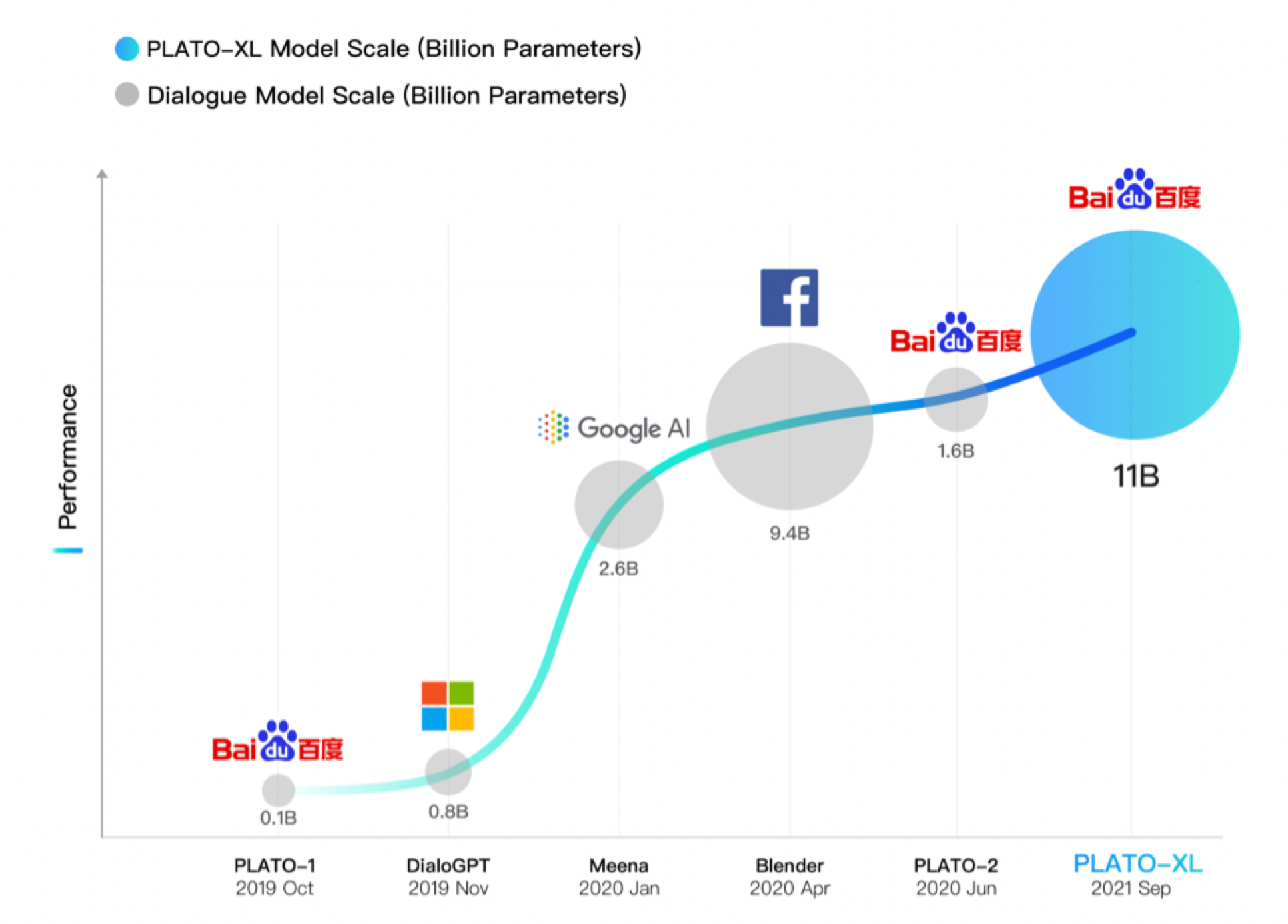

In recent years, we have witnessed constant progress in open-domain conversation, from Google’s Meena and Facebook’s Blender to Baidu’s PLATO. At DSTC-9, the leading Dialog System Technology Challenge, Baidu’s PLATO-2 set a record by winning first place in five different dialogue tasks.

Now Baidu has upgraded PLATO-2 to PLATO-XL. With over ten billion parameters, it is the world’s largest Chinese and English dialogue generation model. Its superior performance in open-domain conversation raises expectations of what hundred-billion- or even trillion-parameter dialogue models could do.

Introduction to PLATO-XL

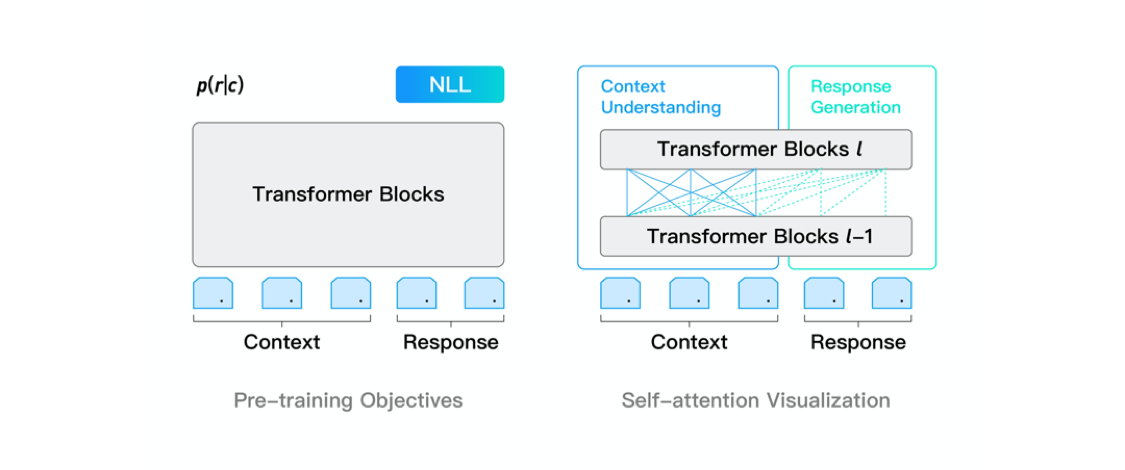

PLATO-XL adopts the unified transformer architecture, which models dialogue understanding and response generation simultaneously and is therefore more parameter-efficient. A flexible self-attention mask enables bidirectional encoding of the dialogue history and unidirectional decoding of the response. The unified transformer architecture also proves efficient for training dialogue generation models. Because conversation samples vary in length, padding causes a large amount of wasted computation during training; the unified transformer can greatly improve training efficiency by effectively sorting the input samples.
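To make these two mechanisms more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not PLATO-XL's code: `unified_attention_mask` and `padded_token_count` are hypothetical helpers, the mask assumes a single sequence laid out as context tokens followed by response tokens (1 = may attend), and the sorting strategy assumed here is simply ordering samples by length before cutting them into fixed-size batches.

```python
from typing import List


def unified_attention_mask(context_len: int, response_len: int) -> List[List[int]]:
    """Hypothetical helper: self-attention mask for one [context; response] sequence.

    mask[i][j] == 1 means position i may attend to position j.
    Context positions attend bidirectionally within the context only;
    response positions attend to the full context plus response tokens
    up to and including themselves (left-to-right decoding).
    """
    total = context_len + response_len
    mask = [[0] * total for _ in range(total)]
    for i in range(total):
        for j in range(total):
            if j < context_len:
                # Every position can see the whole dialogue context.
                mask[i][j] = 1
            elif i >= context_len and j <= i:
                # Response positions see earlier response tokens and themselves.
                mask[i][j] = 1
    return mask


def padded_token_count(lengths: List[int], batch_size: int) -> int:
    """Hypothetical helper: token positions processed when each consecutive
    batch is padded to the length of its longest sample."""
    total = 0
    for start in range(0, len(lengths), batch_size):
        batch = lengths[start:start + batch_size]
        total += max(batch) * len(batch)
    return total


if __name__ == "__main__":
    for row in unified_attention_mask(context_len=2, response_len=2):
        print(row)
    lengths = [5, 50, 6, 48, 7, 52]
    print("unsorted:", padded_token_count(lengths, batch_size=3))
    print("sorted:  ", padded_token_count(sorted(lengths), batch_size=3))
```

For lengths `[5, 50, 6, 48, 7, 52]` and a batch size of 3, the original order processes 306 token positions while the length-sorted order processes 177, against 168 real tokens. Sorting places samples of similar length in the same batch, so far less of each batch is padding.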

To alleviate the inconsistency problem in multi-turn conversations, PLATO-XL carries out multi-party aware pre-training. Most of the pre-training data is collected from social media, where multiple users take part in exchanging ideas. Models trained on such data tend to mix up information from different participants in the context and therefore have difficulty generating consistent responses. Multi-party aware pre-training helps the model distinguish the information in the context and maintain consistency in dialogue generation.
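One common way to let a model tell participants apart is to tag every token with a role ID, which is typically embedded and added to the token embeddings. The sketch below is an illustration, not PLATO-XL's implementation: `role_ids_for_response` is a hypothetical helper, and the two-role scheme (the speaker whose reply is being generated vs. everyone else) is just one simple assumed choice.

```python
from typing import List, Tuple


def role_ids_for_response(
    turns: List[Tuple[str, List[str]]], responder: str, response_len: int
) -> List[int]:
    """Hypothetical helper: assign a role ID to every context and response token.

    Assumed scheme: role 0 marks tokens written by the speaker whose reply
    is being generated; role 1 marks tokens from any other participant.
    In a model, these IDs would index a learned role-embedding table.
    """
    ids: List[int] = []
    for speaker, tokens in turns:
        role = 0 if speaker == responder else 1
        ids.extend([role] * len(tokens))
    # The response itself is always produced by the responder.
    ids.extend([0] * response_len)
    return ids


if __name__ == "__main__":
    context = [
        ("A", ["hi", "there"]),
        ("B", ["hello"]),
        ("C", ["hey", "all", "!"]),
    ]
    print(role_ids_for_response(context, responder="B", response_len=2))
```

For this three-party exchange, generating B's two-token reply yields `[1, 1, 0, 1, 1, 1, 0, 0]`: B's earlier turn shares the response's role, so the model has a signal for keeping B's statements consistent while treating A's and C's turns as other people's views.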

The 11-billion-parameter PLATO-XL includes two dialogue models, one for Chinese and one for English. Pre-training uses 100 billion tokens of data. PLATO-XL is implemented on PaddlePaddle, the deep learning platform developed by Baidu. To train a model of this size, PLATO-XL uses gradient checkpointing and sharded data parallelism provided by FleetX, PaddlePaddle's distributed training library. Training runs on a high-performance GPU cluster of 256 NVIDIA Tesla V100 32GB GPUs.
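Some rough arithmetic shows why sharding matters at this scale. The sketch below assumes mixed-precision Adam training, a common accounting in which each parameter costs about 16 bytes of model state (fp16 weights and gradients plus fp32 master weights, momentum, and variance), split evenly across GPUs. PLATO-XL's exact precision and sharding configuration are not stated here, and `model_state_gb_per_gpu` is a hypothetical helper, not a FleetX API.

```python
# Assumed mixed-precision Adam accounting, in bytes per parameter:
# fp16 weights (2) + fp16 gradients (2)
# + fp32 master weights (4) + fp32 momentum (4) + fp32 variance (4).
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4  # = 16


def model_state_gb_per_gpu(num_params: int, shard_degree: int,
                           bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Hypothetical helper: decimal GB of parameter, gradient, and optimizer
    state held by each GPU when that state is split evenly across
    `shard_degree` GPUs. Activations are not counted."""
    return num_params * bytes_per_param / shard_degree / 1e9


if __name__ == "__main__":
    params = 11_000_000_000
    for degree in (1, 8, 64, 256):
        gb = model_state_gb_per_gpu(params, degree)
        verdict = "fits" if gb < 32 else "does not fit"
        print(f"shard degree {degree:>3}: {gb:8.2f} GB per GPU "
              f"(model state {verdict} in 32 GB)")
```

Under these assumptions, an unsharded 11B-parameter model needs about 176 GB of model state, far beyond a single 32 GB V100, while sharding across 256 GPUs brings it below 1 GB per card. Activations are excluded from this estimate; that is the memory gradient checkpointing reduces, by storing only some intermediate outputs and recomputing the rest during the backward pass.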

Superior performance on various conversational tasks

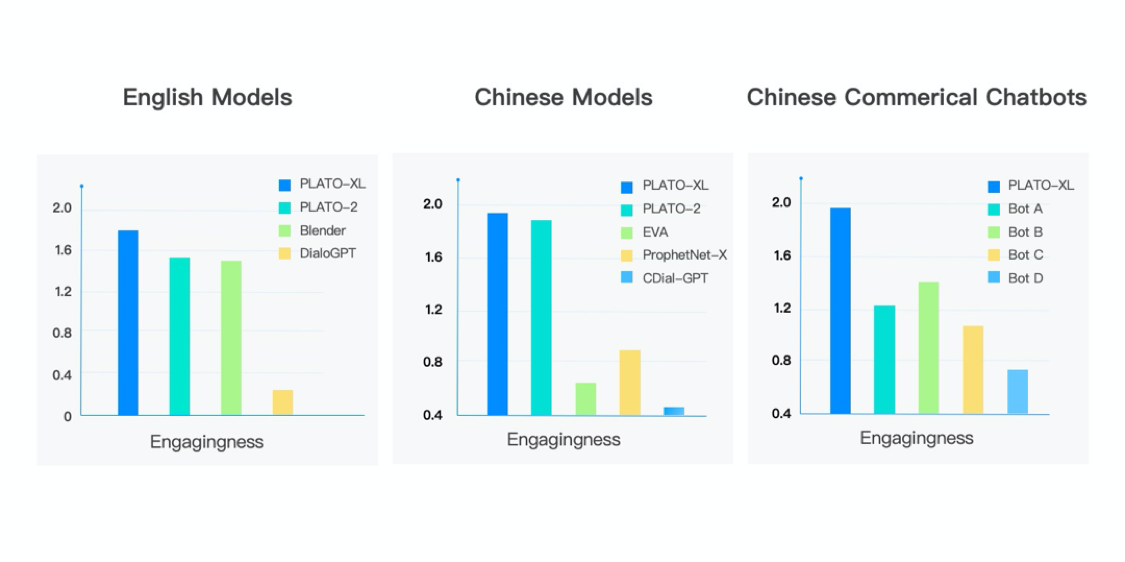

For a comprehensive evaluation, PLATO-XL is compared with other open-source Chinese and English dialogue models. As shown in the following figure, PLATO-XL outperforms Blender, DialoGPT, EVA, PLATO-2, and others. PLATO-XL also performs significantly better than current mainstream commercial chatbots.

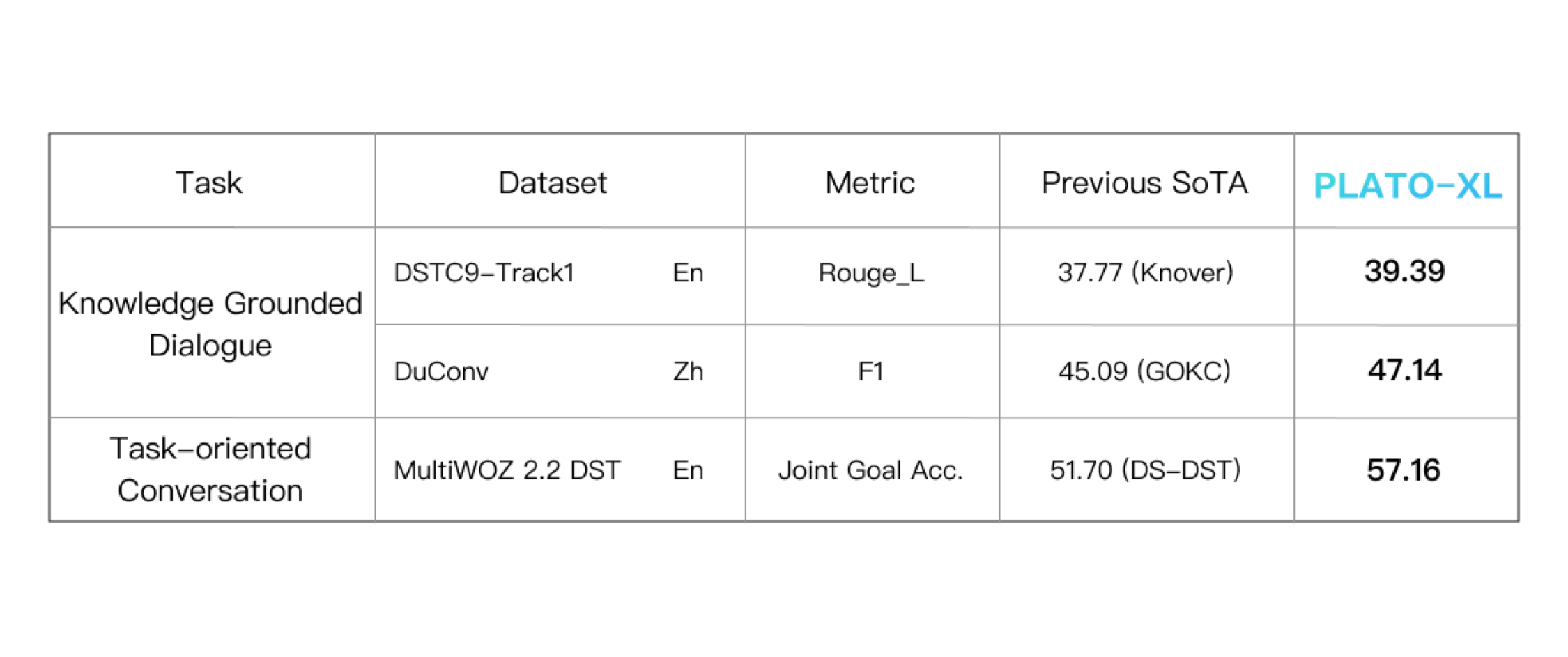

Beyond open-domain conversation, PLATO-XL also achieves superior performance on knowledge-grounded dialogue and task-oriented conversation.

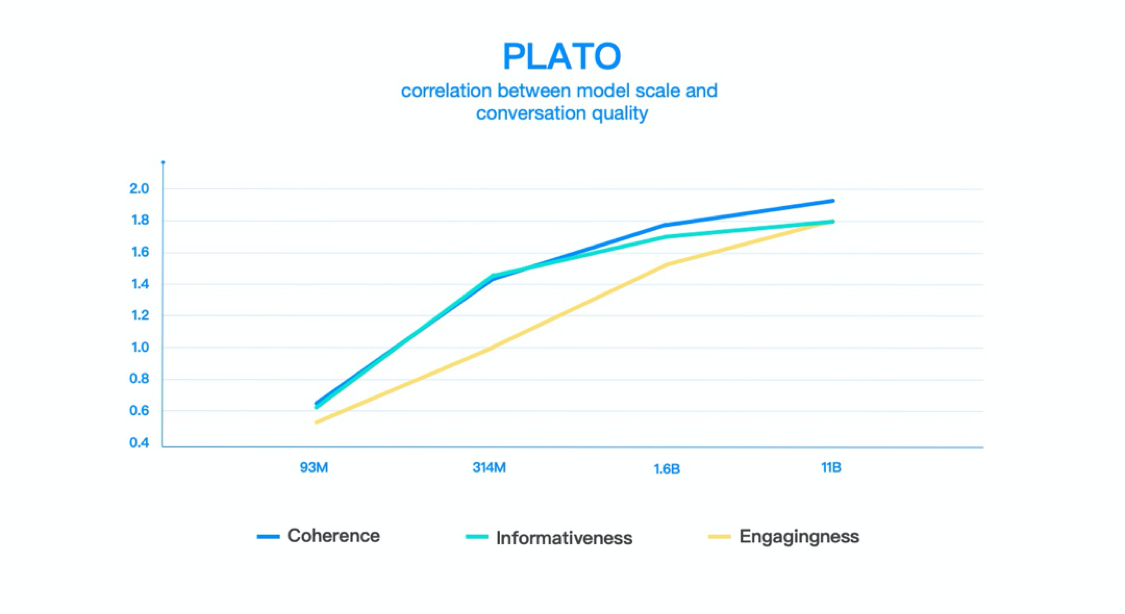

The PLATO series covers dialogue models of different sizes, from 93M to 11B parameters. The figure below shows a relatively stable positive correlation between model size and performance, suggesting that increasing model size contributes significantly to performance improvements.

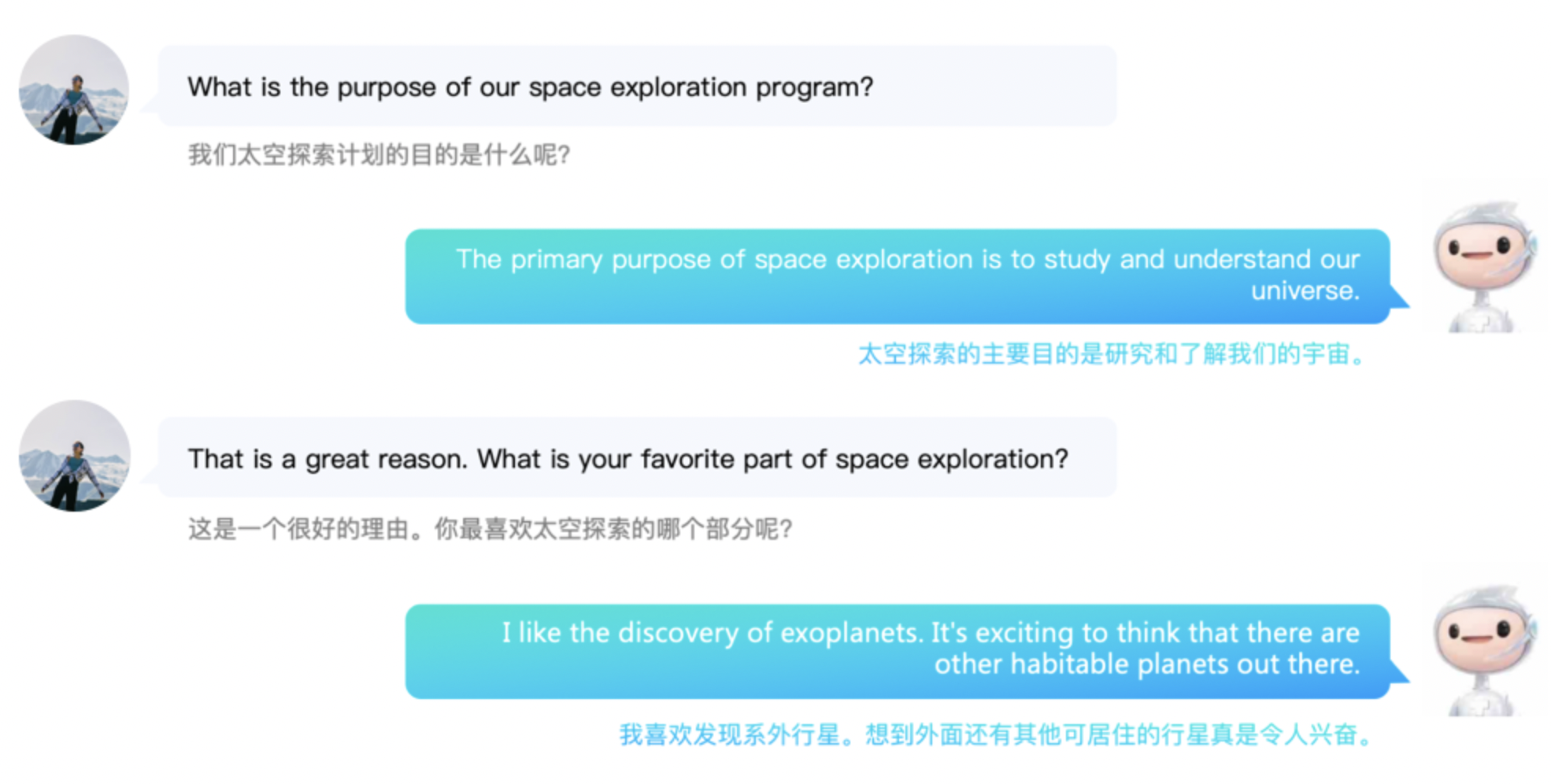

PLATO-XL is able to have logical, informative and interesting multi-turn conversations with users in English and Chinese.

Baidu’s PLATO-XL opens new horizons in open-domain conversation, one of the most challenging tasks in natural language processing. As the largest pre-trained model for Chinese and English dialogue, PLATO-XL reaches new levels of conversational consistency and factuality, bringing us one step closer to human-like learning and chatting abilities.

Nevertheless, dialogue generation models still have limitations, such as unfair biases, misleading information, and an inability to learn continuously. PLATO will continue working to improve conversation quality in terms of fairness and factuality.

In addition, we will soon release our source code, together with the English model, on GitHub, in the hope of facilitating frontier research in dialogue generation. If you are interested in conversational AI, stay tuned.