2018-05-15

Building intelligent agents that can communicate naturally with humans and learn effectively from them through language is incredibly valuable to the advancement of AI. At Baidu Research, we aim to develop AI agents that can learn from humans through natural interactions.

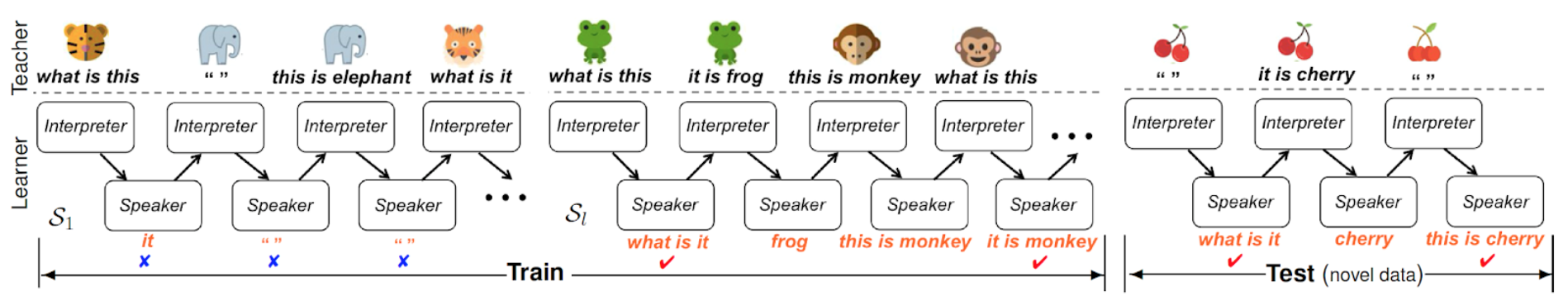

Our previous blog post shared our approach to teaching an AI agent to speak by interacting with a virtual teacher. In this most recent work, we highlight conversational interaction between the teacher and the AI agent. This interaction serves as a natural interface both for language learning and for novel knowledge acquisition. Our proposed joint imitation and reinforcement approach allows for grounded language learning and fast concept learning through an interactive conversational game.

The agent trained with this approach is able to actively acquire information by asking questions about novel objects and to use the just-learned knowledge in subsequent conversations in a one-shot fashion. Active refers to the fact that the agent can ask a teacher about unknown objects, in contrast with most existing paradigms, which learn passively from pre-collected labeled data. One-shot means that, once deployed, the agent receives no further training, yet it can learn to recognize a never-before-seen object after being taught only once by the teacher, and it can then answer the teacher's questions about that object correctly in the future.

To teach the agent, we constructed a teacher that converses with the AI agent in a virtual environment. The design of the teacher and the environment is inspired by how humans teach infants about language and objects. At the beginning of each teaching session, the teacher randomly selects an object and interacts with the learner about it by randomly 1) posing a question (e.g., “what is this”), 2) remaining silent, or 3) making a statement (e.g., “this is monkey”). The teacher then behaves according to the learner's response, either answering a question from the agent or moving on to another random object. The teacher also provides an encouragement or discouragement reward signal according to the appropriateness of the agent's response, for example encouraging the agent to ask questions about novel objects and to answer questions correctly after being taught only once.
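The teaching session described above can be sketched as a small toy program. This is only an illustration under stated assumptions, not the teacher used in our experiments: the class name `Teacher`, the object list, the exact utterance strings, and the reward values of +1, -1, and 0 are all hypothetical choices made for the example.

```python
import random


class Teacher:
    """Toy teacher (hypothetical): opens with a question, silence, or a statement."""

    QUESTION = "what is this"

    def __init__(self, objects, rng=None):
        self.objects = list(objects)
        self.rng = rng or random.Random()
        self.obj = None
        self.mode = None
        self.taught = False

    def start_session(self):
        """Pick a random object and a random opening move; return the opening utterance."""
        self.obj = self.rng.choice(self.objects)
        self.mode = self.rng.choice(["question", "silence", "statement"])
        # The learner has been told the name only if the teacher opened with a statement.
        self.taught = self.mode == "statement"
        if self.mode == "question":
            return self.QUESTION
        if self.mode == "statement":
            return f"this is {self.obj}"
        return ""  # silence

    def respond(self, learner_utterance):
        """Return (teacher_reply, reward) for one learner utterance.

        Reward values are assumptions made for this sketch.
        """
        answer = f"this is {self.obj}"
        if learner_utterance == self.QUESTION:
            # Encourage asking about an object not yet taught; discourage redundant questions.
            reward = -1.0 if self.taught else 1.0
            self.taught = True
            return answer, reward
        if learner_utterance.startswith("this is "):
            # Encourage correct statements, discourage incorrect ones.
            reward = 1.0 if learner_utterance == answer else -1.0
            return "", reward
        return "", 0.0  # anything else is neither encouraged nor discouraged


if __name__ == "__main__":
    teacher = Teacher(["monkey", "cat", "dog"], rng=random.Random(0))
    print("teacher:", repr(teacher.start_session()))
    print("reply, reward:", teacher.respond("what is this"))
```

Moving on to another random object corresponds to calling `start_session()` again. Passing a seeded `random.Random` makes a run reproducible, which is convenient when debugging a learner against the teacher.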

The AI agent starts from a blank slate (tabula rasa), just like a newborn. It has to learn to crack the code of language and make sense of the raw visual and language signals. It assesses its own knowledge state and memorizes useful information purely by interacting with the teacher: listening, babbling, mimicking, and being reinforced by encouragement from the teacher. After this initial training, the agent receives no further training, and it can successfully transfer the language and one-shot learning abilities it has developed to novel test scenarios.

For example, after training on a set of animals, when confronted with an image of a cherry, a novel class that the agent has never seen before, the agent can ask a question about it (“what is it”). After being taught only once, it can then generate a correct statement (“this is cherry”) for another instance of a cherry.
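The cherry example can be mimicked with a hand-coded stand-in that keeps an external memory of (feature, name) pairs. This is a rough sketch under loud assumptions, not our model: the real agent is a trained neural network that works on raw images and words, whereas the `OneShotLearner` class, the three-dimensional feature vectors, the cosine-similarity lookup, and the 0.9 threshold below are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class OneShotLearner:
    """Toy learner (hypothetical): asks about unfamiliar objects, names familiar ones."""

    def __init__(self, threshold=0.9):
        self.memory = []  # list of (feature_vector, name) pairs
        self.threshold = threshold  # assumed similarity cutoff for "I know this"

    def recall(self, feature):
        """Return the best-matching stored name, or None if nothing is similar enough."""
        best_name, best_sim = None, self.threshold
        for stored, name in self.memory:
            sim = cosine(feature, stored)
            if sim >= best_sim:
                best_name, best_sim = name, sim
        return best_name

    def speak(self, feature):
        """Ask a question when the object looks novel, otherwise state its name."""
        name = self.recall(feature)
        return f"this is {name}" if name else "what is it"

    def listen(self, feature, teacher_utterance):
        """Store the name from a teacher statement such as 'this is cherry'."""
        prefix = "this is "
        if teacher_utterance.startswith(prefix):
            self.memory.append((feature, teacher_utterance[len(prefix):]))


if __name__ == "__main__":
    learner = OneShotLearner()
    cherry_a = [0.9, 0.1, 0.0]  # made-up features for two cherry images
    cherry_b = [0.8, 0.2, 0.0]
    print(learner.speak(cherry_a))  # nothing in memory yet, so the learner asks
    learner.listen(cherry_a, "this is cherry")
    print(learner.speak(cherry_b))  # a similar instance is now named
```

Writing to memory after a single teacher statement, with no parameter updates, is what makes the behavior one-shot in this toy version. In the trained agent, when to ask and what to store are learned rather than hard-coded.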

Our next step is to further increase the complexity and diversity of the language learning tasks and to investigate how well the proposed approach applies and generalizes to other relevant tasks. Our teaching environment is implemented using our open-source XWorld simulation environment, and the model is trained using the PaddlePaddle deep learning platform. For more details, please read our ACL’18 paper.