2017-06-07

In early April, our team at Baidu Research successfully taught an AI agent to navigate a virtual maze using natural language commands issued by a virtual teacher. Today, we are excited to announce that our AI agent has successfully learned to speak by interacting with a virtual teacher.

Speaking, along with other abilities of human beings, is a critical component in our mission to create general intelligence for machines. Although it’s not uncommon to have simple conversations with a machine today, our approach to teaching a machine to speak differs greatly from the conventional method.

Our AI agent learns to speak interactively, much as a baby does. In contrast, the conventional approach relies on supervised training on a large, pre-collected training set, which is static and makes it hard to capture the interactive nature of language learning. As a result, a system trained in the conventional way mainly reflects the behavior in the dataset, with limited adaptiveness and generalization capability. Our AI agent learns to speak by engaging in conversation interactively, aiming to gain the ability to learn and understand language rather than purely capturing the statistical patterns in the data.

When learning to speak, a baby interacts with people and learns through mimicry and feedback. A baby initially performs verbal actions by mimicking a conversational partner or teacher, and in doing so masters the skill of generating an utterance (e.g., a word or sentence). A baby also makes verbal utterances to a parent and adjusts this verbal behavior according to the parent’s correction or encouragement.

Study Overview

We present an interactive approach to grounded natural language learning, in which an agent learns natural language by interacting with a teacher and learning from feedback, improving its language skills while taking part in the conversation. Unlike the supervised learning setting, there is no direct supervision in the form of labels to guide the learner’s behavior. Instead, the learner has to speak in order to learn to speak, and the teacher provides natural verbal (e.g., yes/no) and non-verbal (e.g., nodding/smiling) feedback.
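To make the speak-then-receive-feedback loop concrete, here is a minimal toy sketch. It is not the model from our paper: the `ToyTeacher` and `ToyLearner` classes, the three-word vocabulary, the mapping of "yes"/"no" to a reward of +1/-1, and the simple score-table update are all assumptions chosen for illustration. The only point it shows is that the learner never sees a label; it has to utter a word and then adjust based on the teacher's reaction.

```python
import random

# Hypothetical toy vocabulary; the real environment is far richer.
OBJECTS = ["apple", "avocado", "orange"]


class ToyTeacher:
    """Shows an object and reacts to what the learner says."""

    def __init__(self, rng):
        self.rng = rng

    def show(self):
        return self.rng.choice(OBJECTS)

    def feedback(self, shown, utterance):
        # Assumption: a verbal "yes"/"no" is mapped to a scalar reward.
        return 1.0 if utterance == shown else -1.0


class ToyLearner:
    """Keeps a score for every (object, word) pair and learns from feedback only."""

    def __init__(self, rng, epsilon=0.1, lr=0.5):
        self.rng = rng
        self.epsilon = epsilon  # probability of trying a random word
        self.lr = lr            # step size of the score update
        self.scores = {o: {w: 0.0 for w in OBJECTS} for o in OBJECTS}

    def speak(self, shown, explore=True):
        if explore and self.rng.random() < self.epsilon:
            return self.rng.choice(OBJECTS)
        row = self.scores[shown]
        return max(row, key=row.get)

    def learn(self, shown, utterance, reward):
        # Move the score of the uttered word toward the received reward.
        row = self.scores[shown]
        row[utterance] += self.lr * (reward - row[utterance])


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    teacher, learner = ToyTeacher(rng), ToyLearner(rng)
    for _ in range(episodes):
        shown = teacher.show()
        utterance = learner.speak(shown)  # the learner must speak first
        reward = teacher.feedback(shown, utterance)
        learner.learn(shown, utterance, reward)
    return learner
```

After calling `train()`, `learner.speak(obj, explore=False)` gives the word the toy learner has settled on for each object. The same loop structure carries over when the utterance is a full sentence and the feedback is richer, although the learning rule would then need to be considerably more capable than a score table.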

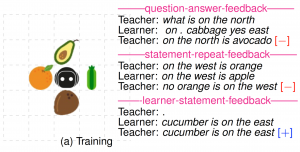

The figure shows example dialogues of several different forms during training. Initially, the agent produces meaningless sentences, but it improves its conversational skills along the way purely by interacting. In the end, the agent can answer the teacher’s questions correctly in natural language.

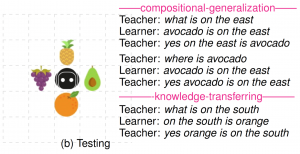

Another aspect that further demonstrates the language learning ability of our agent is its ability to generalize. The agent can answer questions involving novel combinations of known concepts, as well as questions about concepts that the teacher has described but never asked about. For example, during training, the combination {avocado, east} never appears in question-answering, and the object orange is only described by the teacher but never asked about. During testing, the agent is able to answer questions about “avocado” when it is on the “east”, as well as questions about “orange”, as shown in the figure.
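The held-out setup described above can be sketched as a simple split over (object, direction) question pairs. This is only an illustration under stated assumptions: "avocado", "east", and "orange" come from the example in the text, while "apple", the other directions, and the `split_questions` helper are hypothetical names introduced here.

```python
from itertools import product

# "avocado", "orange", and "east" come from the example in the text;
# "apple" and the remaining directions are assumptions for illustration.
OBJECTS = ["apple", "avocado", "orange"]
DIRECTIONS = ["east", "west", "north", "south"]

# Combinations never used in question-answering during training.
HELD_OUT_PAIRS = {("avocado", "east")}
# Objects the teacher describes during training but never asks about.
DESCRIBED_ONLY = {"orange"}


def split_questions():
    """Return (train, test) lists of (object, direction) question pairs."""
    train, test = [], []
    for obj, direction in product(OBJECTS, DIRECTIONS):
        held_out = (obj, direction) in HELD_OUT_PAIRS or obj in DESCRIBED_ONLY
        (test if held_out else train).append((obj, direction))
    return train, test
```

Questions in `test` are exactly those the agent never practiced answering, so getting them right indicates generalization rather than memorization of question-answer pairs seen in training.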

One future goal of this work is to increase the complexity of the language learning environment to foster more complex language behaviors. Another goal is to explore knowledge modeling and fast learning to enable the agent to interact with humans and learn from the physical world effectively.

For more details, please read our paper.