2017-08-21

By Xiao Liu and Shilei Wen

This blog discusses a novel approach to video recognition and classification that won Baidu first place at the ActivityNet Challenge this year.

Artificial intelligence technologies are no longer limited to recognizing individual still images; they can now also identify activities in videos. An automatic system for activity understanding is especially pertinent today, as devices from dash cams to cell phone cameras create far more footage than humans alone can analyze.

Such a system creates opportunities for new applications while enhancing existing ones. For example, broadcasters will be able to find high-quality material in large, unlabeled video archives, publishers will be able to present personalized videos to their viewers, and software will be able to screen security-camera footage more accurately.

The ActivityNet Challenge was created to encourage progress in video understanding. In this challenge task, algorithms must correctly recognize the activity in 10-second video clips, each containing a single human-focused activity (e.g., playing an instrument or shaking hands). Participants used the Kinetics dataset, a new large-scale benchmark for trimmed action classification with more than 200,000 training videos across 400 action classes.

Understanding what's in a video is more challenging than recognizing a still image. A video's content is determined by all of its frames, so information from multiple frames must be aggregated to yield a robust and discriminative video representation.
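
The simplest way to aggregate multi-frame information is to average per-frame feature vectors into one video-level vector. The sketch below assumes frame features have already been extracted into a `(T, D)` array; `mean_pool` is a hypothetical helper shown only as a baseline, not one of the temporal models described in this post. Because averaging ignores frame order and treats every frame as equally informative, it illustrates why more expressive temporal models are worth building.

```python
import numpy as np

def mean_pool(frame_features: np.ndarray) -> np.ndarray:
    """Aggregate per-frame features of shape (T, D) into one D-dim video vector."""
    if frame_features.ndim != 2 or frame_features.shape[0] == 0:
        raise ValueError("expected a non-empty (T, D) array")
    # Average over the time axis: every frame contributes equally.
    return frame_features.mean(axis=0)

# Hypothetical example: 3 frames, 4-dim features each.
frames = np.array([[1.0, 0.0, 2.0, 0.0],
                   [3.0, 0.0, 2.0, 1.0],
                   [2.0, 3.0, 2.0, 2.0]])
video_vec = mean_pool(frames)
print(video_vec)  # [2. 1. 2. 1.]
```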

To address this issue, we propose a deep temporal modeling framework that consists of four temporal modeling approaches:

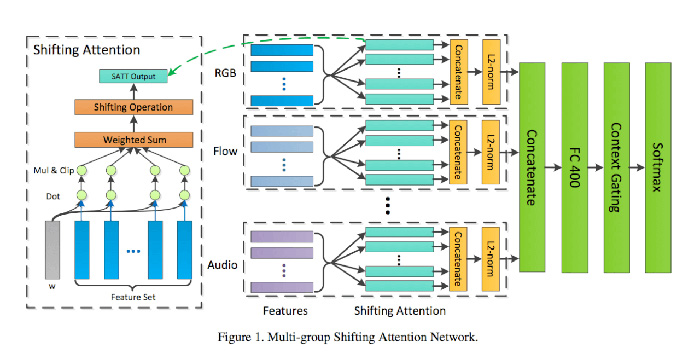

- Multi-group shifting attention network
- Temporal Xception network
- Multi-stream sequence model
- Fast-forward sequence model

Within this framework, we first train neural networks on the video data to extract features from the RGB frames, optical flow, and audio, and then feed these features into the proposed temporal models to classify the video.
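
A minimal sketch of the hand-off between the two stages, assuming each modality has already been reduced to one feature vector per sampled time step and that the three streams are time-aligned. Concatenating them frame by frame (early fusion) is only one simple option, shown for illustration; it is not necessarily how the multi-stream sequence model combines modalities. The helper name and all feature sizes are hypothetical.

```python
import numpy as np

def concat_modalities(rgb: np.ndarray, flow: np.ndarray, audio: np.ndarray) -> np.ndarray:
    """Early fusion: concatenate time-aligned per-step features along the feature axis.

    Each input has shape (T, D_m) with the same T; the output has shape
    (T, D_rgb + D_flow + D_audio) and can be fed to any sequence model.
    """
    if not (rgb.shape[0] == flow.shape[0] == audio.shape[0]):
        raise ValueError("modalities must have the same number of time steps")
    return np.concatenate([rgb, flow, audio], axis=1)

rng = np.random.default_rng(0)
T = 5                              # hypothetical number of sampled time steps
rgb = rng.random((T, 8))           # hypothetical RGB feature size
flow = rng.random((T, 8))          # hypothetical optical-flow feature size
audio = rng.random((T, 4))         # hypothetical audio feature size
fused = concat_modalities(rgb, flow, audio)
print(fused.shape)  # (5, 20)
```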

The proposed temporal modeling approaches are individually powerful and complementary to one another. In particular, the multi-stream sequence model makes the best use of multimodal cues, the fast-forward sequence model plays an essential role in building a very deep sequence model, and the temporal Xception network learns discriminative temporal patterns. The shifting attention network relies solely on attention and is our best single model.
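
To make "based solely on attention" concrete, here is a sketch of multi-group attention pooling under stated assumptions; it is not the authors' implementation. We assume each of `G` groups scores every frame with its own weight vector, softmax-normalizes those scores over time, takes the attention-weighted average of the frame features, then scales and shifts the result with learnable per-group scalars (`alpha`, `beta`) and L2-normalizes it. The function names, parameter shapes, and this exact "shifting" step are assumptions for illustration.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    z = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multi_group_attention_pool(x, w, alpha, beta):
    """Pool a (T, D) frame sequence with G independent attention groups (sketch).

    x:     (T, D) per-frame features
    w:     (G, D) one attention weight vector per group (assumed parameterization)
    alpha: (G,)   per-group scale (assumed)
    beta:  (G,)   per-group shift (assumed)
    Returns a (G * D,) video descriptor.
    """
    scores = x @ w.T                  # (T, G): relevance of each frame to each group
    a = softmax(scores, axis=0)       # attention weights over time, summing to 1 per group
    pooled = a.T @ x                  # (G, D): attention-weighted average per group
    shifted = alpha[:, None] * pooled + beta[:, None]  # scale and shift each group
    norms = np.linalg.norm(shifted, axis=1, keepdims=True)
    shifted = shifted / np.maximum(norms, 1e-12)       # L2-normalize each group
    return shifted.reshape(-1)        # concatenate the G group descriptors

rng = np.random.default_rng(0)
T, D, G = 6, 4, 3                     # hypothetical sizes
x = rng.standard_normal((T, D))
w = rng.standard_normal((G, D))
out = multi_group_attention_pool(x, w, alpha=np.ones(G), beta=np.zeros(G))
print(out.shape)  # (12,)
```

Using several groups lets different attention heads focus on different frames, and the resulting descriptor does not depend on frame order, which keeps the model purely attention-based.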

Our new approach to video understanding and classification ultimately won Baidu first place at this year's ActivityNet Challenge, with a top-5 accuracy of 94.8% (a clip counts as correct if its true label is among the model's five highest-ranked predictions) and a top-1 accuracy of 80.4%. Our algorithm also placed third among more than 650 teams in the YouTube-8M Challenge, and it is the technology used to recommend a variety of video content to over 100 million daily users of Baidu's NewsFeed product.
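
Top-k accuracy counts a clip as correct when its true label appears anywhere among the model's k highest-scoring classes; top-1 is ordinary accuracy. A minimal sketch in plain Python follows; the scores and labels are made-up illustrations, not challenge data, and official evaluation scripts may handle details such as tied scores differently.

```python
from typing import Sequence

def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of clips whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels) or len(labels) == 0:
        raise ValueError("need one score vector per label, and at least one clip")
    hits = 0
    for clip_scores, label in zip(scores, labels):
        # Class indices sorted by descending score; keep the first k.
        ranked = sorted(range(len(clip_scores)), key=lambda c: clip_scores[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)

# Hypothetical scores for 3 clips over 4 classes.
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true label 1 is ranked 1st
    [0.5, 0.1, 0.3, 0.1],  # true label 2 is ranked 2nd
    [0.4, 0.3, 0.2, 0.1],  # true label 3 is ranked 4th
]
labels = [1, 2, 3]
print(top_k_accuracy(scores, labels, k=1))  # 1 of 3 clips correct
print(top_k_accuracy(scores, labels, k=2))  # 2 of 3 clips correct
```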

For more details about our solutions for the ActivityNet and YouTube-8M challenges, please read our full paper.

Mimee Xu contributed to this blog