2017-12-07

Our digital world and data are growing faster today than at any time in the past, even faster than our computing power. Deep learning helps us quickly make sense of immense data, and offers users the best AI-powered products and experiences.

To continually improve user experience, our challenge is to quickly improve our deep learning models for existing and emerging application domains. Model architecture search yields important improvements, but this search often depends on epiphany: breakthroughs often require complex reframing of the modeling problem, and can take weeks or months of testing.

It would be great if we could supplement model architecture search with more reliable ways to improve model accuracy.

Today, we are releasing a large-scale study showing that deep learning accuracy improves predictably as we grow training data set size. Through empirical testing, we find predictable accuracy scaling as long as we have enough data and compute power to train large models. These results hold for a broad spectrum of state-of-the-art models over four application domains: machine translation, language modeling, image classification, and speech recognition.

More specifically, our results show that generalization error (the measure of how well a model can predict new samples) decreases as a power law of the training set size. Prior theoretical work also shows that error scaling should be a power law. However, these works typically predict a “steep” learning curve, with a power-law exponent of -0.5, suggesting models should learn very quickly. Our empirically collected learning curves show smaller-magnitude exponents in the range [-0.35, -0.07]: real models learn real-world data more slowly than theory suggests.
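To make the gap between these exponents concrete, here is a small back-of-the-envelope sketch (our illustration, not code from the study). If error scales as `error(m) = alpha * m**beta` for training set size `m`, dividing error by a factor `k` requires growing the training set by `k**(-1/beta)`. The function name `data_multiplier` is hypothetical.

```python
def data_multiplier(beta: float, error_reduction: float = 2.0) -> float:
    """How many times more training data is needed to divide the error by
    `error_reduction`, assuming error(m) = alpha * m**beta with beta < 0.

    alpha cancels out, so only the exponent matters.
    """
    if beta >= 0:
        raise ValueError("beta must be negative for error to fall as data grows")
    return error_reduction ** (-1.0 / beta)


# The theoretical exponent and the two ends of the empirical range above.
for beta in (-0.5, -0.35, -0.07):
    print(f"beta = {beta:+.2f}: {data_multiplier(beta):,.0f}x more data to halve error")
```

Halving error takes 4x more data at the theoretical exponent of -0.5, roughly 7x at -0.35, and on the order of 20,000x at -0.07, which is why the measured exponents matter so much for planning.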

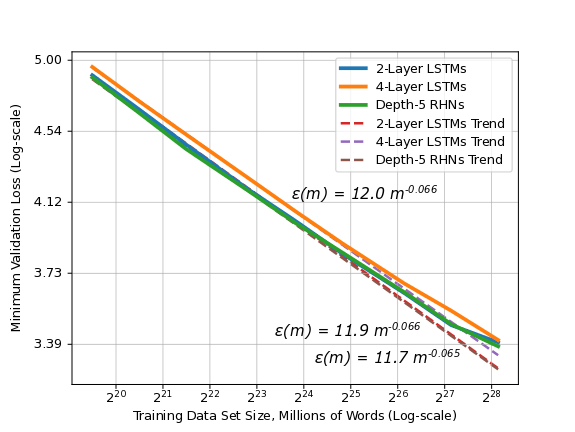

As an example, consider our results for word language modeling below (Note the log-log scale!):

Word language models show predictable power-law validation error scaling as training set size grows.

For word language modeling, we test LSTM and RHN models on subsets of the Billion Word dataset. The figure above shows each model architecture’s validation error (an approximation to generalization error) for the best-fit model size at each training set size. Each learning curve is a predictable power law, and surprisingly, the curves share the same power-law exponent. On larger training sets, models tend to drift away from the curve, but we find that optimization hyperparameter search often closes the gap.
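Because a power law `error(m) = alpha * m**beta` becomes a straight line in log-log space (`log error = log alpha + beta * log m`), its exponent can be estimated with an ordinary least-squares line fit on the log-transformed points, for example the best-fit validation error at each training set size. The sketch below is an assumed, minimal approach in pure Python, run on synthetic data generated from a known curve; it is not the fitting code used in the paper, and `fit_power_law` is a hypothetical name.

```python
import math
import random


def fit_power_law(sizes, errors):
    """Least-squares fit of log(error) = log(alpha) + beta * log(size).

    Returns (alpha, beta). Assumes all sizes and errors are positive.
    """
    xs = [math.log(m) for m in sizes]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    beta = sxy / sxx                           # slope in log-log space
    alpha = math.exp(y_mean - beta * x_mean)   # intercept, mapped back from log space
    return alpha, beta


# Synthetic (made-up) learning curve: error = 5.0 * m**-0.3 with small
# multiplicative noise, at training set sizes doubling from 2**10 to 2**20.
random.seed(0)
sizes = [2 ** k for k in range(10, 21)]
errors = [5.0 * m ** -0.3 * math.exp(random.gauss(0.0, 0.01)) for m in sizes]

alpha, beta = fit_power_law(sizes, errors)
print(f"alpha ~ {alpha:.2f}, beta ~ {beta:.3f}")
```

On this synthetic curve the fit recovers an exponent close to the -0.3 used to generate it. Real learning curves should only be fit where they actually follow the power law, since points where the curve flattens (very small or very large data sets) would bias the slope.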

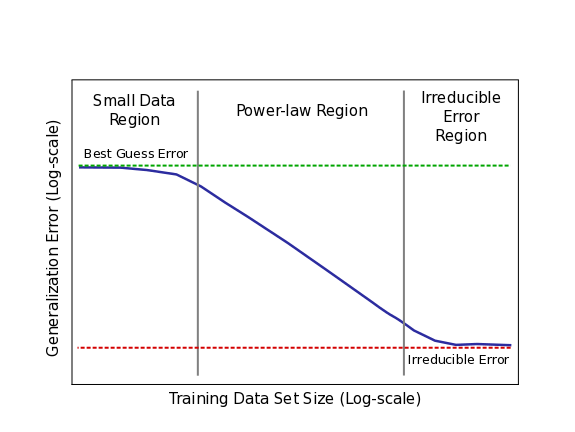

Model error improves starting with “best guessing” and following the power-law curve down to “irreducible error”.

More generally, our empirical results suggest that learning curves take the following form (Again, log-log scale!):

Sketch of power-law learning curves for real applications

The figure above shows a cartoon sketch of a power-law plot that breaks down the phases of learning curves for real applications. The curve begins in the small data region, where models struggle to learn from a small number of training samples. Here, models perform only as well as “best” or “random” guessing. The middle portion of the learning curve is the power-law region, where each new training sample provides information that helps models improve predictions on previously unseen samples. The power-law exponent defines the steepness of this curve (its slope when viewed on a log-log scale) and indicates how difficult the data is to understand. Finally, for most real-world applications, there is likely to be a non-zero lower bound on error past which models will be unable to improve (we have yet to reach this irreducible error in our real-world tests, but we have verified that it exists for toy problems). This irreducible error is caused by a combination of factors inherent in real-world data.
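One simple way to encode the three regions described above is to clamp a power law between a best-guess ceiling and an irreducible floor. The function below is a hypothetical toy model for intuition only: the form `min(best_guess, irreducible + alpha * m**beta)`, the name `sketch_learning_curve`, and all parameter values are our own assumptions, not fitted values from the study.

```python
def sketch_learning_curve(m, alpha, beta, best_guess, irreducible):
    """Toy learning curve with the three regions described above.

    - small data region: error is capped at the best/random-guess error
    - power-law region: error ~ irreducible + alpha * m**beta (beta < 0)
    - irreducible error region: the power-law term shrinks toward zero as m
      grows, so error approaches the `irreducible` floor
    """
    return min(best_guess, irreducible + alpha * m ** beta)


# Hypothetical parameters chosen only to make the three regions visible.
params = dict(alpha=20.0, beta=-0.3, best_guess=0.9, irreducible=0.05)
for m in (10, 10**3, 10**5, 10**7, 10**12):
    print(f"m = {m:>17,}: error = {sketch_learning_curve(m, **params):.3f}")
```

With these made-up values, the smallest training sets sit at the 0.9 best-guess ceiling, mid-sized sets fall along the power law, and the largest set is barely above the 0.05 floor.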

Across the applications we test, we find:

- Power-law learning curves exist across all applications, model architectures, optimizers, and loss functions.
- Most surprisingly, for a single application, different model architectures and optimizers show the same power-law exponent: the different models learn at the same relative rate as training set size increases.
- The model size (in number of parameters) required to best fit each training set grows sublinearly with training set size. This relationship is also empirically predictable.
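One natural way to model the sublinear model-size relationship (an assumption in this sketch) is another power law, `s(m) = s_ref * (m / m_ref) ** beta_p` with `0 < beta_p < 1`, where `s` is the parameter count and `m` the training set size. The exponent 0.7, the reference sizes, and the name `params_for_data` are hypothetical placeholders, not values from the study. The point is the consequence: each 10x increase in data calls for less than a 10x increase in parameters.

```python
def params_for_data(m, m_ref, s_ref, beta_p):
    """Scale a reference model size s_ref (best-fit parameter count at
    training set size m_ref) to training set size m, assuming
    s(m) = s_ref * (m / m_ref) ** beta_p.

    Sublinear growth corresponds to 0 < beta_p < 1.
    """
    if not 0.0 < beta_p < 1.0:
        raise ValueError("sublinear growth needs 0 < beta_p < 1")
    return s_ref * (m / m_ref) ** beta_p


# Hypothetical reference point: a 10M-parameter model best fits 1M samples.
m_ref, s_ref, beta_p = 1e6, 1e7, 0.7
for m in (1e6, 1e7, 1e8):
    s = params_for_data(m, m_ref, s_ref, beta_p)
    print(f"{m:.0e} samples -> ~{s:.2e} params ({s / s_ref:.1f}x the reference model)")
```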

We hope that these findings can spark a broader conversation in the deep learning community about ways to speed up deep learning progress. For deep learning researchers, learning curves can assist model debugging and predict accuracy targets for improved model architectures. There is also an opportunity for redoubled effort to theoretically predict or interpret learning curve exponents. Further, predictable learning curves can guide decisions about whether and how to grow data sets and how to design and expand systems, and they underscore the importance of continued computational scaling.
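As a hypothetical planning sketch, assume error follows `error(m) = irreducible + alpha * m**beta`. Solving for `m` gives the training set size needed to reach a target error, and also shows that a target at or below the irreducible floor cannot be reached by adding data alone. The curve form, parameter values, and the name `data_for_target_error` are assumptions for illustration, not results from the study.

```python
import math


def data_for_target_error(target, alpha, beta, irreducible=0.0):
    """Training set size m at which irreducible + alpha * m**beta == target.

    Assumes beta < 0. Returns math.inf when the target is at or below the
    irreducible floor, i.e. when no amount of extra data would reach it.
    """
    if beta >= 0:
        raise ValueError("beta must be negative")
    gap = target - irreducible
    if gap <= 0:
        return math.inf
    return (gap / alpha) ** (1.0 / beta)


# Hypothetical fitted curve and floor (not values from the study).
alpha, beta, floor = 20.0, -0.3, 0.05
for target in (0.30, 0.10, 0.05):
    m = data_for_target_error(target, alpha, beta, irreducible=floor)
    print(f"target error {target:.2f}: ~{m:.3g} training samples")
```

With these made-up parameters, the first two targets return finite (and rapidly growing) data requirements, while the target equal to the floor returns `inf`.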

More details and data can be found in our paper: Deep Learning Scaling is Predictable, Empirically

Authors: Joel Hestness, Sharan Narang, Newsha Ardalani, Gregory Diamos, Heewoo Jun, Hassan Kianinejad, Md. Mostofa Ali Patwary, Yang Yang, Yanqi Zhou, Yi Li

This work would not have been possible without the significant efforts of the Systems team at Baidu Silicon Valley AI Lab (SVAIL). In addition to co-authors, we specifically thank members of the machine learning research team, Rewon Child, Jiaji Huang, Sercan Arik, and Anuroop Sriram, who have provided valuable feedback. We also thank those who have contributed to the discussion: Awni Hannun, Andrew Ng, Ilya Sutskever, Ian Goodfellow, Pieter Abbeel.