2023-08-18

We are thrilled to share that the Baidu Research Robotics and Autonomous Driving Lab (RAL) has once again demonstrated its commitment to pushing the boundaries of innovation and research in computer vision and robotics. With great pride, we present the achievements of our team, which has had seven papers accepted at prestigious venues: the journals IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), Autonomous Robots, and IEEE Robotics and Automation Letters (RA-L), and the conferences ACM Multimedia (ACM-MM) and the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).

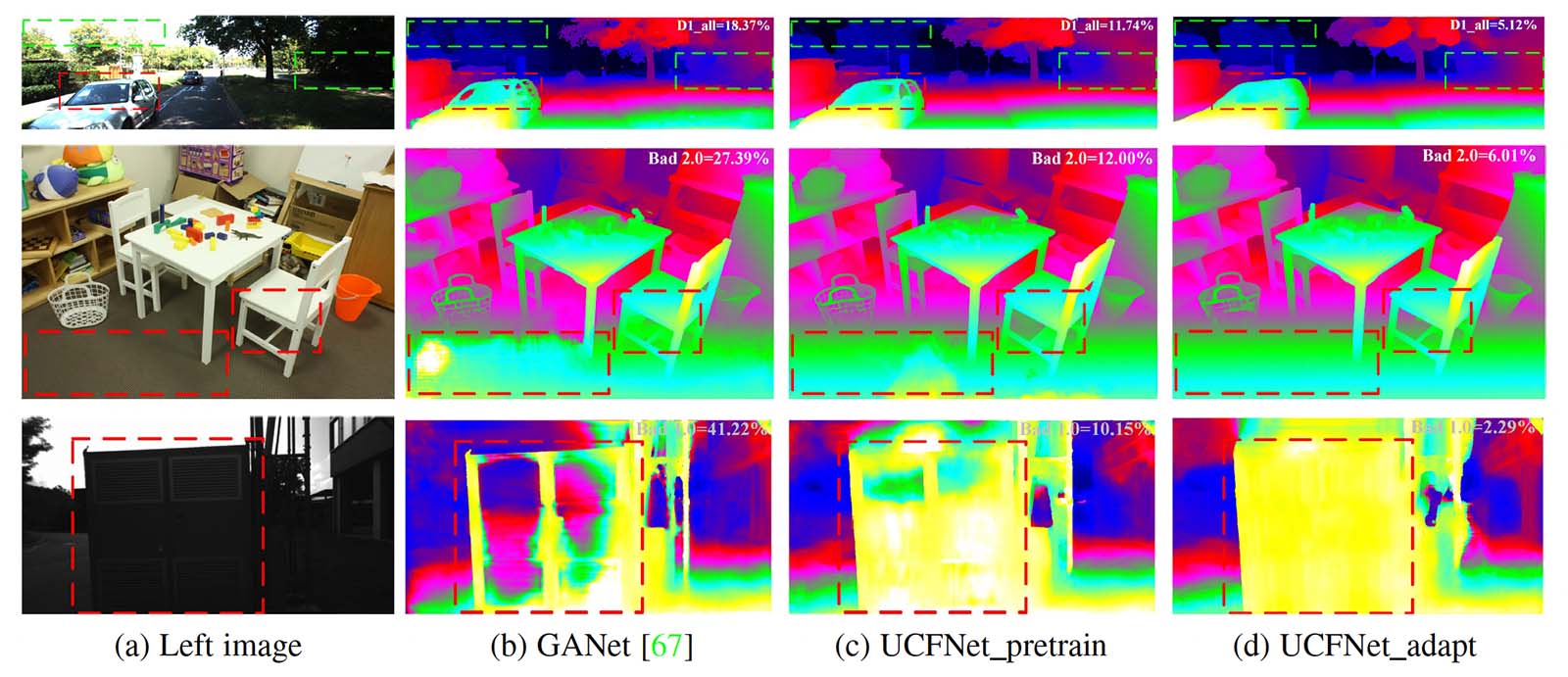

1. Digging into Uncertainty-based Pseudo-label for Robust Stereo Matching

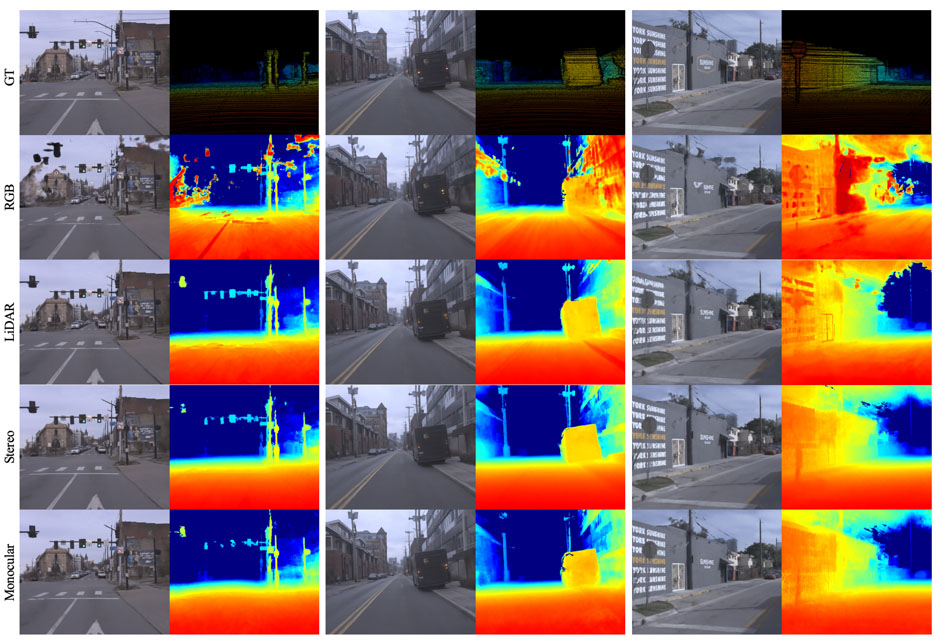

Stereo matching is a classical research topic in computer vision that aims to estimate a disparity (or depth) map from a pair of rectified stereo images. However, because of domain differences and unbalanced disparity distributions across datasets, current stereo-matching approaches are commonly limited to a specific dataset and generalize poorly to others. This domain-shift issue is usually addressed by substantial adaptation on target-domain ground-truth data, which is costly and hard to obtain in practical settings.

In this IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) journal paper, we use uncertainty estimation to tackle both the domain differences and the unbalanced disparity distributions across datasets in the stereo-matching task. Specifically, to balance the disparity distribution, we employ pixel-level uncertainty estimation to adaptively adjust the disparity search space of the subsequent stage, driving the network to progressively prune out the space of unlikely correspondences. Then, to cope with the scarcity of ground-truth data, we propose an uncertainty-based pseudo-label to adapt the pre-trained model to the new domain: pixel-level and area-level uncertainty estimates are used to filter out high-uncertainty pixels of the predicted disparity maps, producing sparse but reliable pseudo-labels that narrow the domain gap.
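To make the filtering step concrete, here is a minimal NumPy sketch, not the UCFNet implementation. It assumes the network already outputs a per-pixel uncertainty map, approximates the area-level uncertainty as the mean pixel uncertainty over a k × k window, and uses illustrative thresholds. The function names and the rule that the next-stage search range grows linearly with uncertainty are hypothetical.

```python
import numpy as np


def local_mean(x: np.ndarray, k: int = 3) -> np.ndarray:
    """Mean over a k x k window (edge-padded); a stand-in for area-level uncertainty."""
    assert k % 2 == 1, "window size must be odd"
    pad = k // 2
    xp = np.pad(x, pad, mode="edge")
    h, w = x.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += xp[dy:dy + h, dx:dx + w]
    return out / (k * k)


def uncertainty_pseudo_labels(disp, unc, pixel_thresh=0.5, area_thresh=0.5, k=3):
    """Keep only disparities whose pixel- and area-level uncertainty are both low.

    Returns (labels, keep): labels is NaN where a pixel was filtered out,
    so the result is a sparse pseudo-label map.
    """
    keep = (unc < pixel_thresh) & (local_mean(unc, k) < area_thresh)
    labels = np.where(keep, disp, np.nan)
    return labels, keep


def adaptive_search_range(disp, unc, scale=2.0, min_half_width=1.0):
    """Hypothetical rule: next-stage disparity range is disp +/- scale * uncertainty."""
    half = np.maximum(scale * unc, min_half_width)
    return disp - half, disp + half
```

In this sketch, confident pixels get a narrow search window and supervise the target-domain model, while uncertain ones get a wider window and are simply excluded from the pseudo-label loss.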

Experimentally, our method shows strong cross-domain, adaptation, and joint generalization, and won 1st place on the stereo task of the Robust Vision Challenge 2020. Additionally, our uncertainty-based pseudo-labels can be extended to train monocular depth estimation networks in an unsupervised way, even achieving performance comparable to supervised methods. This work has been accepted for publication in the highly esteemed IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) journal. Check out the project at https://github.com/gallenszl/UCFNet and the paper at https://arxiv.org/abs/2307.16509.

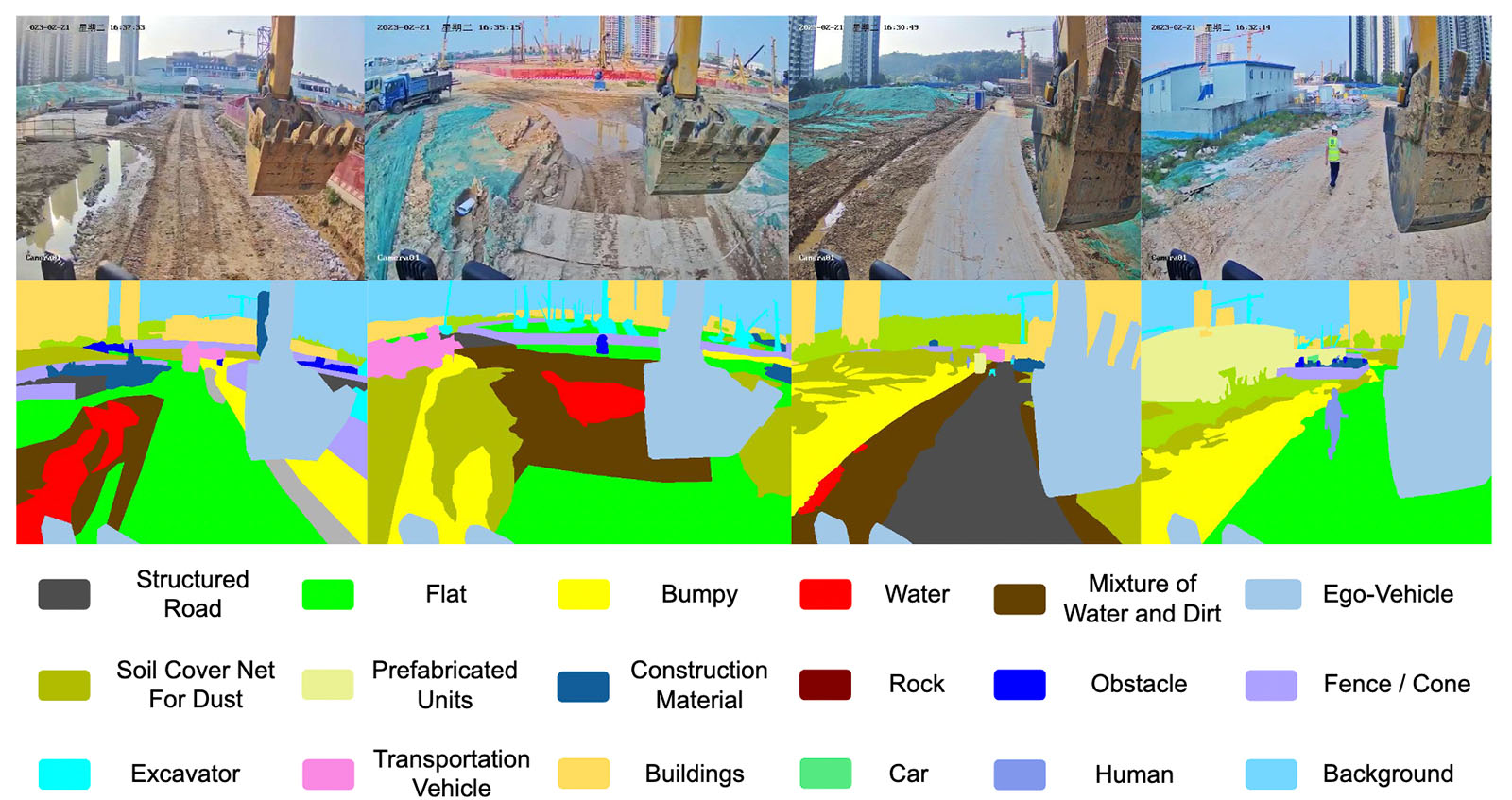

2. TNES: Terrain Traversability Mapping, Navigation, and Excavation System for Autonomous Excavators on Worksite

Published in Autonomous Robots, this research presents a terrain traversability mapping and navigation system (TNS) for autonomous excavators operating in unstructured environments. We efficiently extract terrain features from RGB images and 3D point clouds and incorporate them into a global map for planning and navigation. Our system can adapt to changing environments and update the terrain information in real time. The work exemplifies a fusion of computer vision and robotics, opening new avenues for the construction industry. Check out the work at: https://link.springer.com/article/10.1007/s10514-023-10113-9.
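The mapping step can be pictured with a simple 2.5D height grid. The sketch below is our own simplification, not the TNS pipeline: it uses only point-cloud heights (no RGB features), and the class name, cell size, and step-height threshold are hypothetical. Each new scan overwrites the cells it observes, which is one simple way to let the map follow a changing worksite.

```python
import numpy as np


class HeightTraversabilityGrid:
    """Hypothetical 2.5D grid: one height value per cell, NaN = never observed."""

    def __init__(self, size_m: float, resolution_m: float, max_step_m: float = 0.3):
        self.res = resolution_m
        self.n = int(round(size_m / resolution_m))
        self.height = np.full((self.n, self.n), np.nan)
        self.max_step = max_step_m

    def integrate(self, points: np.ndarray) -> None:
        """Fuse an (N, 3) point cloud given in map coordinates (x, y, z).

        Cells hit by this scan take the scan's highest point, replacing
        older values so that the map tracks terrain changes (e.g. digging).
        """
        idx = np.floor(points[:, :2] / self.res).astype(int)
        inside = np.all((idx >= 0) & (idx < self.n), axis=1)
        scan = np.full((self.n, self.n), -np.inf)
        np.maximum.at(scan, (idx[inside, 0], idx[inside, 1]), points[inside, 2])
        seen = np.isfinite(scan)
        self.height[seen] = scan[seen]

    def traversable(self) -> np.ndarray:
        """Observed cells whose height step to every 4-neighbour is below max_step."""
        ok = ~np.isnan(self.height)
        with np.errstate(invalid="ignore"):
            # Height steps along axis 0 and axis 1; comparisons involving NaN are False.
            step_x = np.abs(np.diff(self.height, axis=0)) >= self.max_step
            step_y = np.abs(np.diff(self.height, axis=1)) >= self.max_step
        ok[:-1, :] &= ~step_x
        ok[1:, :] &= ~step_x
        ok[:, :-1] &= ~step_y
        ok[:, 1:] &= ~step_y
        return ok
```

A planner could then restrict its search to cells where `traversable()` is `True`, re-querying after every `integrate` call.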

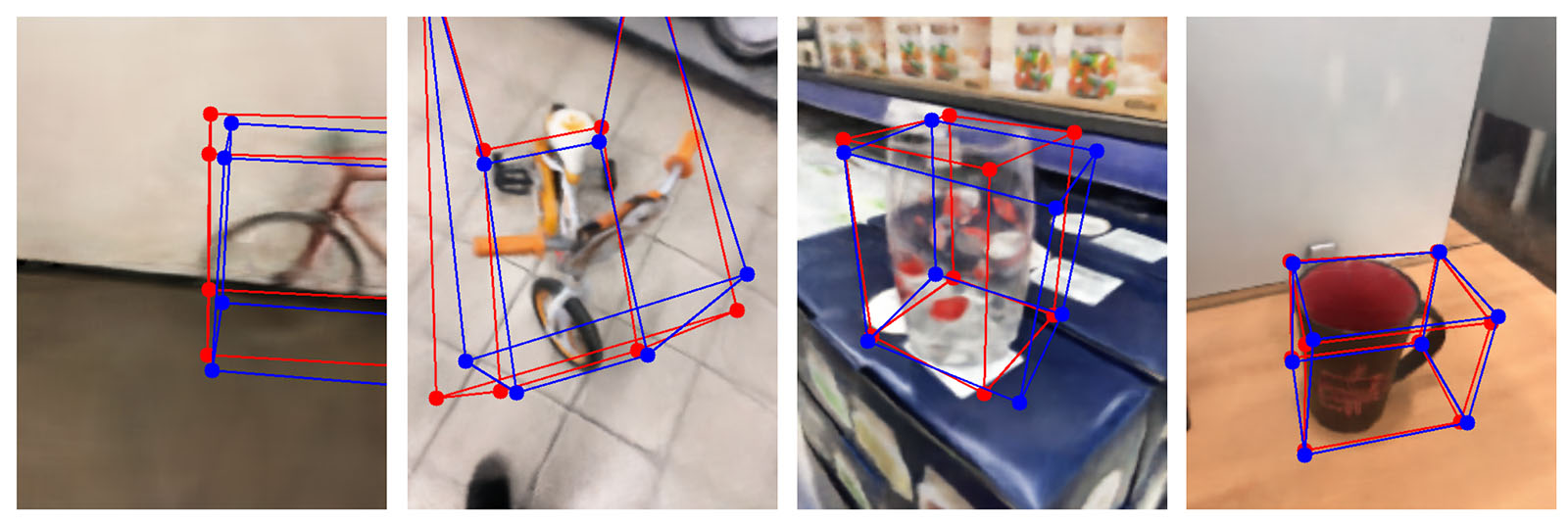

3. NeRF-Loc: Transformer-Based Object Localization Within Neural Radiance Fields

This research introduces NeRF-Loc, a transformer-based framework that extracts 3D bounding boxes of objects in NeRF scenes. NeRF-Loc takes a pre-trained NeRF model and a camera view as input and produces labeled, oriented 3D bounding boxes of objects as output. Published in IEEE Robotics and Automation Letters (RA-L), this work holds great potential for object localization in various real-world applications. Check out the work at: https://arxiv.org/abs/2209.12068.

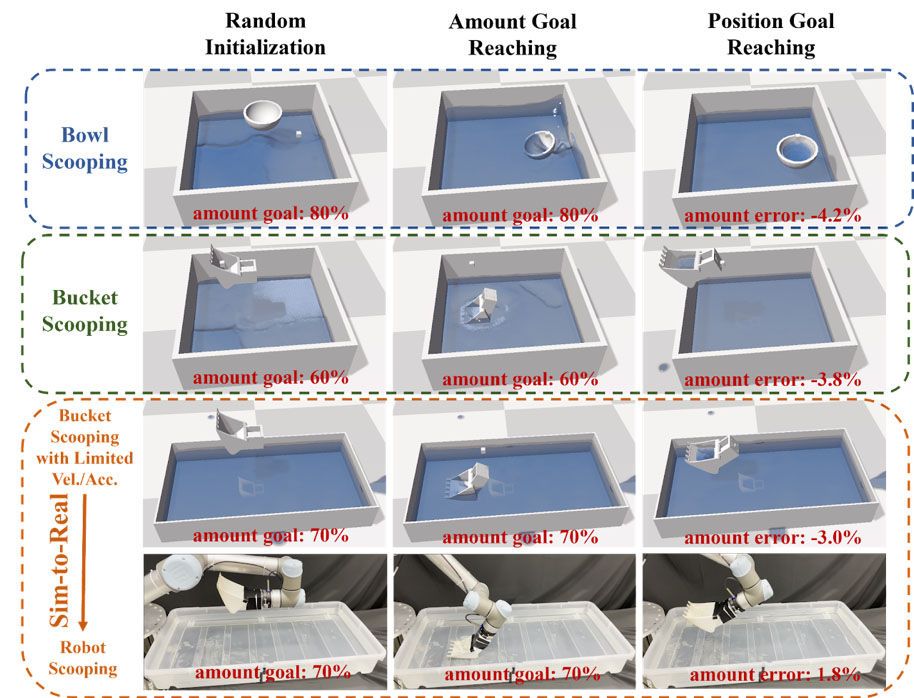

4. GOATS: Goal Sampling Adaptation for Scooping with Curriculum Reinforcement Learning

At the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Baidu Research RAL unveils GOATS (Goal Sampling Adaptation for Scooping), a curriculum reinforcement learning method that learns an effective and generalizable policy for robotic scooping tasks. Specifically, we use a goal-factorized reward formulation and interpolate the position-goal and amount-goal distributions to create a curriculum throughout the learning process. The videos and the paper are on the project page: https://sites.google.com/view/goatscooping
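A rough sketch of the curriculum idea follows. It assumes uniform goal distributions whose bounds are linearly interpolated from an easy range to the full target range, and a goal-factorized reward written as the product of a position term and an amount term. These choices, the class and function names, the reward scales, and the success-based schedule are illustrative assumptions rather than the exact formulation in the GOATS paper.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class UniformGoalRange:
    """Axis-aligned uniform distribution over goals (hypothetical helper)."""
    low: np.ndarray
    high: np.ndarray

    def lerp(self, target: "UniformGoalRange", alpha: float) -> "UniformGoalRange":
        """Interpolate bounds: alpha=0 gives this range, alpha=1 gives the target range."""
        a = float(np.clip(alpha, 0.0, 1.0))
        return UniformGoalRange((1 - a) * self.low + a * target.low,
                                (1 - a) * self.high + a * target.high)

    def sample(self, rng: np.random.Generator) -> np.ndarray:
        return rng.uniform(self.low, self.high)


def sample_goal(rng, alpha_pos, alpha_amt, easy_pos, full_pos, easy_amt, full_amt):
    """Position and amount goals each follow their own curriculum progress."""
    pos_goal = easy_pos.lerp(full_pos, alpha_pos).sample(rng)
    amt_goal = easy_amt.lerp(full_amt, alpha_amt).sample(rng)
    return pos_goal, amt_goal


def factorized_reward(pos, amt, pos_goal, amt_goal, pos_scale=0.05, amt_scale=0.1):
    """Product of a position-goal term and an amount-goal term, each in (0, 1]."""
    r_pos = np.exp(-np.linalg.norm(pos - pos_goal) / pos_scale)
    r_amt = np.exp(-np.sum(np.abs(amt - amt_goal)) / amt_scale)
    return float(r_pos * r_amt)


def advance(alpha, success_rate, threshold=0.8, step=0.1):
    """Widen the goal distribution once the policy succeeds often enough."""
    return min(1.0, alpha + step) if success_rate >= threshold else alpha
```

Keeping separate progress values for the position and amount goals lets one goal type widen before the other, which is one plausible reading of interpolating the two distributions independently.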

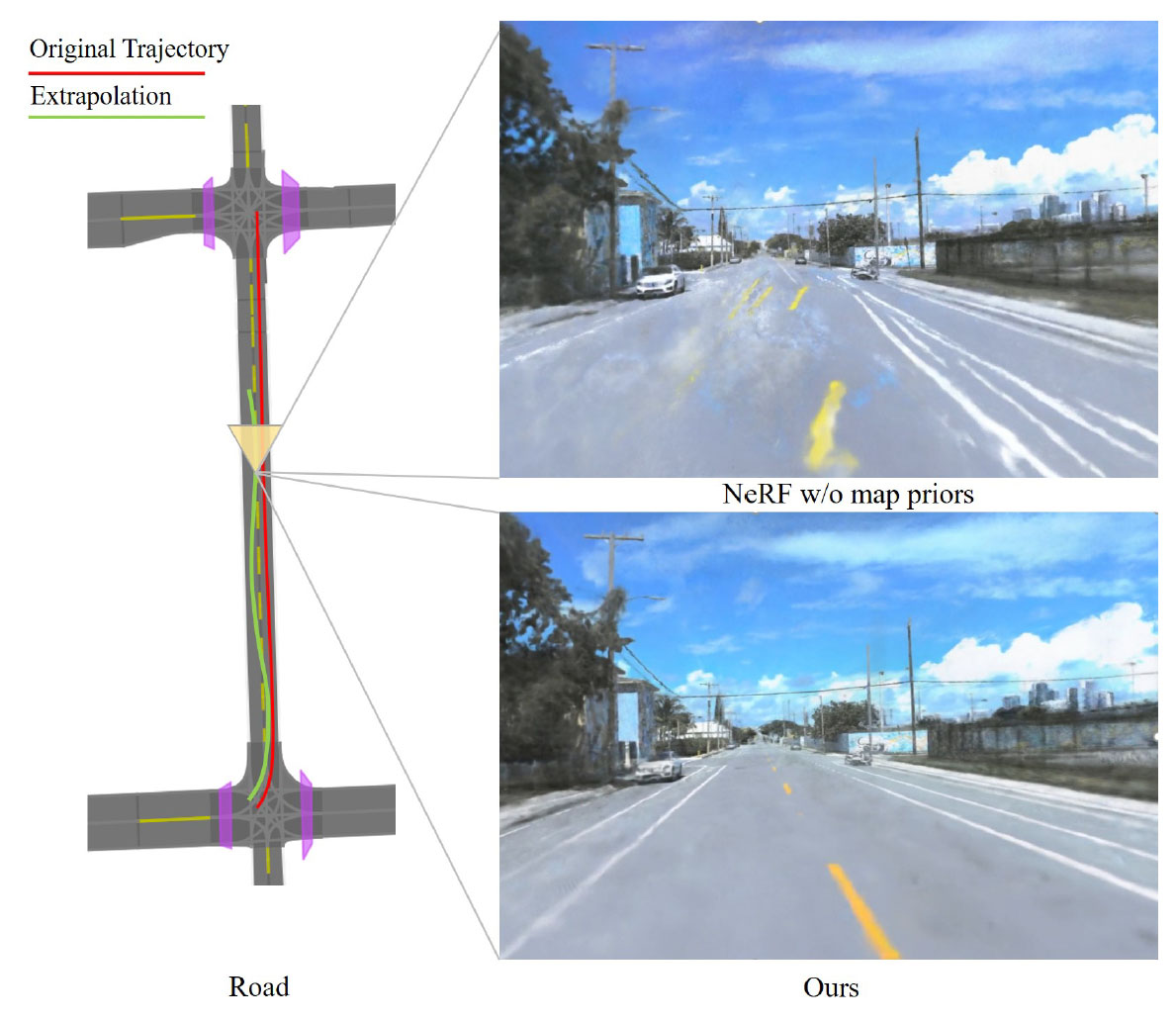

5. MapNeRF: Incorporating Map Priors into Neural Radiance Fields for Driving View Simulation

As end-to-end autonomous driving comes to dominate the research and development of new-generation autonomous driving technology, a simulator that supports closed-loop simulation becomes essential. A long-standing challenge is the sim-to-real gap between the virtual world and reality: existing simulators commonly use game engines such as Unreal Engine 5 (UE5) and Unity to render designed assets such as roads, vehicles, pedestrians, and trees, whereas the views that an autonomous vehicle actually sees differ from those rendered assets at the pixel level.

Recent advances in neural radiance fields (NeRF) enable reconstructing a 3D world from 2D images, which could help bridge the sim-to-real gap in autonomous driving simulation. However, existing NeRF methods are hampered by the limited log data collected in road tests, which can lead to unsatisfactory results, particularly when the camera is placed off the recorded trajectory: semantic consistency cannot be guaranteed when synthesizing images from such deviated views. We observe this problem in all neural radiance field approaches under this data condition. Our work on MapNeRF incorporates map priors into neural radiance fields for driving view simulation. These map priors, which are easily accessible in autonomous driving simulation, help build a NeRF that synthesizes high-quality deviated views. We propose to supervise the ground density using multi-view consistency with uncertainty tempering. This strategy surprisingly improves simulation quality and can be plugged into a large number of NeRF methods.

This paper has been accepted for the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems. This work contributes significantly to the field of autonomous driving and perception.

A video demonstrating the proposed MapNeRF can be viewed at: https://youtu.be/jEQWr-Rfh3A. Check out the paper at: https://arxiv.org/pdf/2307.14981.pdf.

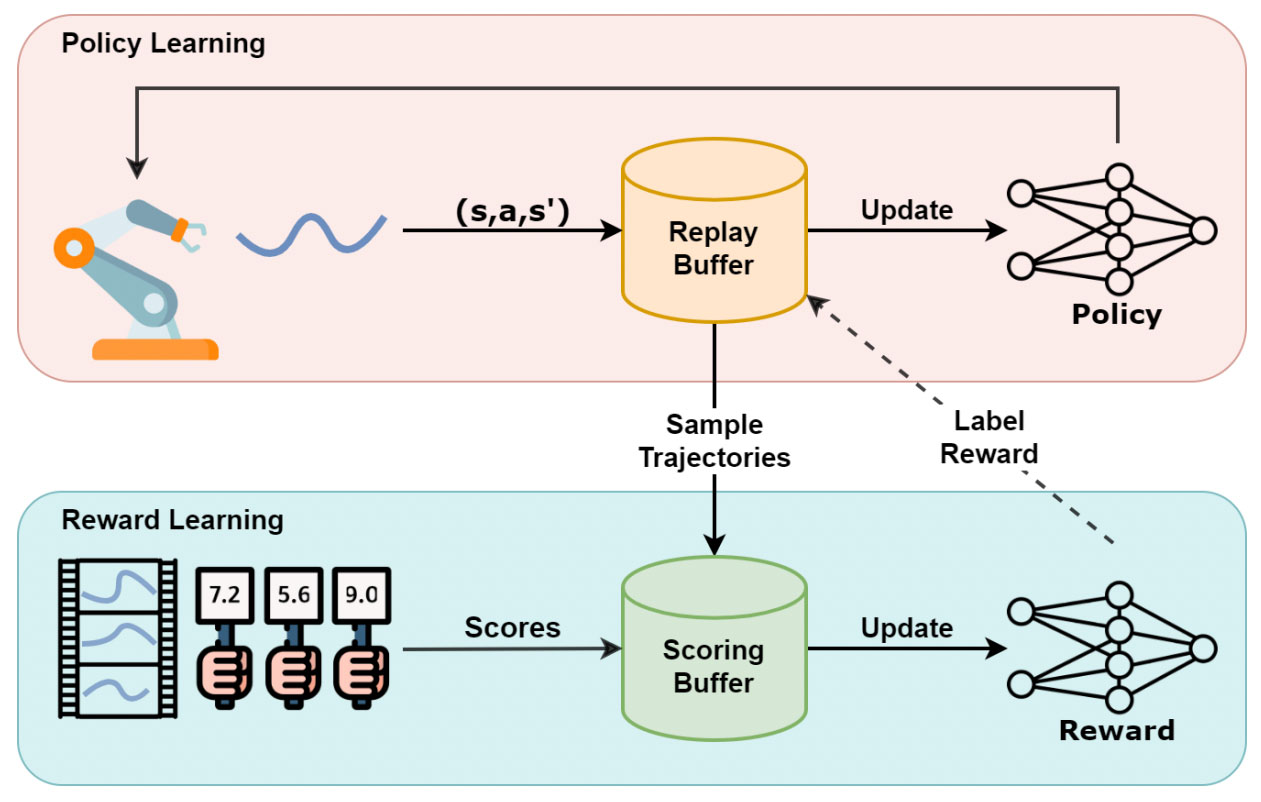

6. Boosting Feedback Efficiency of Interactive Reinforcement Learning by Adaptive Learning from Scores

RLHF (Reinforcement Learning from Human Feedback) is an essential component in building large language models; at its core is the complex problem of learning a reward model (RM) from human feedback.

At the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Baidu RAL presented a new method that uses scores provided by humans, instead of pairwise preferences, to improve the feedback efficiency of interactive reinforcement learning. This adaptive learning-from-scores approach propels interactive learning to new heights. Check out the paper at: https://arxiv.org/abs/2307.05405.
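To illustrate why scores can carry more information per query than pairwise preferences, the sketch below fits a linear reward model directly to per-trajectory scores by ridge regression and, for contrast, shows the standard Bradley-Terry loss used with pairwise preferences. The linear model and the function names are assumptions for illustration; the adaptive mechanism that gives the paper its name is not modeled here.

```python
import numpy as np


def _with_bias(features: np.ndarray) -> np.ndarray:
    """Append a constant column so the model has a bias term."""
    return np.hstack([features, np.ones((features.shape[0], 1))])


def fit_reward_from_scores(features, scores, l2=1e-3):
    """Ridge regression r(x) = w . [x, 1] fitted to human scores (bias also regularized)."""
    X = _with_bias(features)
    A = X.T @ X + l2 * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ scores)


def predict_reward(w, features):
    return _with_bias(features) @ w


def pairwise_preference_loss(r_a, r_b, prefer_a):
    """Bradley-Terry negative log-likelihood with P(a preferred over b) = sigmoid(r_a - r_b)."""
    margin = np.where(prefer_a, r_a - r_b, r_b - r_a)
    # -log(sigmoid(m)) = log(1 + exp(-m)), computed stably with logaddexp
    return float(np.mean(np.logaddexp(0.0, -margin)))
```

Each score labels one trajectory with a graded value, whereas each preference query yields only one bit about the relative order of two trajectories.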

7. Digging into Depth Priors for Outdoor Neural Radiance Fields

Neural Radiance Fields (NeRF) have demonstrated impressive performance in vision and graphics tasks such as novel view synthesis and immersive reality. However, the shape-radiance ambiguity of radiance fields remains a challenge, especially with sparse viewpoints. Recent work integrates depth priors into outdoor NeRF training to alleviate this issue. However, the criteria for selecting depth priors and the relative merits of different priors have not been thoroughly investigated. Moreover, how best to use these depth priors in training remains an unexplored problem.

In this ACM Multimedia conference paper, we provide a comprehensive study and evaluation of employing depth priors in outdoor neural radiance fields, covering common depth-sensing technologies and most ways of applying them. Specifically, we conduct extensive experiments with two representative NeRF methods equipped with four commonly used depth priors and different depth usages on two widely used outdoor datasets. Our experimental results reveal several interesting findings that can benefit practitioners and researchers training NeRF models with depth priors. The results and the paper are on the project page: https://cwchenwang.github.io/outdoor-nerf-depth/
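One common way of using a depth prior, shown here as a minimal sketch, is to render an expected depth per ray with the standard NeRF volume-rendering weights and penalize its L1 distance to the prior wherever the prior is valid (for example, only on rays where a sparse LiDAR point projects). The function names and the choice of an L1 penalty are assumptions; the study compares different depth usages, and this loss illustrates just one of them.

```python
import numpy as np


def render_weights(sigma: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Volume-rendering weights w_i = T_i * (1 - exp(-sigma_i * delta_i)).

    sigma, t: (R, S) densities and sorted sample distances for R rays with S samples each.
    """
    delta = np.diff(t, axis=-1)
    # Treat the last interval as (effectively) infinite, as in standard NeRF rendering.
    delta = np.concatenate([delta, np.full_like(t[:, :1], 1e10)], axis=-1)
    alpha = 1.0 - np.exp(-sigma * delta)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j), with T_0 = 1.
    trans = np.cumprod(
        np.concatenate([np.ones_like(alpha[:, :1]), 1.0 - alpha[:, :-1]], axis=-1), axis=-1
    )
    return trans * alpha


def rendered_depth(sigma, t):
    """Expected ray termination depth D = sum_i w_i * t_i."""
    return np.sum(render_weights(sigma, t) * t, axis=-1)


def depth_prior_loss(sigma, t, depth_prior, valid):
    """Mean L1 error between rendered depth and the prior on rays where the prior exists."""
    if not np.any(valid):
        return 0.0
    err = np.abs(rendered_depth(sigma, t) - depth_prior)
    return float(np.mean(err[valid]))
```

The `valid` mask is what lets the same loss accept either dense priors (e.g. from a monocular network) or sparse ones (e.g. projected LiDAR), which is one axis along which depth priors differ.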

Baidu Research RAL is honored to contribute to the global research community through these remarkable publications. These achievements underscore our passion for excellence and reinforce Baidu Research RAL’s position as a driving force in cutting-edge technology.