2022-10-25


This week marks the beginning of two flagship conferences in computer vision and robotics: the European Conference on Computer Vision (ECCV 2022), a premier computer vision research conference, and the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), a top academic robotics conference with more than 3,000 onsite attendees from around the world.

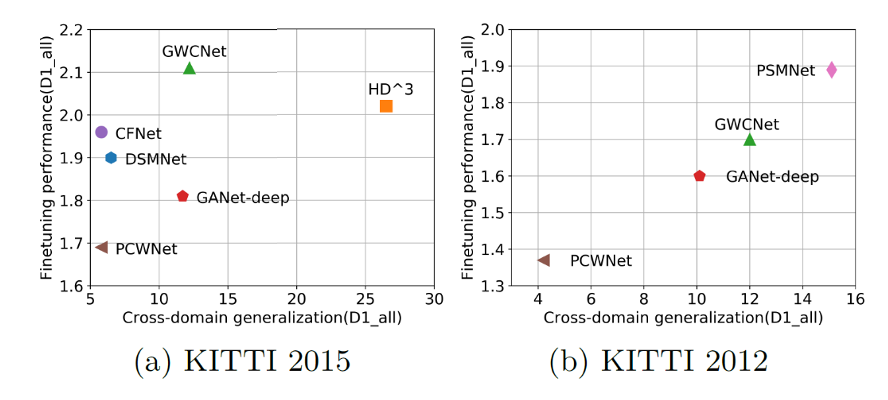

The Baidu Research Robotics and Autonomous Driving Lab (RAL) will present five research papers at these two conferences. The papers cover new developments in LiDAR-based and stereo-based perception, which help autonomous vehicles perceive their surroundings, as well as human-like learning strategies that make robotic excavators more efficient.

These research innovations epitomize Baidu RAL's latest efforts to advance automation technology for transportation and construction machinery, aimed at steering human interactions with our world toward a better, safer, and greener future.

Autonomous driving is set to have a profound impact on society by reducing road casualties and congestion. Baidu’s robotaxis now operate in more than 10 cities and had accumulated one million rides as of July 2022.

Automation is also in urgent demand in the heavy construction and mining industries, which face hiring shortages because of hazardous and toxic work environments. Last year, Baidu RAL introduced an autonomous excavator system (AES) for material loading tasks. The research behind this system was published in venues such as Science Robotics and Robotics: Science and Systems. Several of the world's leading construction machinery companies and end users have since adopted AES to automate traditional heavy construction machinery, and AES has been used for more than 10,000 hours in the real world.

ECCV Oral Presentation Paper: Pyramid Combination and Warping Cost Volume for Stereo Matching

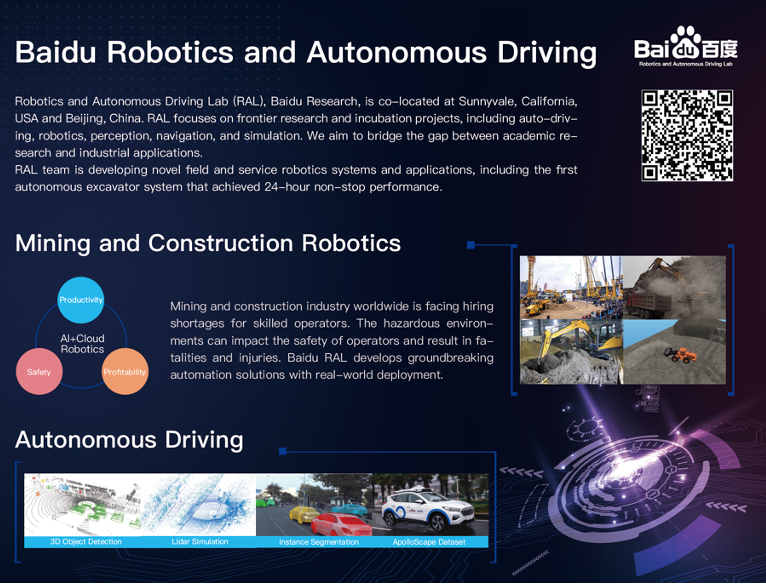

Left: Cross-domain generalization evaluation. The proposed PCW-Net stereo matching approach generates a smoother disparity map in the textureless road region than other approaches.

Right: Visualization of the reconstructed 3D environment based on PCW-Net.

Depth sensing plays a central role in many computer vision applications. The availability of 3D data can boost the effectiveness of solutions to tasks such as autonomous driving, SLAM, and robot navigation. Active 3D sensors have well-known drawbacks that can limit their practical use: LiDAR, for example, is expensive and provides only sparse measurements, while structured light has a limited working range and is mainly suited to indoor environments. In contrast, passive techniques that infer depth from images suit more scenarios thanks to their low cost and ease of deployment. Among these, binocular stereo (estimating the disparity map between a rectified image pair) has become an active research topic.
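Once a stereo network has estimated disparity on a rectified pair, metric depth follows from the standard pinhole relation Z = f·B/d, where f is the focal length in pixels, B the camera baseline in meters, and d the disparity in pixels. The sketch below is a minimal illustration of that conversion; the function name and the example calibration values (f = 720 px, B = 0.54 m) are assumptions for illustration, not values from the paper.

```python
import numpy as np

def disparity_to_depth(disparity_px, focal_px, baseline_m, eps=1e-6):
    """Convert a disparity map (pixels) from a rectified stereo pair to metric depth.

    Depth follows Z = f * B / d. Pixels with (near-)zero disparity are marked as
    infinitely far (np.inf) instead of dividing by zero.
    """
    disparity_px = np.asarray(disparity_px, dtype=float)
    depth = np.full_like(disparity_px, np.inf)
    valid = disparity_px > eps
    depth[valid] = focal_px * baseline_m / disparity_px[valid]
    return depth

# Example with assumed calibration values: a 10 px disparity maps to roughly 38.9 m.
print(disparity_to_depth([10.0, 0.0], focal_px=720.0, baseline_m=0.54))
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters far more for distant objects than for nearby ones, which is one reason smooth, accurate disparity in regions such as textureless road surfaces is valuable.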

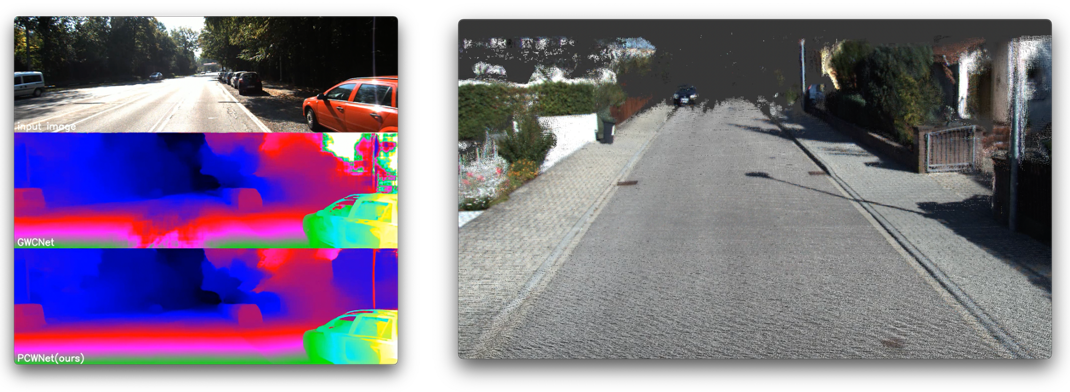

The network architecture of the proposed PCW-Net

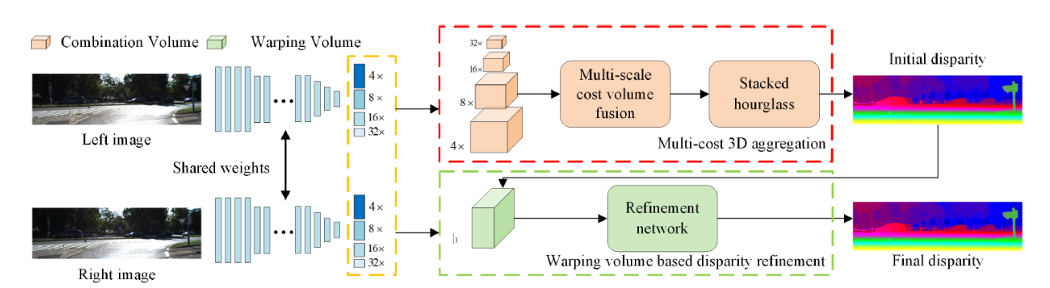

Baidu RAL will present an oral paper (acceptance rate 2.7%), Pyramid Combination and Warping Cost Volume for Stereo Matching, at ECCV 2022. In this paper, Baidu researchers propose PCW-Net, a network based on pyramid combination and warping cost volumes that achieves strong cross-domain generalization as well as high fine-tuned accuracy on various benchmarks. PCW-Net has two key designs. First, the researchers construct combination volumes on the upper levels of the feature pyramid and develop a cost volume fusion module that integrates them for initial disparity estimation. Second, they construct a warping volume at the last level of the pyramid for disparity refinement. When trained on synthetic datasets and evaluated on unseen real datasets, the method shows strong cross-domain generalization and outperforms existing state-of-the-art methods by a large margin. Among all published methods as of March 7, 2022, it ranked first on KITTI 2012, second on KITTI 2015, and first on Argoverse.

Model generalization ability vs. fine-tuning performance on KITTI 2012 & 2015 datasets
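To make the two kinds of cost volume more concrete, here is a minimal NumPy sketch of the general ideas PCW-Net builds on: a full-range correlation cost volume for an initial estimate, and a warping cost volume that only searches small residual offsets around that estimate. This is not PCW-Net's implementation; its multi-level combination volumes, fusion module, and learned 3D aggregation are omitted, and all function names are hypothetical.

```python
import numpy as np

def correlation_cost_volume(left, right, max_disp):
    """Full-range cost volume: cost[d, y, x] = mean_c left[c, y, x] * right[c, y, x - d].

    left, right: (C, H, W) feature maps from a rectified stereo pair.
    Entries where x - d falls outside the image stay at 0.
    """
    _, H, W = left.shape
    cost = np.zeros((max_disp, H, W), dtype=left.dtype)
    for d in range(max_disp):
        if d == 0:
            cost[0] = (left * right).mean(axis=0)
        else:
            cost[d, :, d:] = (left[:, :, d:] * right[:, :, :-d]).mean(axis=0)
    return cost

def warp_right_to_left(right, disparity):
    """Warp right features into the left view by sampling right[:, y, x - disparity[y, x]].

    Uses nearest-neighbour sampling for simplicity; out-of-image samples are zeroed.
    """
    _, H, W = right.shape
    xs = np.arange(W)[None, :] - np.round(disparity).astype(int)  # (H, W) source columns
    valid = (xs >= 0) & (xs < W)
    xs = np.clip(xs, 0, W - 1)
    ys = np.broadcast_to(np.arange(H)[:, None], (H, W))
    return right[:, ys, xs] * valid[None]

def warping_cost_volume(left, right, init_disp, radius):
    """Residual cost volume over offsets [-radius, radius] around an initial disparity."""
    residuals = np.arange(-radius, radius + 1)
    cost = np.stack([(left * warp_right_to_left(right, init_disp + r)).mean(axis=0)
                     for r in residuals])
    return cost, residuals
```

A winner-take-all readout is `cost.argmax(axis=0)` for the initial disparity and `init_disp + residuals[wcost.argmax(axis=0)]` after refinement. Learned stereo networks typically regularize the volume with 3D convolutions and regress disparity with a soft-argmin instead; the point of the warping volume is that refinement only needs a narrow search range.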

ECCV Research Paper: ProposalContrast: Unsupervised Pre-training for LiDAR-based 3D Object Detection (https://arxiv.org/pdf/2207.12654.pdf)

Existing approaches to unsupervised point cloud pre-training are limited to either scene-level or point/voxel-level instance discrimination. Scene-level methods tend to lose local details that are crucial for recognizing road objects, while point/voxel-level methods inherently suffer from a limited receptive field that cannot capture large objects or the surrounding context. Because region-level representations are better suited to 3D object detection, this research devises a new unsupervised point cloud pre-training framework, called ProposalContrast, that learns robust 3D representations by contrasting region proposals. Specifically, given an exhaustive set of region proposals sampled from each point cloud, the geometric relations among points within each proposal are modeled to create expressive proposal representations. To better accommodate the properties of 3D detection, ProposalContrast optimizes both inter-cluster and inter-proposal separation, sharpening the discriminativeness of proposal representations across semantic classes and object instances. The generalizability and transferability of ProposalContrast are verified on various 3D detectors (PV-RCNN, CenterPoint, PointPillars, and PointRCNN) and datasets (KITTI, Waymo, and ONCE).
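The paragraph above starts from "an exhaustive set of region proposals sampled from each point cloud." This post does not describe the sampling procedure, so the sketch below assumes a common pattern in point cloud networks: pick well-spread proposal centers with farthest point sampling, then treat all points within a fixed radius of each center as one spherical proposal. Both functions are hypothetical illustrations, not the ProposalContrast code.

```python
import numpy as np

def farthest_point_sampling(points, k, seed=0):
    """Pick k well-spread point indices: each new pick is the point farthest from all picks so far."""
    rng = np.random.default_rng(seed)
    idx = np.empty(k, dtype=int)
    idx[0] = rng.integers(points.shape[0])
    dist = np.linalg.norm(points - points[idx[0]], axis=1)  # distance to nearest pick so far
    for i in range(1, k):
        idx[i] = int(dist.argmax())
        dist = np.minimum(dist, np.linalg.norm(points - points[idx[i]], axis=1))
    return idx

def spherical_proposals(points, centers, radius):
    """Return, for each center, the indices of points within `radius` (one region proposal each)."""
    d = np.linalg.norm(points[None, :, :] - centers[:, None, :], axis=2)  # (K, N) distances
    return [np.flatnonzero(row <= radius) for row in d]
```

Farthest point sampling spreads centers across the scene rather than concentrating them where points are dense (for example, near the sensor), so proposals cover both nearby and distant objects.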

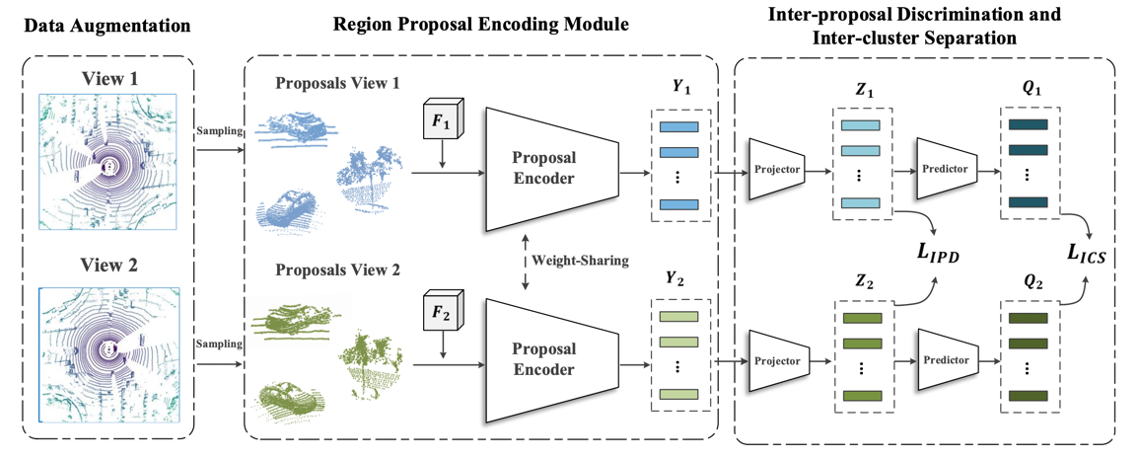

Illustration of the ProposalContrast framework. Given two augmented views of a point cloud, the method first samples paired region proposals and then extracts their features with a region proposal encoding module. Inter-proposal discrimination and inter-cluster separation are then enforced to optimize the whole network.
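Inter-proposal discrimination of the kind described in the caption is commonly implemented with an InfoNCE-style contrastive loss: the same proposal seen in two augmented views forms a positive pair, and all other proposals act as negatives. The NumPy sketch below shows that loss on precomputed embeddings. It is a generic InfoNCE illustration under that assumption, not the paper's exact objective; the inter-cluster separation term and the proposal encoder are not shown, and the temperature of 0.1 is an arbitrary example value.

```python
import numpy as np

def info_nce(z1, z2, temperature=0.1):
    """InfoNCE over paired proposal embeddings.

    z1[i] and z2[i] embed the same region proposal seen in two augmented views
    (positive pair); every other proposal in z2 acts as a negative for z1[i].
    """
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature                      # (N, N) cosine similarity / tau
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))             # positives sit on the diagonal
```

The loss is lowest when each proposal's embedding is closer to its own counterpart in the other view than to any other proposal, which is what pushes representations of different object instances apart.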

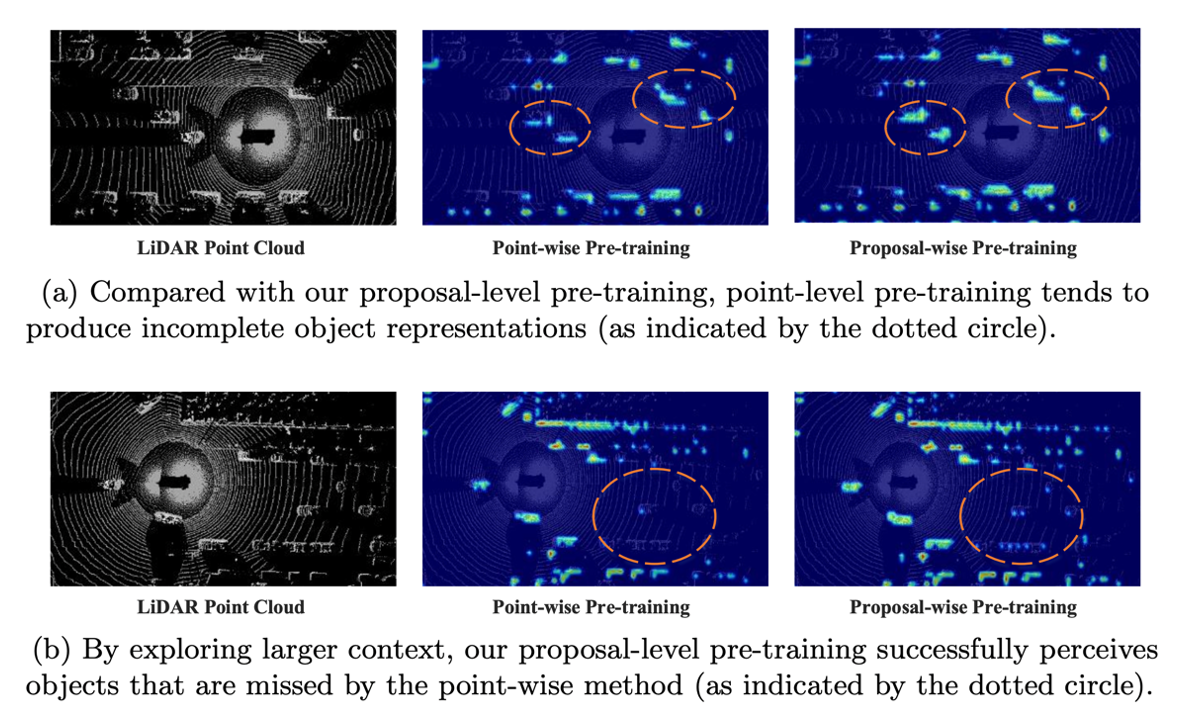

Comparison of VoxelNet representations learned from PointContrast and ProposalContrast.

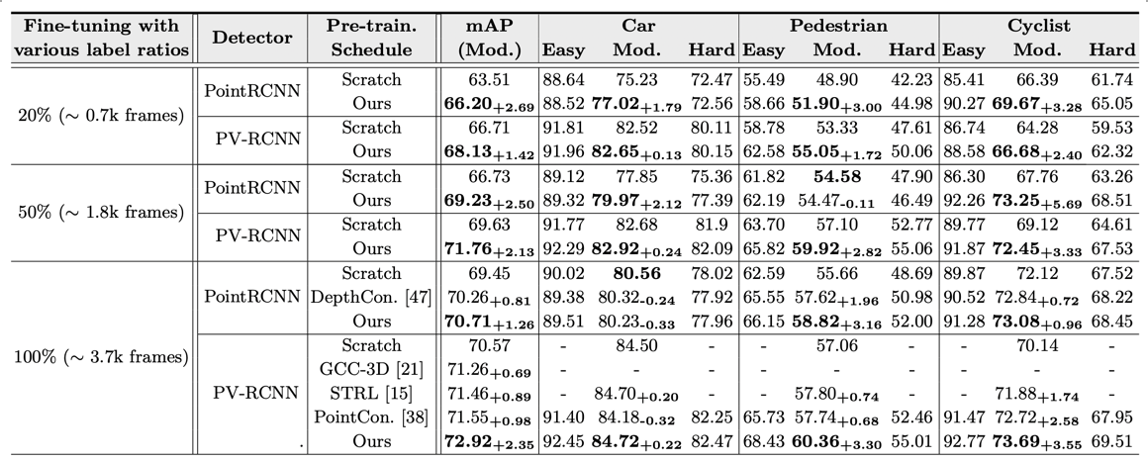

The table shows the data efficiency of the approach for 3D object detection on KITTI. The researchers pre-train the backbones of PointRCNN and PV-RCNN on the Waymo dataset and transfer them to KITTI 3D object detection under different label configurations. The approach yields consistent improvements in every setting and outperforms the concurrent self-supervised learning methods DepthContrast, PointContrast, GCC-3D, and STRL.

Baidu RAL will also present its latest progress on Semi-supervised 3D Object Detection with Proficient Teachers. The research proposes a new pseudo-labeling framework for semi-supervised 3D object detection that strengthens the teacher model into a proficient one through several necessary designs. The approach improves on the baseline by 9.51 mAP and, using only half of the annotations, outperforms the oracle model trained with full annotations on the benchmark.
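For readers new to pseudo-labeling, the generic teacher-student loop looks roughly like this: a teacher model labels unlabeled scans, only confident detections are kept as pseudo-labels for training the student, and the teacher's weights track an exponential moving average (EMA) of the student's. The sketch below shows just those two generic building blocks; the EMA update, the score threshold of 0.7, and the 7-parameter box layout are assumptions for illustration, and the specific designs that make the teacher "proficient" in this paper are not represented.

```python
import numpy as np

def ema_update(teacher, student, momentum=0.999):
    """Mean-teacher style update: teacher weights track an exponential moving average of the student."""
    return {name: momentum * teacher[name] + (1.0 - momentum) * student[name]
            for name in teacher}

def filter_pseudo_labels(boxes, scores, threshold=0.7):
    """Keep only confident teacher detections as pseudo-labels for unlabeled scans.

    boxes: (N, 7) array, e.g. (x, y, z, l, w, h, yaw); scores: (N,) confidences.
    """
    keep = scores >= threshold
    return boxes[keep], scores[keep]
```

The threshold trades pseudo-label precision against recall: a higher value admits fewer false positives but discards more true objects, which is part of why a stronger teacher directly improves the student.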

IROS Research Paper: Excavation of Fragmented Rocks with Multi-modal Model-based Reinforcement Learning

Excavators are widely used across engineering fields. However, operating an excavator is often both dangerous and costly, challenges that automated excavators can help overcome. Most of the literature on this topic focuses on excavating soil. Because soil is mostly homogeneous, once a digging path is laid out, the controller can usually just follow it.

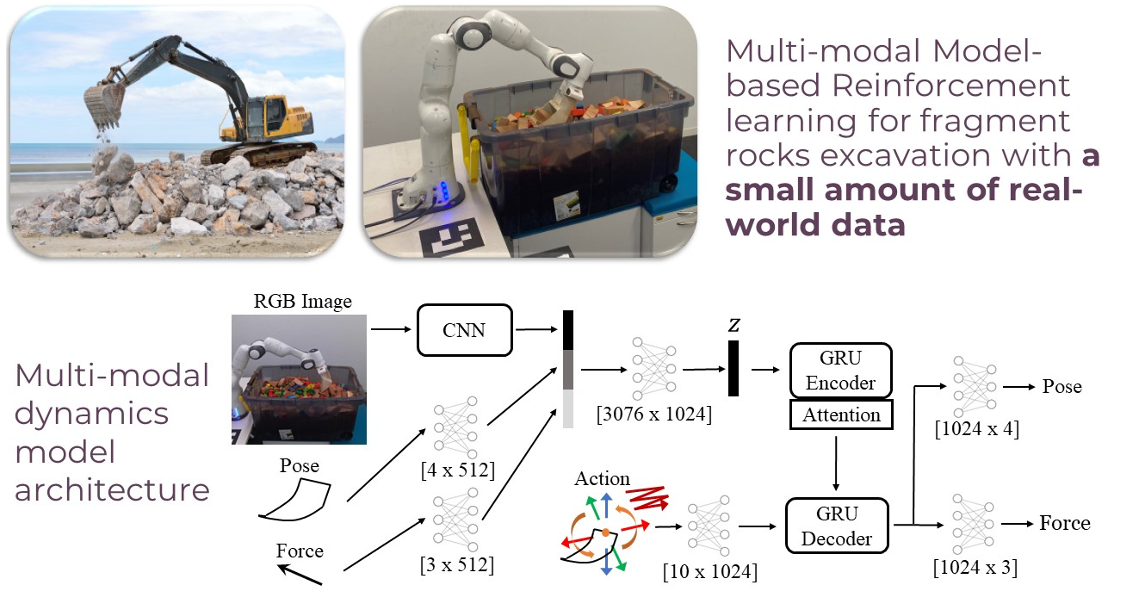

For a pile of rocks, however, if the operator simply moves the digging bucket straight through the rocks, the bucket is likely to get stuck, so smarter strategies are needed. In this work, the research team aimed to give automated excavators capabilities similar to those of human operators. The proposed method first defines a discrete set of primitive motions using excavation domain knowledge. It then learns an RNN-based multi-modal dynamics model from a small amount of real-world data. Finally, a model predictive controller (MPC) uses this model for closed-loop planning.
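The planning loop described above can be sketched as follows: candidate sequences of motion primitives are scored by rolling them out through a dynamics model, the best sequence is found by brute-force enumeration or random shooting (two of the planner types the team evaluated), only its first primitive is executed, and the controller then replans. In this minimal sketch, `toy_model` is a hypothetical stand-in for the learned RNN model, with made-up dig depths and a made-up jamming rule; the real system's primitives, state, and reward are not described in this post.

```python
import itertools
import numpy as np

def rollout_return(model, state, seq):
    """Sum predicted rewards of a primitive sequence under a (learned) dynamics model."""
    total = 0.0
    for action in seq:
        state, reward = model(state, action)
        total += reward
    return total

def brute_force_plan(model, state, primitives, horizon):
    """Score every primitive sequence of length `horizon` and return the best one."""
    best_seq, best_ret = None, -np.inf
    for seq in itertools.product(primitives, repeat=horizon):
        ret = rollout_return(model, state, seq)
        if ret > best_ret:
            best_seq, best_ret = seq, ret
    return best_seq, best_ret

def random_shooting_plan(model, state, primitives, horizon, n_samples=200, seed=0):
    """Score `n_samples` random primitive sequences and return the best one."""
    rng = np.random.default_rng(seed)
    best_seq, best_ret = None, -np.inf
    for _ in range(n_samples):
        seq = tuple(primitives[i] for i in rng.integers(len(primitives), size=horizon))
        ret = rollout_return(model, state, seq)
        if ret > best_ret:
            best_seq, best_ret = seq, ret
    return best_seq, best_ret

def mpc_episode(model, state, primitives, horizon, steps, planner):
    """Closed-loop MPC: plan, execute only the first primitive, observe, replan."""
    executed = []
    for _ in range(steps):
        seq, _ = planner(model, state, primitives, horizon)
        state, _ = model(state, seq[0])  # on hardware, this step would be the real excavator
        executed.append(seq[0])
    return executed, state

def toy_model(pile, depth):
    """Hypothetical stand-in for the learned model: cuts deeper than 0.5 jam the bucket."""
    if depth > 0.5:
        return pile, -1.0  # jam: nothing removed, penalty
    removed = min(depth, pile)
    return pile - removed, removed

# Example: with primitives at shallow/medium/deep depths, the planner avoids the jamming cut.
print(brute_force_plan(toy_model, 1.0, [0.1, 0.3, 0.6], horizon=2))
```

Brute force is exact but grows as (number of primitives)^horizon, which is why sampling-based planners such as random shooting or MCTS become attractive for longer horizons.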

Framework for learning-based excavation of fragmented rigid objects

To evaluate the new approach, researchers compared three manually designed strategies with brute-force, random-shooting, and Monte Carlo tree search (MCTS) planners over different planning horizons. The manual strategies and planners were tested in trials that penetrate the surface at shallow, medium, and deep depths. Two metrics were used: the number of trajectories that produced excessive force and jammed the robot, and the weight of excavated objects per action. The results show that the manual strategies significantly underperform the planners.

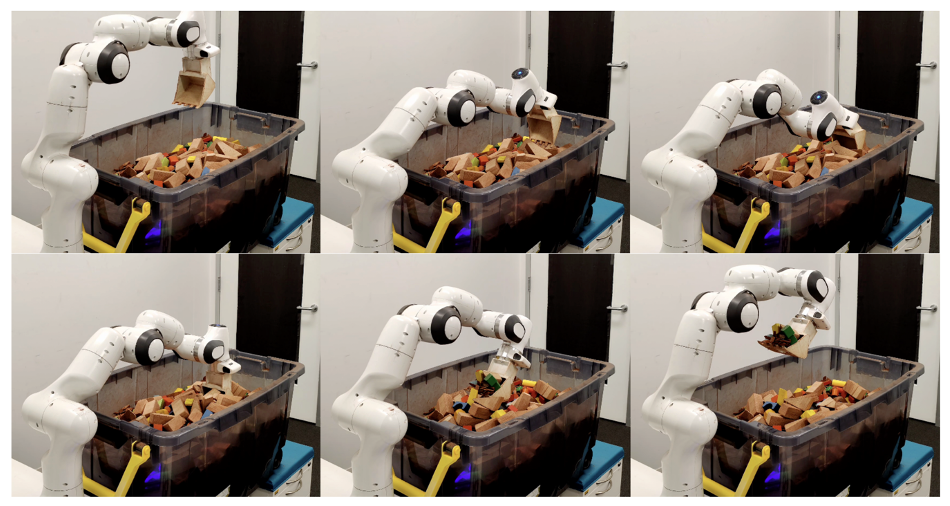

Trajectory execution on the robot arm for excavating rigid blocks

Baidu RAL will also present Imitation Learning and Model Integrated Excavator Trajectory Planning, an approach to learning-based robotic excavation, at IROS 2022. The two-stage method integrates data-driven imitation learning with model-based trajectory optimization to generate optimal trajectories for autonomous excavators. Baidu RAL researchers evaluated the method on different material types, such as sand and rigid blocks. The experimental results show that the two-stage algorithm, which combines expert knowledge with model optimization, increases excavation weight by up to 24.77% with low variance.
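One way to picture a two-stage "imitate, then optimize" pipeline: stage 1 fits a policy to expert demonstrations that maps the observed pile state to trajectory parameters, and stage 2 refines that proposal against a model-based cost while staying close to the expert prior. The sketch below uses a linear least-squares policy and gradient descent on a quadratic regularized objective purely for illustration. The feature and parameter vectors, `model_grad`, and the weight `lam` are all hypothetical; the paper's actual imitation learner and trajectory optimizer are not described in this post.

```python
import numpy as np

def fit_imitation_policy(features, expert_params):
    """Stage 1 (hypothetical): least-squares map from state features to trajectory parameters.

    features: (N, F) pile-state features from N expert demonstrations.
    expert_params: (N, P) trajectory parameters the expert used in each demonstration.
    """
    X = np.hstack([features, np.ones((features.shape[0], 1))])  # append a bias column
    W, *_ = np.linalg.lstsq(X, expert_params, rcond=None)
    return lambda f: np.append(f, 1.0) @ W

def refine_with_model(p_init, model_grad, lam=1.0, lr=0.1, iters=500):
    """Stage 2 (hypothetical): gradient descent on model_cost(p) + lam * ||p - p_init||^2.

    model_grad(p) returns the gradient of a model-predicted cost; the penalty term
    keeps the refined trajectory close to the imitation-learning proposal.
    """
    p = np.array(p_init, dtype=float)
    for _ in range(iters):
        grad = model_grad(p) + 2.0 * lam * (p - p_init)
        p -= lr * grad
    return p
```

For a quadratic model cost ||p - target||^2, the refined solution is the closed-form blend (target + lam * p_init) / (1 + lam), which makes the role of `lam` easy to see: larger values trust the expert prior more, smaller values trust the model more.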

About Baidu RAL

The Robotics and Autonomous Driving Lab (RAL), Baidu Research, has two locations, in Sunnyvale, California, USA, and Beijing, China. RAL focuses on frontier research and incubation projects, including autonomous driving, robotics, perception, navigation, and simulation. We aim to bridge the gap between academic research and industrial applications. The RAL team is developing novel construction, mining and transportation robotics systems and applications, including the first autonomous excavator system that has been used for more than 10,000 hours in the real world.