2021-06-18

The Computer Vision and Pattern Recognition Conference (CVPR) 2021 kicked off last weekend as a virtual event running from June 19 to June 25. As the premier annual computer vision conference, CVPR 2021 received 7,015 submissions and accepted 1,663 research papers, an acceptance rate of 23.7 percent.

At this year's CVPR, Baidu is pushing the edge forward in many important areas of computer vision including semantic image segmentation, video retrieval, 3D object detection, style transfer, video understanding, and transfer learning. These results will facilitate AI deployments in industries like healthcare, autonomous driving, smart cities, entertainment, and manufacturing.

We are also excited to co-organize the Neural Architecture Search workshop this year along with the 1st lightweight NAS challenge to bring together emerging research innovations in such areas. You can visit the site here for more details.

In this blog, we highlight innovations from some of our accepted research papers in more detail.

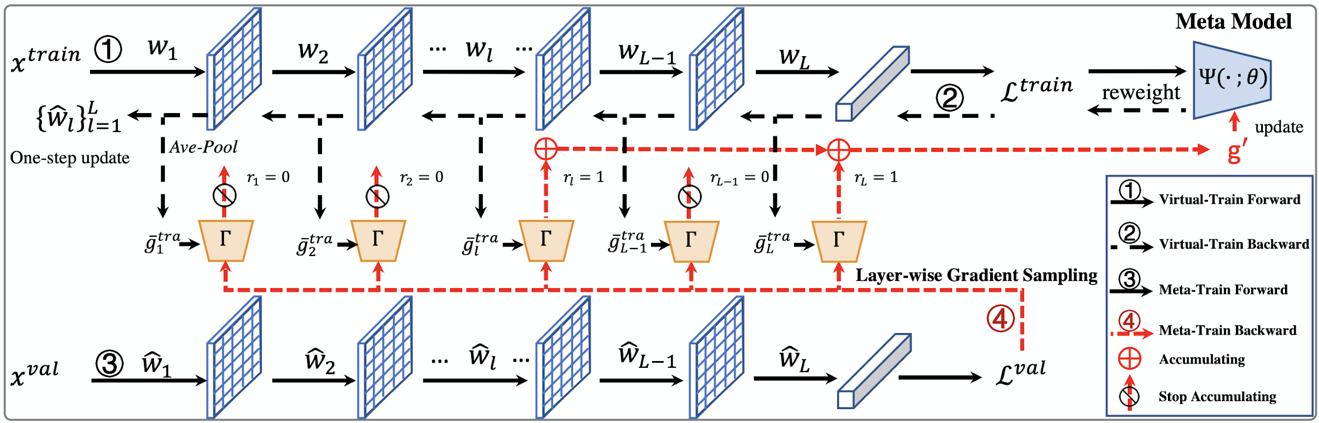

Faster Meta Update Strategy for Noise-Robust Deep Learning

It has been shown that deep neural networks are prone to overfitting on biased training data. To address this issue, meta-learning employs a meta model for correcting the training bias. Despite the promising performance, slow training is currently the bottleneck in meta-learning approaches. In this paper, we introduce a novel Faster Meta Update Strategy (FaMUS) to replace the most expensive step in the meta gradient computation with a faster layer-wise approximation. We empirically find that FaMUS yields not only a reasonably accurate but also a low-variance approximation of the meta gradient. We conduct extensive experiments to verify the proposed method on two tasks. We show our method is able to save two-thirds of the training time while maintaining comparable, or even achieving better, generalization performance. In particular, our method achieves state-of-the-art performance on both synthetic and realistic noisy labels, and obtains promising performance on long-tailed recognition on standard benchmarks.

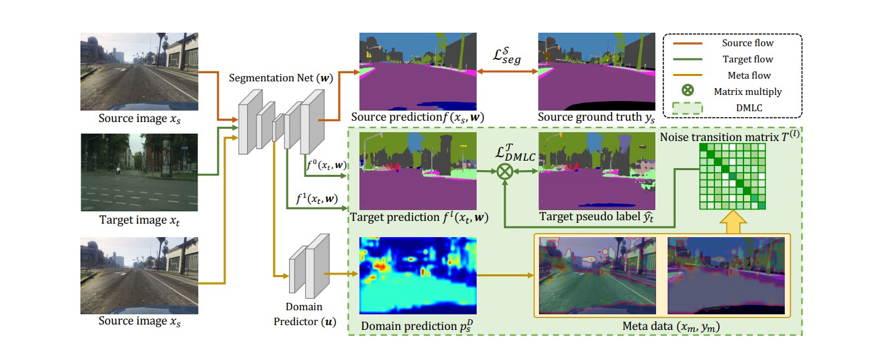

Unsupervised domain adaptation (UDA) aims to transfer knowledge from the labeled source domain to the unlabeled target domain. Existing self-training-based UDA approaches assign pseudo labels to target data and treat them as ground truth labels to fully leverage unlabeled target data for model adaptation. However, the pseudo labels generated by a model optimized on the source domain inevitably contain noise due to the domain gap. To tackle this issue, we advance a MetaCorrection framework, where a Domain-aware Meta-learning strategy is devised to benefit Loss Correction (DMLC) for UDA semantic segmentation. In particular, we model the noise distribution of pseudo labels in the target domain by introducing a noise transition matrix (NTM) and construct a meta data set with domain-invariant source data to guide the estimation of the NTM. Through risk minimization on the meta data set, the optimized NTM can correct the noise in pseudo labels and enhance the generalization ability of the model on the target data. Considering the capacity gap between shallow and deep features, we further employ the proposed DMLC strategy to provide matched and compatible supervision signals for different level features, thereby ensuring deep adaptation. Extensive experimental results highlight the effectiveness of our method against existing state-of-the-art methods on three benchmarks.
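To make the role of the noise transition matrix more concrete, here is a minimal sketch of forward loss correction for a single pixel. It assumes a row-stochastic matrix in which entry `ntm[i][j]` is the probability that true class `i` shows up as pseudo label `j`. The function names, the 3-class matrices, and the logits are hypothetical and chosen only for illustration; the paper's meta-learned estimation of the matrix is not reproduced here.

```python
import math

def softmax(logits):
    """Convert raw scores into class probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def corrected_nll(logits, pseudo_label, ntm):
    """Negative log-likelihood of a noisy pseudo label after forward correction.

    ntm[i][j] is the assumed probability that true class i is observed as
    pseudo label j, so every row sums to 1.
    """
    clean = softmax(logits)
    # Probability of observing this pseudo label under the noise model.
    noisy_prob = sum(clean[i] * ntm[i][pseudo_label] for i in range(len(clean)))
    return -math.log(noisy_prob)

# Hypothetical 3-class example: the model favours class 0, the pseudo label says 1.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
noisy = [[0.8, 0.2, 0.0], [0.1, 0.9, 0.0], [0.0, 0.0, 1.0]]
logits = [2.0, 0.5, -1.0]

loss_plain = corrected_nll(logits, 1, identity)   # pseudo label trusted as-is
loss_corrected = corrected_nll(logits, 1, noisy)  # label noise accounted for
```

With the identity matrix the loss reduces to ordinary cross-entropy. With the noisy matrix, a prediction that disagrees with a possibly wrong pseudo label is penalized less, which is the intuition behind correcting the loss rather than trusting every pseudo label.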

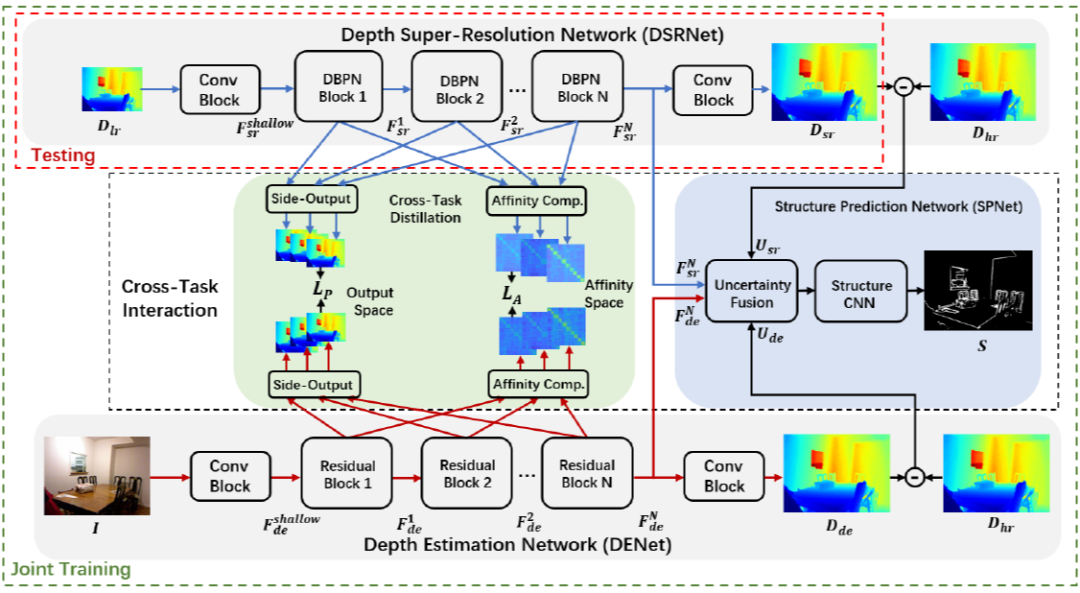

Existing color-guided depth super-resolution (DSR) approaches require paired RGB-D data as training samples, where the RGB image is used as structural guidance to recover the degraded depth map due to their geometrical similarity. However, the paired data may be limited or expensive to collect in the actual testing environment. Therefore, we explore for the first time learning the cross-modality knowledge at the training stage, where both RGB and depth modalities are available, but testing on the target dataset, where only the depth modality exists. Our key idea is to distill the knowledge of scene structural guidance from the RGB modality to the single DSR task without changing its network architecture. Specifically, we construct an auxiliary depth estimation (DE) task that takes an RGB image as input to estimate a depth map, and train both the DSR task and the DE task collaboratively to boost the performance of DSR. Upon this, a cross-task interaction module is proposed to realize bilateral cross-task knowledge transfer. First, we design a cross-task distillation scheme that encourages DSR and DE networks to learn from each other in a teacher-student role-exchanging fashion. Then, we advance a structure prediction (SP) task that provides extra structure regularization to help both DSR and DE networks learn more informative structure representations for depth recovery. Extensive experiments demonstrate that our scheme achieves superior performance in comparison with other DSR methods.

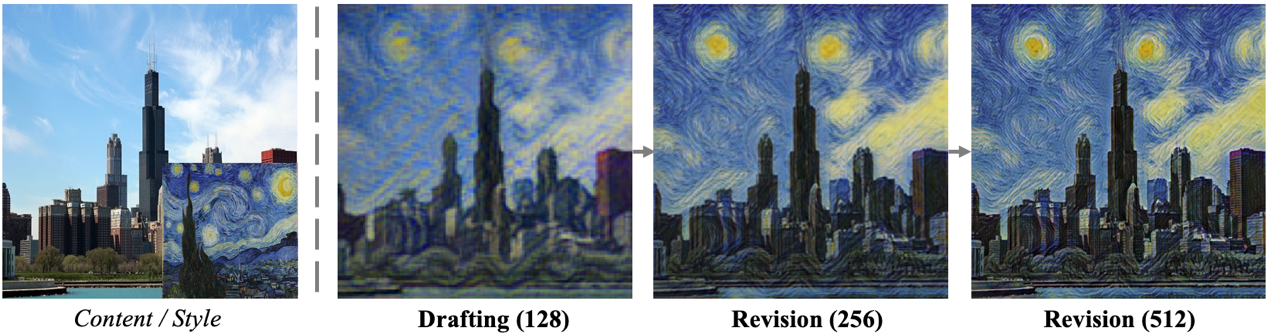

Drafting and Revision: Laplacian Pyramid Network for Fast High-Quality Artistic Style Transfer

Artistic style transfer aims at migrating the style from an example image to a content image. Currently, optimization-based methods have achieved great stylization quality, but expensive time cost restricts their practical applications. Meanwhile, feed-forward methods still fail to synthesize complex style, especially when holistic global and local patterns exist. Inspired by the common painting process of drawing a draft and revising the details, we introduce a novel feed-forward method named Laplacian Pyramid Network (LapStyle). LapStyle first transfers global style patterns in low-resolution via a Drafting Network. It then revises the local details in high-resolution via a Revision Network, which hallucinates a residual image according to the draft and the image textures extracted by Laplacian filtering. Higher resolution details can be easily generated by stacking Revision Networks with multiple Laplacian pyramid levels. The final stylized image is obtained by aggregating outputs of all pyramid levels. We also introduce a patch discriminator to better learn local patterns adversarially. Experiments demonstrate that our method can synthesize high quality stylized images in real time, where holistic style patterns are properly transferred.
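The draft-plus-residual idea rests on the Laplacian pyramid, which splits a signal into a coarse version and per-level detail residuals that add back up to the original. The sketch below is a deliberately simplified 1D version using pair averaging and sample repetition. It is not the LapStyle network, and the helper names and sample signal are hypothetical.

```python
def downsample(signal):
    """Halve the resolution by averaging adjacent pairs (length must be even)."""
    return [(signal[i] + signal[i + 1]) / 2.0 for i in range(0, len(signal), 2)]

def upsample(signal):
    """Double the resolution by repeating each sample."""
    return [v for v in signal for _ in range(2)]

def build_pyramid(signal, levels):
    """Return [residual_0, ..., residual_{levels-1}, coarsest]."""
    pyramid = []
    current = list(signal)
    for _ in range(levels):
        coarse = downsample(current)
        # The residual holds the detail lost by going to the coarser level.
        residual = [a - b for a, b in zip(current, upsample(coarse))]
        pyramid.append(residual)
        current = coarse
    pyramid.append(current)
    return pyramid

def reconstruct(pyramid):
    """Aggregate all levels: upsample the coarse draft and add each residual."""
    current = pyramid[-1]
    for residual in reversed(pyramid[:-1]):
        current = [u + r for u, r in zip(upsample(current), residual)]
    return current

signal = [1.0, 3.0, 2.0, 6.0, 5.0, 5.0, 0.0, 4.0]
pyramid = build_pyramid(signal, levels=2)
restored = reconstruct(pyramid)
```

In this analogy, the coarsest level plays the role of the low-resolution draft and each residual plays the role of the detail that a revision stage has to supply.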

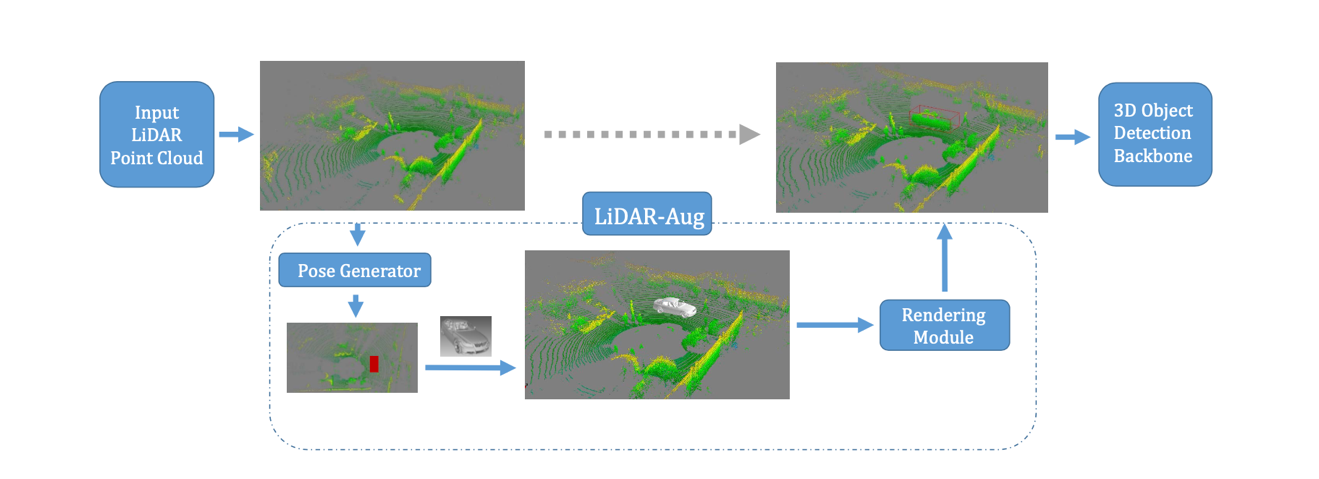

LiDAR-Aug: A General Rendering-based Augmentation Framework for 3D Object Detection

Annotating the LiDAR point cloud is crucial for deep learning-based 3D object detection tasks. Due to expensive labeling costs, data augmentation has been taken as a necessary module and plays an important role in training the neural network. “Copy” and “paste” (i.e., GT-Aug) is the most commonly used data augmentation strategy. However, it does not take the occlusion between objects into consideration. To handle this limitation, we propose a rendering-based LiDAR augmentation framework (i.e., LiDAR-Aug) to enrich the training data and boost the performance of LiDAR-based 3D object detectors. The proposed LiDAR-Aug is a plug-and-play module that can be easily integrated into different types of 3D object detection frameworks. Compared to the traditional object augmentation methods, LiDAR-Aug is more realistic and effective. Finally, we verify the proposed framework on the public KITTI dataset with different 3D object detectors. The experimental results show the superiority of our method compared to other data augmentation strategies. We plan to make our data and code public to help other researchers reproduce our results.
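To illustrate why occlusion matters when pasting objects into a scene, here is a toy sketch that merges object points into a bird's-eye-view scene and keeps only the nearest return in each angular bin around the sensor. This is a simple visibility test under strong assumptions (2D points, a single beam per bin, sensor at the origin). It is not the rendering pipeline of LiDAR-Aug, and the function names and points are hypothetical.

```python
import math

def azimuth_bin(point, num_bins):
    """Map an (x, y) point to an angular bin around the sensor at the origin."""
    angle = math.atan2(point[1], point[0]) % (2.0 * math.pi)
    return min(int(angle / (2.0 * math.pi) * num_bins), num_bins - 1)

def paste_with_occlusion(scene, obj, num_bins=360):
    """Merge object points into a scene, keeping the nearest point per bin.

    A point hidden behind a closer point in the same angular bin is dropped,
    which mimics a laser beam stopping at the first surface it hits.
    """
    nearest = {}
    for point in scene + obj:
        b = azimuth_bin(point, num_bins)
        r = math.hypot(point[0], point[1])
        if b not in nearest or r < nearest[b][0]:
            nearest[b] = (r, point)
    # Return the visible points ordered by distance from the sensor.
    return [p for _, p in sorted(nearest.values())]

# Hypothetical scene: the pasted object at (4, 0) hides the wall point at (10, 0).
scene = [(10.0, 0.0), (0.0, 5.0), (-3.0, 0.0)]
obj = [(4.0, 0.0)]
merged = paste_with_occlusion(scene, obj)
```

A plain copy-and-paste would keep both the object and the wall point behind it, producing a point cloud that a real sensor could not have recorded.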

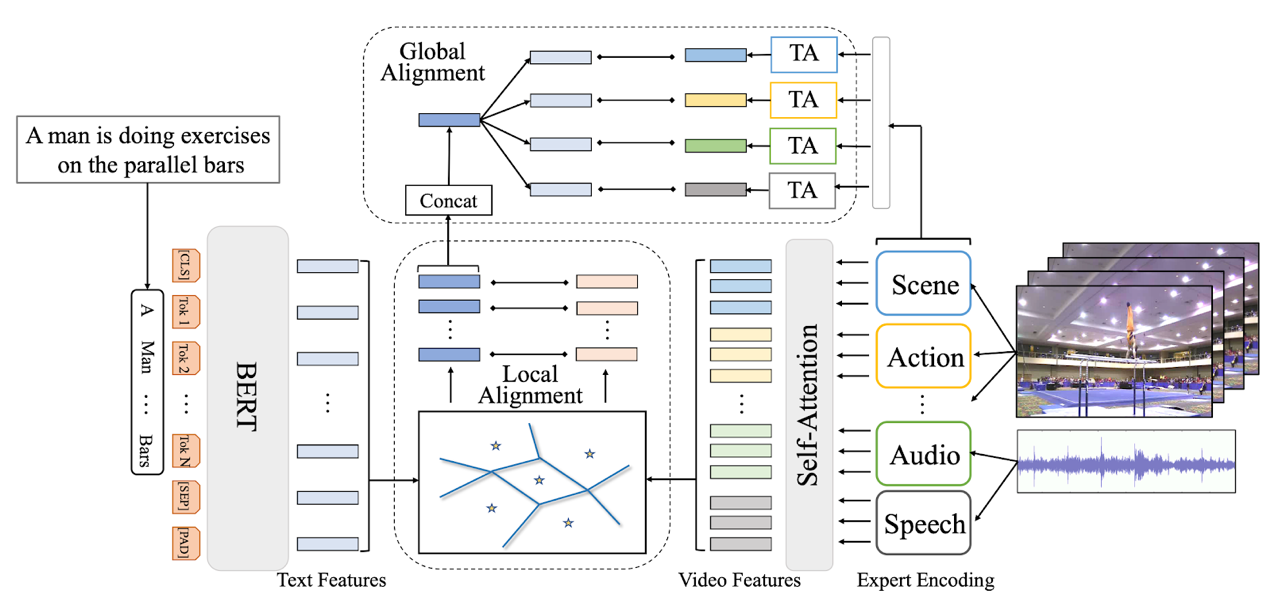

T2VLAD: Global-Local Sequence Alignment for Text-Video Retrieval

Text-video retrieval is a challenging task that aims to search for relevant video content based on natural language descriptions. The key to this problem is to measure text-video similarities in a joint embedding space. However, most existing methods only consider the global cross-modal similarity and overlook the local details. Some works incorporate local comparisons through cross-modal local matching and reasoning, but these complex operations introduce tremendous computation. In this paper, we design an efficient global-local alignment method. The multi-modal video sequences and text features are adaptively aggregated with a set of shared semantic centers. The local cross-modal similarities are computed between the video feature and text feature within the same center. This design enables meticulous local comparison and reduces the computational cost of the interaction between each text-video pair. Moreover, a global alignment method is proposed to provide a global cross-modal measurement that is complementary to the local perspective. The global aggregated visual features also provide additional supervision, which is indispensable to the optimization of the learnable semantic centers. We achieve consistent improvements on three standard text-video retrieval benchmarks and outperform the state-of-the-art by a clear margin.
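The local alignment step can be sketched as follows: features from each modality are softly assigned to a shared set of centers, aggregated per center, and then compared only within the same center. The sketch assumes a NetVLAD-style residual aggregation and cosine similarity. The exact formulation in the paper may differ, and all names, centers, and feature values below are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def aggregate(features, centers):
    """Softly assign each feature to the shared centers and sum the residuals."""
    dim = len(centers[0])
    out = [[0.0] * dim for _ in centers]
    for x in features:
        weights = softmax([dot(x, c) for c in centers])
        for k, (w, c) in enumerate(zip(weights, centers)):
            for d in range(dim):
                out[k][d] += w * (x[d] - c[d])
    return out

def cosine(a, b):
    na, nb = math.sqrt(dot(a, a)), math.sqrt(dot(b, b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot(a, b) / (na * nb)

def local_similarity(video_feats, text_feats, centers):
    """Average the per-center similarity between aggregated video and text."""
    v = aggregate(video_feats, centers)
    t = aggregate(text_feats, centers)
    return sum(cosine(vk, tk) for vk, tk in zip(v, t)) / len(centers)

# Hypothetical 2D features and two shared centers.
centers = [[1.0, 0.0], [0.0, 1.0]]
video = [[0.9, 0.1], [0.2, 0.8]]
text = [[-0.5, 0.3], [0.7, -0.9]]
sim_same = local_similarity(video, video, centers)
sim_other = local_similarity(video, text, centers)
```

Because the comparison happens once per center, the cost of scoring a text-video pair grows with the number of centers rather than with the number of frame-word pairs.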

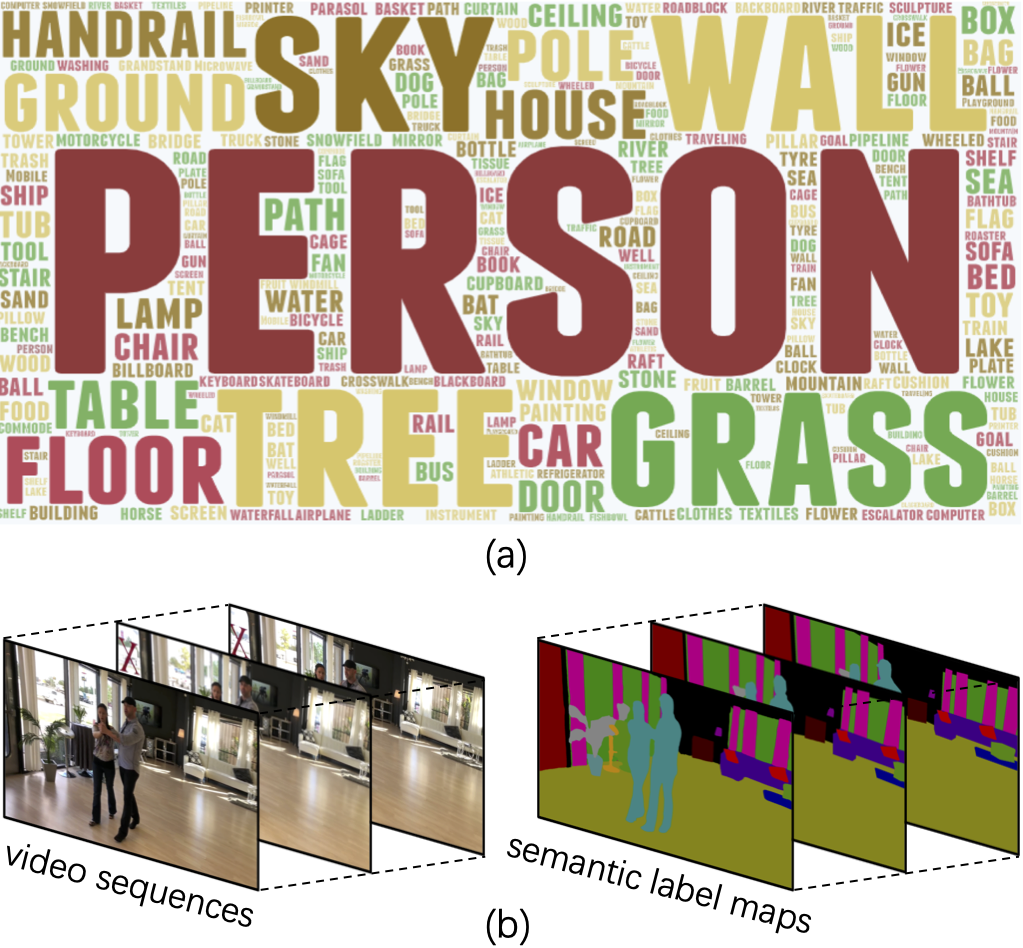

VSPW: A Large-scale Dataset for Video Scene Parsing in the Wild

In this paper, we present a new dataset with the target of advancing the scene parsing task from images to videos. Our dataset aims to perform Video Scene Parsing in the Wild (VSPW), which covers a wide range of real-world scenarios and categories. To be specific, our VSPW is featured from the following aspects: 1) Well-trimmed long-temporal clips. Each video contains a complete shot, lasting around 5 seconds on average. 2) Dense annotation. The pixel-level annotations are provided at a high frame rate of 15 f/s. 3) High resolution. Over 96% of the captured videos are with high spatial resolutions from 720P to 4K. In total, we annotate 3,536 videos, including 251,633 frames from 124 categories. To the best of our knowledge, our VSPW is the first attempt to tackle the challenging video scene parsing task in the wild by considering diverse scenarios. Based on VSPW, we design a generic Temporal Context Blending (TCB) network, which can effectively harness long-range contextual information from the past frames to help segment the current one. Extensive experiments show that our TCB network improves both the segmentation performance and temporal stability compared with image-/video-based state-of-the-art methods. We hope that the scale, diversity, long-temporal, and high frame rate of our VSPW can significantly advance the research of video scene parsing and beyond. The dataset is available at https://www.vspwdataset.com/.
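The headline numbers can be cross-checked with a little arithmetic. The snippet below assumes that every annotated frame was sampled at the stated 15 f/s, which the abstract implies but does not spell out.

```python
videos = 3536
frames = 251633
frames_per_second = 15

# Average number of annotated frames per video.
frames_per_video = frames / videos

# Average annotated duration per video, assuming 15 annotated frames per second.
seconds_per_video = frames_per_video / frames_per_second
```

This gives roughly 71 annotated frames and a little under 5 seconds per video, consistent with the "around 5 seconds on average" clip length.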

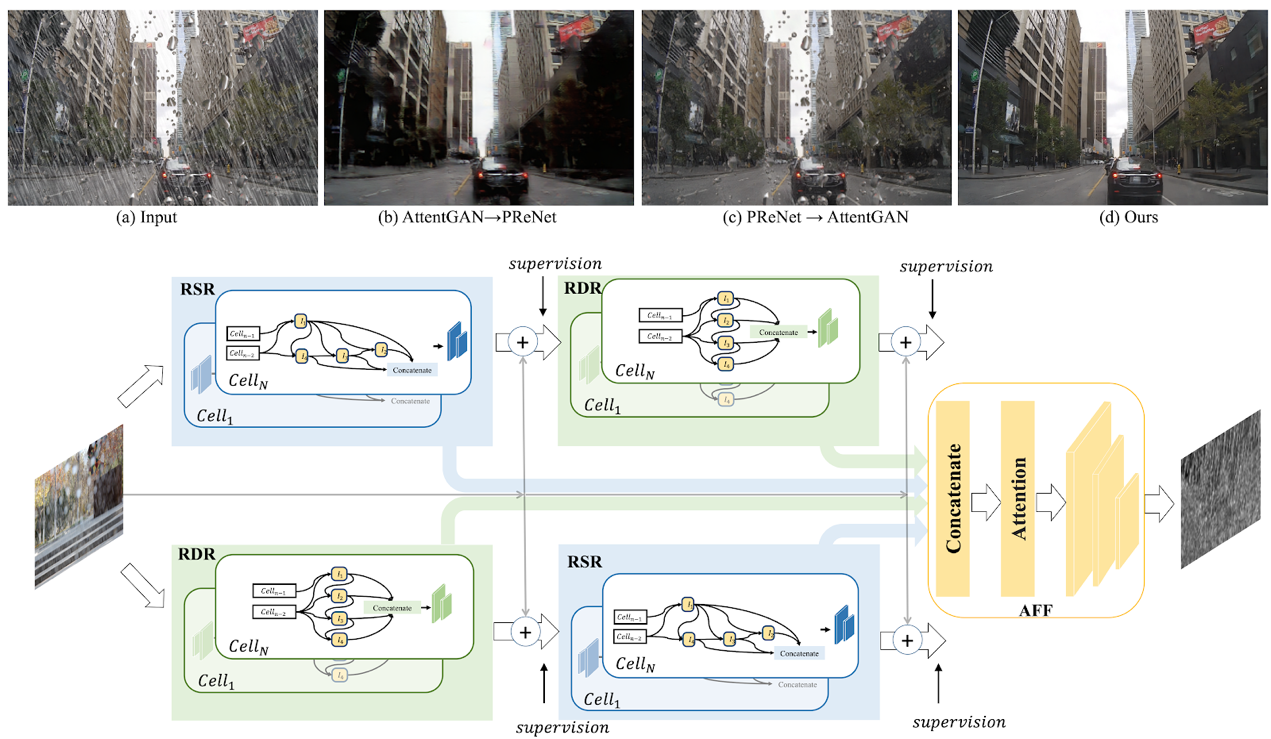

Removing Raindrops and Rain Streaks in One Go

Existing rain-removal algorithms often tackle either rain streak removal or raindrop removal, and thus may fail to handle real-world rainy scenes. Besides, the lack of real-world deraining datasets comprising different types of rain and their corresponding rain-free ground-truth also impedes deraining algorithm development. In this paper, we aim to address real-world deraining problems from two aspects. First, we propose a complementary cascaded network architecture, namely CCN, to remove rain streaks and raindrops in a unified framework. Specifically, our CCN removes raindrops and rain streaks in a complementary fashion, i.e., raindrop removal followed by rain streak removal and vice versa, and then fuses the results via an attention-based fusion module. Considering the significant shape and structure differences between rain streaks and raindrops, it is difficult to manually design a sophisticated network to remove them effectively. Thus, we employ neural architecture search to adaptively find optimal architectures within our specified deraining search space. Second, we present a new real-world rain dataset, namely RainDS, to advance the development of deraining algorithms in practical scenarios. RainDS consists of rain images of different types and their corresponding rain-free ground-truth, including rain streak only, raindrop only, and both of them. Extensive experimental results on both existing benchmarks and RainDS demonstrate that our method outperforms the state-of-the-art.

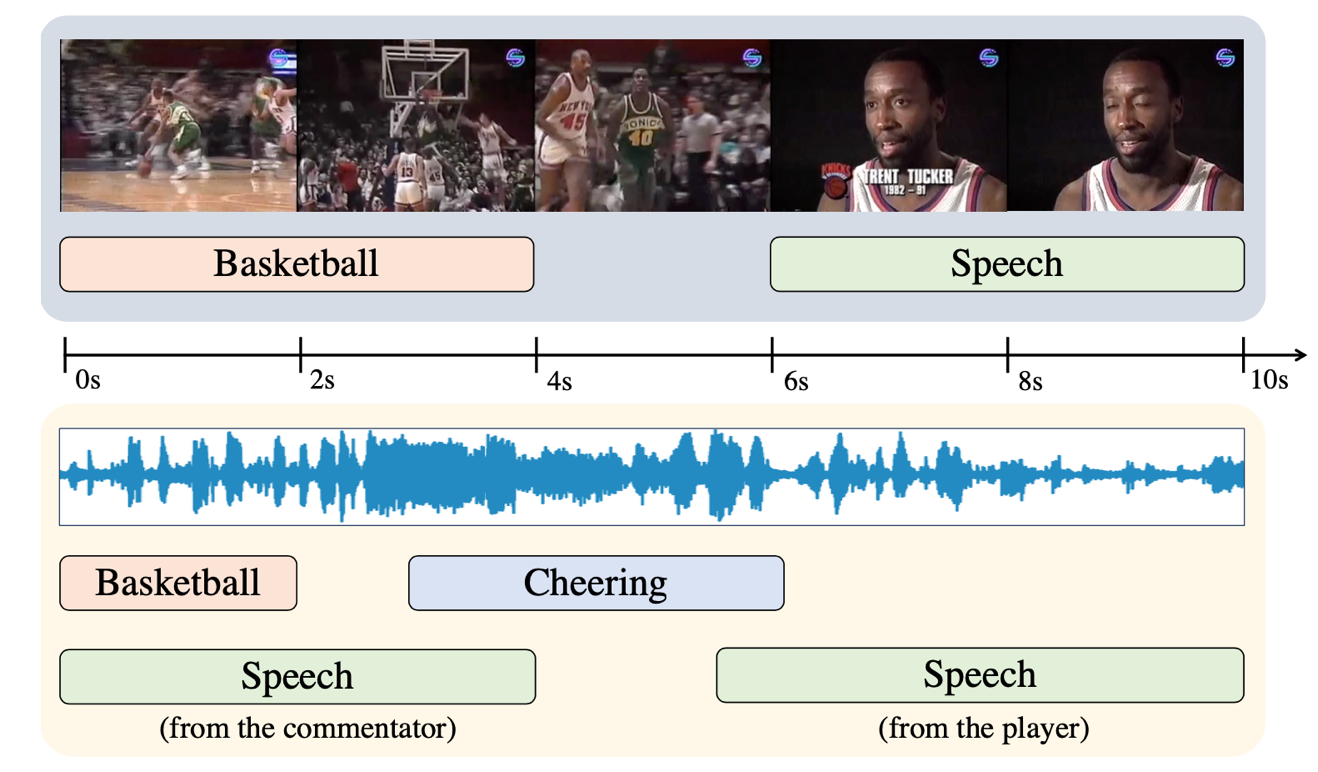

Exploring Heterogeneous Clues for Weakly-Supervised Audio-Visual Video Parsing

We investigate the weakly-supervised audio-visual video parsing task, which aims to parse a video into temporal event segments and predict the audible or visible event categories. The task is challenging since there only exist video-level event labels for training, without indicating the temporal boundaries and modalities. Previous works take the overall event labels to supervise both audio and visual model predictions. However, we argue that such overall labels harm the model training due to the audio-visual asynchrony. For example, commentators speak in a basketball video, but we cannot visually find the speakers. In this paper, we tackle this issue by leveraging the cross-modal correspondence of audio and visual signals. We generate reliable event labels individually for each modality by swapping audio and visual tracks with other unrelated videos. If the original visual/audio data contain event clues, the event prediction from the newly assembled data would still be highly confident. In this way, we could protect our models from being misled by ambiguous event labels. In addition, we propose the cross-modal audio-visual contrastive learning to induce temporal difference on attention models within videos, i.e., urging the model to pick the current temporal segment from all context candidates. Experiments show we outperform state-of-the-art methods by a large margin.
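The track-swapping idea can be sketched in a few lines. The sketch assumes a predictor that returns a per-event confidence for an (audio, visual) pair, and it uses a toy stand-in where each track is simply the set of events it contains. The function names, the threshold, and the toy data are hypothetical and not taken from the paper.

```python
def refine_modality_labels(video, unrelated, predict, labels, threshold=0.5):
    """Split video-level labels into audio and visual labels by track swapping."""
    audio_labels, visual_labels = set(), set()
    for event in labels:
        # Keep the visual track and replace the audio with an unrelated one.
        swapped_audio = predict(unrelated["audio"], video["visual"])
        if swapped_audio.get(event, 0.0) >= threshold:
            visual_labels.add(event)
        # Keep the audio track and replace the visual with an unrelated one.
        swapped_visual = predict(video["audio"], unrelated["visual"])
        if swapped_visual.get(event, 0.0) >= threshold:
            audio_labels.add(event)
    return audio_labels, visual_labels

def toy_predict(audio, visual):
    """Toy predictor: fully confident about any event present in either track."""
    return {event: 1.0 for event in audio | visual}

# The commentator is audible but never visible in the basketball video.
video = {"audio": {"speech", "basketball"}, "visual": {"basketball"}}
unrelated = {"audio": {"dog"}, "visual": {"dog"}}
audio_labels, visual_labels = refine_modality_labels(
    video, unrelated, toy_predict, labels={"speech", "basketball"}
)
```

Here "speech" survives only as an audio label, matching the commentator example: once the audio is swapped out, nothing in the visual track supports that event.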

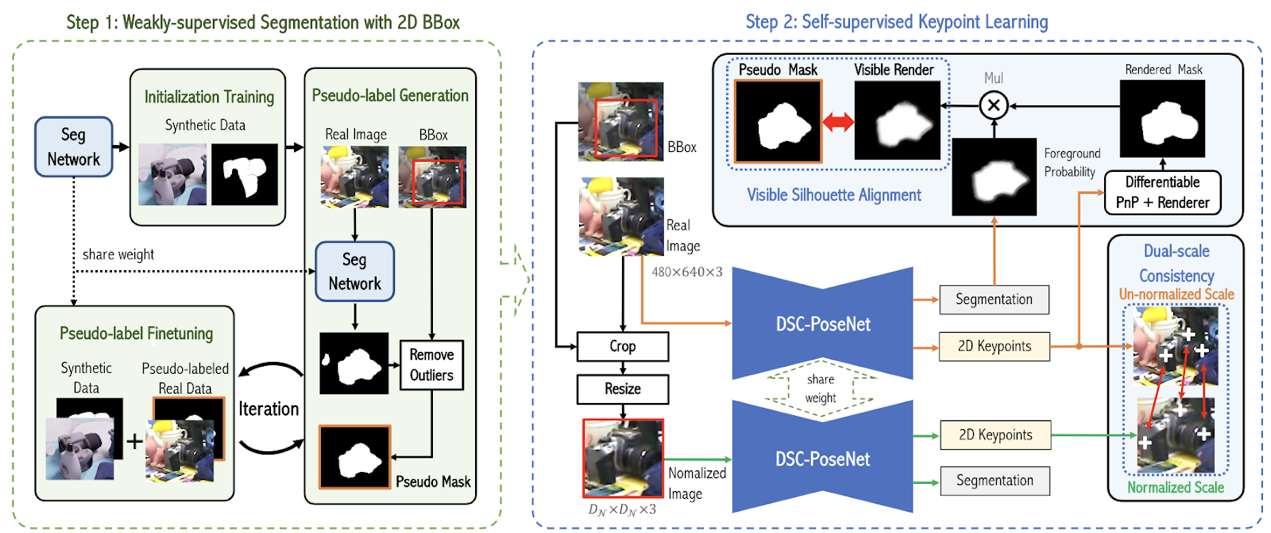

DSC-PoseNet: Learning 6DoF Object Pose Estimation via Dual-scale Consistency

Compared to 2D object bounding-box labeling, it is very difficult for humans to annotate 3D object poses, especially when depth images of scenes are unavailable. This paper investigates whether we can estimate the object poses effectively when only RGB images and 2D object annotations are given. To this end, we present a two-step pose estimation framework to attain 6DoF object poses from 2D object bounding-boxes. In the first step, the framework learns to segment objects from real and synthetic data in a weakly-supervised fashion, and the segmentation masks act as a prior for pose estimation. In the second step, we design a dual-scale pose estimation network, namely DSC-PoseNet, to predict object poses by employing a differential renderer. To be specific, our DSC-PoseNet first predicts object poses in the original image scale by comparing the segmentation masks and the rendered visible object masks. Then, we resize object regions to a fixed scale to estimate poses once again. In this fashion, we eliminate large scale variations and focus on rotation estimation, thus facilitating pose estimation. Moreover, we exploit the initial pose estimation to generate pseudo ground-truth to train our DSC-PoseNet in a self-supervised manner. The estimation results in these two scales are ensembled as our final pose estimation. Extensive experiments on widely-used benchmarks demonstrate that our method outperforms state-of-the-art models trained on synthetic data by a large margin and is even on par with several fully-supervised methods.

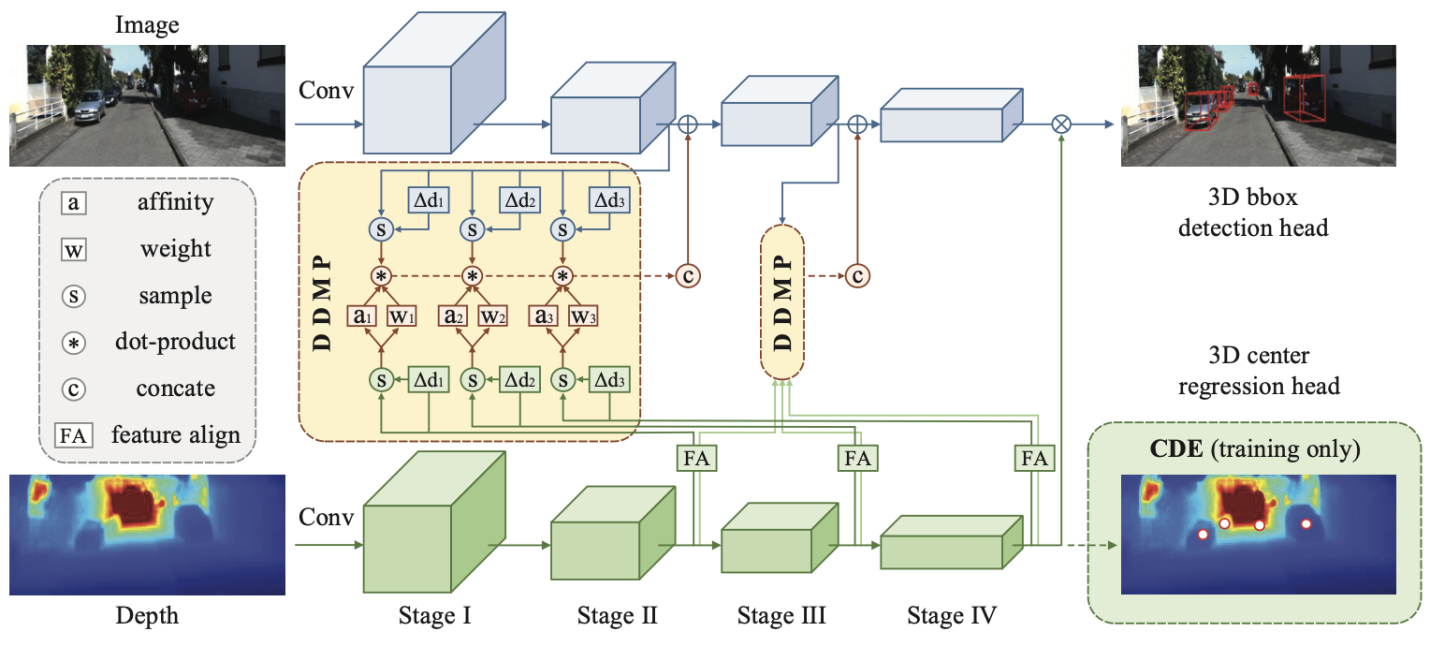

Depth-conditioned Dynamic Message Propagation for Monocular 3D Object Detection

The objective of this paper is to learn context- and depth-aware feature representation to solve the problem of monocular 3D object detection. We make the following contributions: (i) rather than appealing to the complicated pseudo-LiDAR based approach, we propose a depth-conditioned dynamic message propagation (DDMP) network to effectively integrate the multi-scale depth information with the image context; (ii) this is achieved by first adaptively sampling context-aware nodes in the image context and then dynamically predicting hybrid depth-dependent filter weights and affinity matrices for propagating information; (iii) by augmenting a center-aware depth encoding (CDE) task, our method successfully alleviates the inaccurate depth prior; (iv) we thoroughly demonstrate the effectiveness of our proposed approach and show state-of-the-art results among the monocular-based approaches on the KITTI benchmark dataset. In particular, we ranked 1st in the highly competitive KITTI monocular 3D object detection track on the submission day (November 16th, 2020). Code and models are released at this https URL.

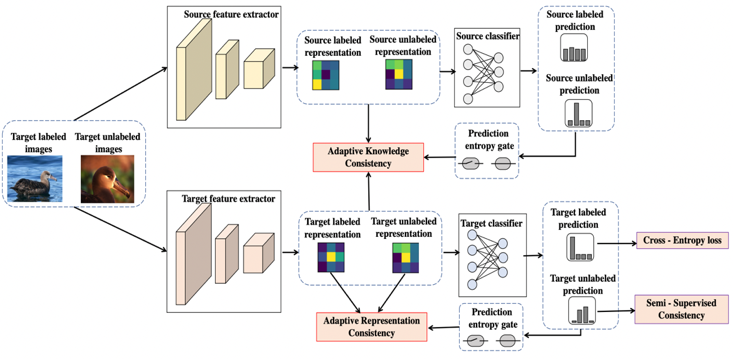

Adaptive Consistency Regularization for Semi-Supervised Transfer Learning

While recent studies on semi-supervised learning have shown remarkable progress in leveraging both labeled and unlabeled data, most of them presume a basic setting in which the model is randomly initialized. In this work, we consider semi-supervised learning and transfer learning jointly, leading to a more practical and competitive paradigm that can utilize both powerful pre-trained models from the source domain and labeled/unlabeled data in the target domain. To better exploit the value of both pre-trained weights and unlabeled target examples, we introduce adaptive consistency regularization that consists of two complementary components: Adaptive Knowledge Consistency (AKC) on the examples between the source and target model, and Adaptive Representation Consistency (ARC) on the target model between labeled and unlabeled examples. Examples involved in the consistency regularization are adaptively selected according to their potential contributions to the target task. We conduct extensive experiments on several popular benchmarks, including CUB-200-2011, MIT Indoor-67, and MURA, by fine-tuning the ImageNet pre-trained ResNet-50 model. Results show that our proposed adaptive consistency regularization outperforms state-of-the-art semi-supervised learning techniques such as Pseudo Label, Mean Teacher, and MixMatch. Moreover, our algorithm is orthogonal to existing methods and thus able to gain additional improvements on top of MixMatch and FixMatch. Our code is available at this https URL.
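As a rough illustration of consistency between a source and a target model with adaptive example selection, the sketch below averages a KL divergence over only those examples on which the source model is confident. The entropy threshold used as the selection rule is an assumption made for this sketch, since the abstract does not state the selection criterion. The function names and probabilities are hypothetical.

```python
import math

def entropy(p):
    """Shannon entropy of a probability vector, in nats."""
    return -sum(x * math.log(x) for x in p if x > 0.0)

def kl_divergence(p, q):
    """KL(p || q) for two probability vectors with matching support."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def knowledge_consistency(source_probs, target_probs, max_entropy):
    """Average KL(source || target) over examples the source model is sure about.

    Assumed selection rule: an example contributes only if the entropy of the
    source prediction is at most max_entropy.
    """
    total, kept = 0.0, 0
    for p_src, p_tgt in zip(source_probs, target_probs):
        if entropy(p_src) <= max_entropy:
            total += kl_divergence(p_src, p_tgt)
            kept += 1
    return total / kept if kept else 0.0

# Hypothetical predictions: the second example is too uncertain to be used.
source = [[0.9, 0.1], [0.5, 0.5]]
target = [[0.6, 0.4], [0.9, 0.1]]
loss = knowledge_consistency(source, target, max_entropy=0.5)
```

Only the first example contributes to the loss here, so an uncertain prediction from the pre-trained model cannot pull the target model in an arbitrary direction.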