2019-05-22

By Kainan Peng, Wei Ping, Zhao Song, Kexin Zhao

Text-to-Speech (TTS), also called speech synthesis, has a variety of applications, such as smart home devices, map navigation, and content creation. In recent years, neural TTS systems have achieved state-of-the-art results and attracted considerable attention.

Today, we're excited to announce the first parallel neural TTS system, the latest milestone in TTS research at Baidu. Previous neural TTS systems rely on autoregressive or recurrent neural networks to predict acoustic features and generate raw waveforms. During synthesis, these networks operate sequentially at the high temporal resolution of the data (e.g., 24 kHz), so they can be quite slow on modern hardware optimized for parallel execution. In this work, we introduce the first fully parallel neural TTS system by proposing a non-autoregressive seq2seq architecture. Notably, our system can synthesize speech from text through a single feed-forward pass.
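To make the sequential bottleneck concrete: at 24 kHz, an autoregressive waveform model must run 24,000 dependent steps for every second of audio. The toy Python sketch below contrasts the two dependency structures. `step` and `frame_fn` are hypothetical stand-ins for neural networks and are not part of our system; only the shape of the dependencies matters here.

```python
from typing import Callable, List


def autoregressive_generate(step: Callable[[List[float]], float], length: int) -> List[float]:
    # Each output depends on all previously generated outputs, so the loop
    # must run `length` times, strictly one step after another.
    outputs: List[float] = []
    for _ in range(length):
        outputs.append(step(outputs))
    return outputs


def non_autoregressive_generate(frame_fn: Callable[[int], float], length: int) -> List[float]:
    # Each output depends only on its position (and, in a real system, the
    # input text), never on other outputs. Every position could therefore be
    # computed at the same time, e.g. as one batched feed-forward pass.
    return [frame_fn(t) for t in range(length)]


if __name__ == "__main__":
    # Hypothetical toy "models" that happen to produce the same sequence.
    ar = autoregressive_generate(lambda prev: (prev[-1] if prev else 0.0) + 1.0, 5)
    nar = non_autoregressive_generate(lambda t: float(t + 1), 5)
    print(ar)   # [1.0, 2.0, 3.0, 4.0, 5.0]
    print(nar)  # [1.0, 2.0, 3.0, 4.0, 5.0]
```

Both functions produce the same sequence here, but only the second one has no chain of dependencies between outputs, which is the property a fully parallel TTS system exploits.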

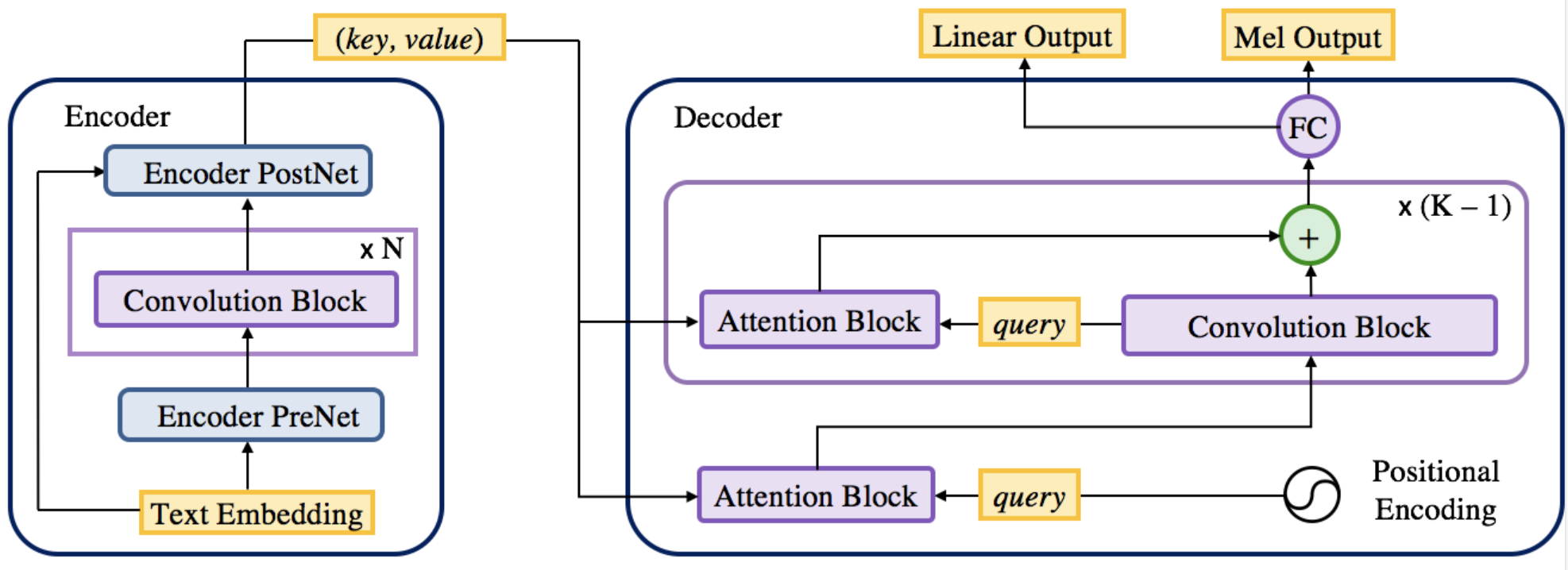

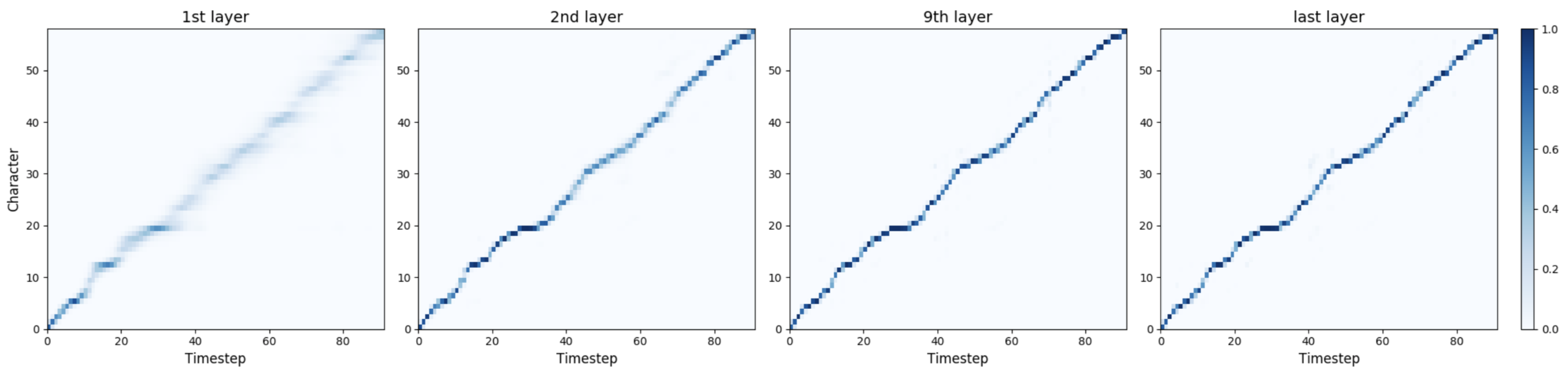

Our parallel neural TTS system has two components: 1) a novel feed-forward text-to-spectrogram model, and 2) a parallel waveform synthesizer conditioned on the spectrogram. In particular, we propose ParaNet, the first non-autoregressive attention-based architecture for TTS (see Figure 1), which is fully convolutional and converts text to a spectrogram. ParaNet iteratively refines the attention alignment between text and spectrogram layer by layer (see Figure 2). It achieves a ~46.7 times speed-up over its autoregressive counterpart while maintaining comparable speech quality. Interestingly, the non-autoregressive ParaNet produces even fewer attention errors on challenging test sentences than the state-of-the-art autoregressive model.

Figure 1. The encoder-decoder architecture of ParaNet.

Figure 2. ParaNet iteratively refines the attention and becomes more confident about the alignment between text and speech layer-by-layer.
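The toy Python sketch below illustrates, under simplifying assumptions, why attention can be computed for all spectrogram frames at once when the decoder is non-autoregressive: each frame's attention query is built only from its position (rescaled to the text length), not from previously generated frames. The 2-D sinusoidal encoding and the `rate` and `scale` values are hypothetical choices for illustration. The sketch covers a single attention layer and does not model ParaNet's layer-by-layer refinement.

```python
import math
from typing import List


def positional_encoding(position: float, rate: float) -> List[float]:
    # 2-D sinusoidal encoding; the dot product of two encodings equals
    # cos(rate * (p1 - p2)), which peaks when the positions match.
    return [math.sin(rate * position), math.cos(rate * position)]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def parallel_attention(num_text: int, num_frames: int,
                       rate: float = 0.5, scale: float = 10.0) -> List[List[float]]:
    # Keys: one positional encoding per text position (hypothetical stand-in
    # for encoder outputs).
    keys = [positional_encoding(i, rate) for i in range(num_text)]
    alignment = []
    for t in range(num_frames):
        # The query depends only on the frame index, rescaled onto the text
        # axis, so every row is independent and could be computed in parallel.
        query = positional_encoding(t * num_text / num_frames, rate)
        scores = [scale * (query[0] * k[0] + query[1] * k[1]) for k in keys]
        alignment.append(softmax(scores))
    return alignment


if __name__ == "__main__":
    A = parallel_attention(num_text=4, num_frames=8)
    # Text position with the highest attention weight for every other frame.
    print([row.index(max(row)) for row in A[::2]])  # [0, 1, 2, 3]
```

The resulting alignment is roughly diagonal and monotonic, which is the shape Figure 2 shows ParaNet converging toward; a trained model learns its queries and keys rather than using fixed encodings like these.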

We then apply the Gaussian inverse autoregressive flow (IAF), proposed in our previous work ClariNet, to synthesize raw waveforms from the spectrogram in parallel; like ParaNet, it is a feed-forward neural network. Furthermore, we explore a novel approach, WaveVAE, to train the IAF as a generative model for raw waveforms. In contrast to previous methods, WaveVAE avoids the need for distillation from a separately trained WaveNet and can be trained from scratch by using the Gaussian IAF as the decoder in the variational autoencoder (VAE) framework.
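A Gaussian IAF transforms white noise z into a waveform x via x_t = z_t * sigma_t + mu_t, where mu_t and sigma_t depend only on z_{<t}. The toy Python sketch below shows why sampling in this direction is parallel, while recovering z from x (needed to evaluate log p(x)) is sequential. `causal_params` is a hypothetical one-tap stand-in for the deep causal network of a real IAF, and a real system such as ClariNet stacks several flows; this is a single-flow illustration only.

```python
import math
import random
from typing import List, Tuple


def causal_params(prev_z: float) -> Tuple[float, float]:
    # Hypothetical one-tap "network": (mu_t, sigma_t) depend only on z_{t-1}.
    # A real IAF uses a deep causal convolutional network over all of z_{<t}.
    return 0.5 * prev_z, math.exp(0.1 * prev_z)  # exp keeps sigma_t > 0


def iaf_sample(z: List[float]) -> List[float]:
    # Sampling: (mu_t, sigma_t) are computed from the noise z, which is known
    # up front, and no x_t depends on another x. All positions can therefore
    # be computed in parallel (written as a plain loop here for clarity).
    x = []
    for t, zt in enumerate(z):
        mu_t, sigma_t = causal_params(z[t - 1] if t > 0 else 0.0)
        x.append(zt * sigma_t + mu_t)
    return x


def iaf_inverse(x: List[float]) -> List[float]:
    # Recovering z from x is inherently sequential: z_t can only be computed
    # after z_{t-1} is known.
    z: List[float] = []
    for xt in x:
        mu_t, sigma_t = causal_params(z[-1] if z else 0.0)
        z.append((xt - mu_t) / sigma_t)
    return z


def iaf_log_prob(x: List[float]) -> float:
    # Change of variables: dx/dz is lower-triangular with diagonal sigma_t,
    # so log p(x) = sum_t [log N(z_t; 0, 1) - log sigma_t].
    z = iaf_inverse(x)
    total = 0.0
    for t, zt in enumerate(z):
        _, sigma_t = causal_params(z[t - 1] if t > 0 else 0.0)
        total += -0.5 * zt * zt - 0.5 * math.log(2 * math.pi) - math.log(sigma_t)
    return total


if __name__ == "__main__":
    rng = random.Random(0)
    z = [rng.gauss(0.0, 1.0) for _ in range(6)]
    x = iaf_sample(z)
    # Round-trip error between the original and recovered noise (near zero).
    print(max(abs(a - b) for a, b in zip(z, iaf_inverse(x))))
    print(iaf_log_prob(x))
```

The sequential inverse is what makes training an IAF directly by maximum likelihood on waveforms slow, which is why ClariNet distills it from a WaveNet teacher; WaveVAE sidesteps the distillation step by training the IAF as the decoder of a VAE.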

For more details about our parallel neural TTS system, please check out our paper.

Audio samples are available at:

https://parallel-neural-tts-demo.github.io/