2020-07-08


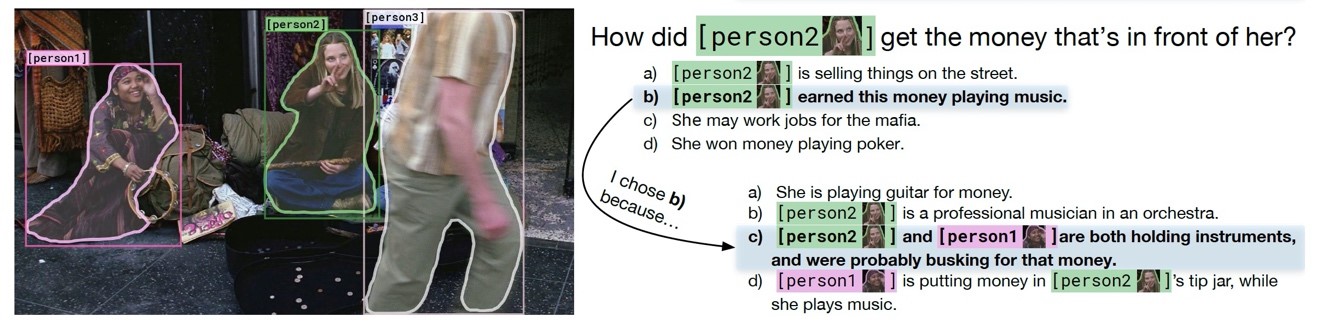

“How did person2 in the image above get the money that’s in front of her? And how did you justify your answer?”

With one glance at an image, humans can effortlessly reason about what’s happening and explain why: two women are sitting by the street, playing instruments to busk for money. The same task, however, remains difficult for today’s computer systems.

Visual Commonsense Reasoning (VCR), a dataset built by the University of Washington and the Allen Institute for Artificial Intelligence, collects 290K visual question-answering problems of this type to test an AI system’s commonsense understanding. The best AI system scored only 66.8, compared with human performance of 85.

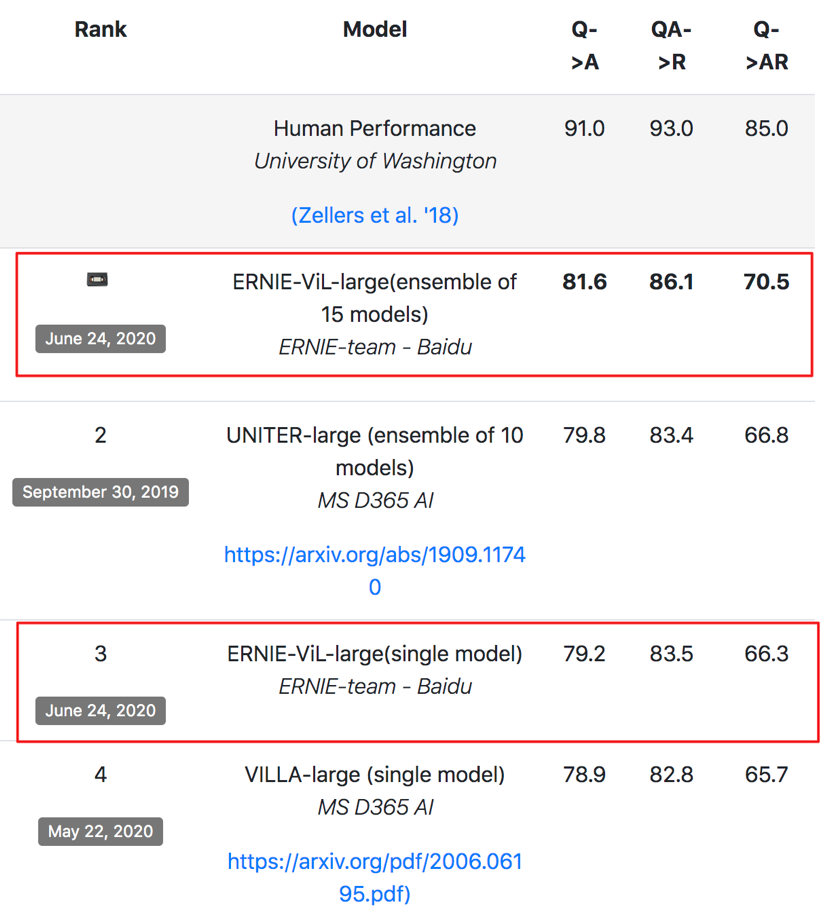

We are proud to introduce ERNIE-ViL, a knowledge-enhanced approach to learning joint representations of vision and language. To the best of our knowledge, ERNIE-ViL is the first model to introduce structured knowledge to enhance vision-language pre-training. After fine-tuning, ERNIE-ViL ranked first on the VCR leaderboard with an absolute improvement of 3.7% and achieved state-of-the-art performance on five vision-language downstream tasks.

You can read the ERNIE-ViL paper on arXiv. The model is implemented using Baidu’s deep learning platform PaddlePaddle. The code will soon be available on GitHub.

Why is visual understanding so important?

Visual understanding lays the foundation for computer systems to interact with the physical world in everyday scenes.

In 1966, Marvin Minsky, one of the greatest AI pioneers, asked his graduate student to spend the "summer linking a camera to a computer and getting the computer to describe what it saw." For the past half-century, visual understanding, the ability to understand and reason about the world from a scene, has been something of a holy grail for AI researchers.

Visual understanding goes well beyond merely recognizing and labeling objects. Researchers have spent years studying the algorithms a system needs to understand visual content and express that understanding in language.

The steady progress achieved in natural language processing (NLP), thanks to pre-training models like Google's BERT and Baidu's ERNIE, has motivated researchers to apply pre-training methods to learn joint representations of image content and natural language.

Existing vision-language models learn joint representations through visual grounding tasks on large image-text datasets, but they lack the capability of capturing detailed semantic information, such as objects, attributes, and relationships between objects.

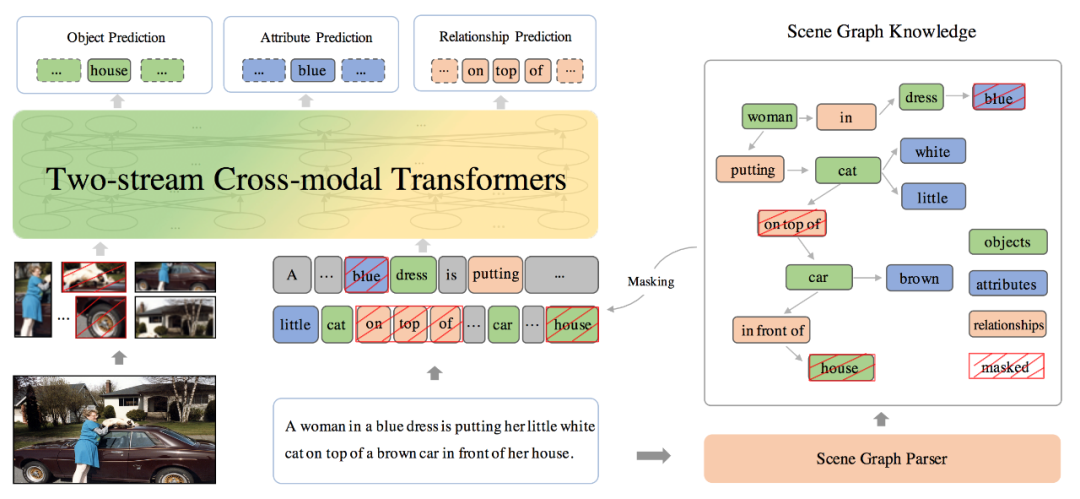

When humans describe the scene in the image above, they tend to focus on its important properties. For example, they notice objects such as a car, a person, a cat, or a house, and the attributes of those objects, such as "the cat is white" or "the car is brown." They also pick up semantic relationships between objects, such as "the cat on top of the car" or "the car in front of the house."

Our researchers borrowed a lesson from ERNIE, which achieved state-of-the-art (SOTA) results on a variety of NLP tasks by learning structured knowledge such as phrases and named entities instead of individual sub-words. Applying the same idea, they incorporated knowledge from scene graphs to build better and more robust joint representations of vision and language.
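To make the contrast concrete, here is a minimal sketch of sub-word masking versus ERNIE-style masking of whole phrases. The caption, the phrase spans, and the function names are hypothetical and chosen only for illustration; ERNIE's actual masking pipeline is not reproduced here.

```python
import random
from typing import List, Sequence, Tuple

MASK = "[MASK]"

def mask_subwords(tokens: Sequence[str], ratio: float, rng: random.Random) -> List[str]:
    """Sub-word-level masking: each token is chosen independently."""
    k = max(1, round(len(tokens) * ratio))
    chosen = set(rng.sample(range(len(tokens)), k))
    return [MASK if i in chosen else t for i, t in enumerate(tokens)]

def mask_phrases(tokens: Sequence[str], spans: Sequence[Tuple[int, int]],
                 ratio: float, rng: random.Random) -> List[str]:
    """Knowledge-level masking: each chosen phrase [start, end) is masked as a whole."""
    k = max(1, round(len(spans) * ratio))
    out = list(tokens)
    for start, end in rng.sample(list(spans), k):
        for i in range(start, end):
            out[i] = MASK
    return out

# Hypothetical caption, already segmented into phrases.
tokens = ["a", "white", "cat", "on", "top", "of", "a", "brown", "car"]
spans = [(1, 3), (3, 6), (7, 9)]  # "white cat", "on top of", "brown car"

rng = random.Random(0)
print(mask_subwords(tokens, 0.3, rng))
print(mask_phrases(tokens, spans, 0.3, rng))
```

With phrase-level masking, the model cannot guess "cat" simply from the neighboring "white", because the whole phrase is hidden at once; it has to rely on the wider context instead.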

Scene graph prediction

A scene graph is a graph structure whose nodes describe the contents of a visual scene: objects, their attributes, and the relationships between objects. Using the scene graph parsed from the sentence that accompanies each image, our researchers created three pre-training tasks, Object Prediction, Attribute Prediction, and Relationship Prediction, to learn fine-grained alignments between image and language semantics.
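As a rough illustration, the scene graph for the cat-and-car description above could be stored as three lists of nodes. The class and field names below are hypothetical, not ERNIE-ViL's internal format, and the parser that would produce such a graph from a sentence is out of scope.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class SceneGraph:
    """Hypothetical container for a scene graph parsed from one sentence."""
    objects: List[str] = field(default_factory=list)
    # (object, attribute) pairs, e.g. ("cat", "white")
    attributes: List[Tuple[str, str]] = field(default_factory=list)
    # (subject, relationship, object) triples, e.g. ("cat", "on top of", "car")
    relationships: List[Tuple[str, str, str]] = field(default_factory=list)

    def phrases(self) -> List[str]:
        """Render attribute and relationship nodes back into short phrases."""
        out = [f"{attr} {obj}" for obj, attr in self.attributes]
        out += [f"{subj} {rel} {obj}" for subj, rel, obj in self.relationships]
        return out

graph = SceneGraph(
    objects=["cat", "car", "house"],
    attributes=[("cat", "white"), ("car", "brown")],
    relationships=[("cat", "on top of", "car"), ("car", "in front of", "house")],
)
print(graph.phrases())
# ['white cat', 'brown car', 'cat on top of car', 'car in front of house']
```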

Specifically, in the Object Prediction task, researchers randomly masked 30% of the object nodes in the scene graph, such as “house” in the image above, and trained the model to predict them, much like a visual cloze test.
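A minimal sketch of how Object Prediction inputs might be built, assuming the parser has already linked each object node to its word position in the caption. We assume the cloze is posed by masking the object's word in the sentence and keeping it as a prediction target; the caption, the positions, and the rounding rule are hypothetical. The model itself, which would predict each target from the image and the unmasked words, is not shown.

```python
import random
from typing import Dict, List, Sequence, Tuple

MASK = "[MASK]"

def mask_object_nodes(tokens: Sequence[str],
                      object_nodes: Sequence[Tuple[str, int]],
                      ratio: float = 0.3,
                      seed: int = 0) -> Tuple[List[str], Dict[int, str]]:
    """Mask roughly `ratio` of the object nodes; return (masked tokens, targets).

    `object_nodes` pairs each object word with its (assumed) position in `tokens`.
    `targets` maps each masked position to the word the model must recover.
    """
    rng = random.Random(seed)
    # Mask at least one node per sentence (an assumption for short captions).
    k = max(1, round(len(object_nodes) * ratio))
    masked = list(tokens)
    targets: Dict[int, str] = {}
    for word, pos in rng.sample(list(object_nodes), k):
        masked[pos] = MASK
        targets[pos] = word
    return masked, targets

tokens = "a white cat on top of a brown car in front of a house".split()
objects = [("cat", 2), ("car", 8), ("house", 13)]
masked, targets = mask_object_nodes(tokens, objects)
print(masked)
print(targets)
```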

The Attribute Prediction task works the same way: the model must recover 30% of the attribute nodes after they are masked, such as “blue” in “blue dress” in the image above. In the Relationship Prediction task, researchers kept the objects and masked out the relationship node between them, such as “on top of” in “the cat on top of the car”.
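The same idea extends to attribute and relationship nodes, which may span several words. In the sketch below (again with hypothetical names and caption), relationship masking hides only the words of the relation, such as "on top of", so the object words on either side stay visible as context. Applying a 30% ratio to relationships as well is our assumption, carried over from the other two tasks.

```python
import random
from typing import Dict, List, Sequence, Tuple

MASK = "[MASK]"
Span = Tuple[int, int]  # [start, end) token positions

def mask_spans(tokens: Sequence[str], nodes: Sequence[Tuple[str, Span]],
               ratio: float = 0.3, seed: int = 0) -> Tuple[List[str], Dict[Span, str]]:
    """Mask roughly `ratio` of the given nodes; each node may cover several tokens."""
    rng = random.Random(seed)
    k = max(1, round(len(nodes) * ratio))
    masked = list(tokens)
    targets: Dict[Span, str] = {}
    for label, (start, end) in rng.sample(list(nodes), k):
        for i in range(start, end):
            masked[i] = MASK
        targets[(start, end)] = label
    return masked, targets

tokens = "a white cat on top of a brown car in front of a house".split()
attribute_nodes = [("white", (1, 2)), ("brown", (7, 8))]
relationship_nodes = [("on top of", (3, 6)), ("in front of", (9, 12))]

# Attribute Prediction: hide an attribute, keep the object it describes.
print(mask_spans(tokens, attribute_nodes)[0])
# Relationship Prediction: hide the relation words, keep both objects.
print(mask_spans(tokens, relationship_nodes, ratio=1.0)[0])
```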

ERNIE-ViL is essentially a two-stream cross-modal Transformer network. Similar to ViLBERT from Facebook AI, ERNIE-ViL consists of two parallel BERT-style models, one operating over image regions and the other over text segments, which exchange information through cross-modal attention layers.
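The cross-modal part of a two-stream design can be sketched as a co-attention step in which each stream queries the other. The version below is deliberately simplified, single-head, and in plain Python: it omits the learned query/key/value projections, residual connections, and feed-forward layers that a real ViLBERT-style layer has, and the toy feature values are made up.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]

def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: each query row becomes a weighted mix of value rows."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out

def co_attention(text: Matrix, image: Matrix) -> Tuple[Matrix, Matrix]:
    """One co-attention step: text attends over image regions and vice versa."""
    text_out = attention(text, image, image)   # text queries, image keys/values
    image_out = attention(image, text, text)   # image queries, text keys/values
    return text_out, image_out

# Toy features: 2 text tokens and 3 image regions, each of dimension 4.
text = [[1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.3, 0.1]]
image = [[0.9, 0.1, 0.4, 0.0], [0.1, 0.8, 0.2, 0.3], [0.5, 0.5, 0.5, 0.5]]
text_out, image_out = co_attention(text, image)
print(len(text_out), len(text_out[0]))    # 2 4
print(len(image_out), len(image_out[0]))  # 3 4
```

Each stream keeps its own sequence length and only borrows information from the other through attention, which is what lets the image and text encoders remain separate.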

In addition to the three scene graph prediction tasks, ERNIE-ViL is also trained with Masked Language Modelling (MLM), Masked Region Prediction, and Image-Text Matching.
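One way to write the combined objective is shown below. That the six losses are simply summed with equal weight is our assumption, not a detail stated here; the object term is written as a cloze-style negative log-likelihood, and the attribute and relationship terms would take the same form with the masked attribute or relationship words in place of the object word.

```latex
% w: caption tokens, v: image region features, D: image-text pairs
% w_{o_i}: a masked object word; w_{\setminus w_{o_i}}: the remaining words
\mathcal{L} = \mathcal{L}_{\text{obj}} + \mathcal{L}_{\text{attr}} + \mathcal{L}_{\text{rel}}
            + \mathcal{L}_{\text{MLM}} + \mathcal{L}_{\text{MRP}} + \mathcal{L}_{\text{ITM}},
\qquad
\mathcal{L}_{\text{obj}} = -\,\mathbb{E}_{(\mathbf{w},\mathbf{v})\sim D}
  \log P\!\left(w_{o_i} \mid \mathbf{w}_{\setminus w_{o_i}}, \mathbf{v}\right)
```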

Evaluations

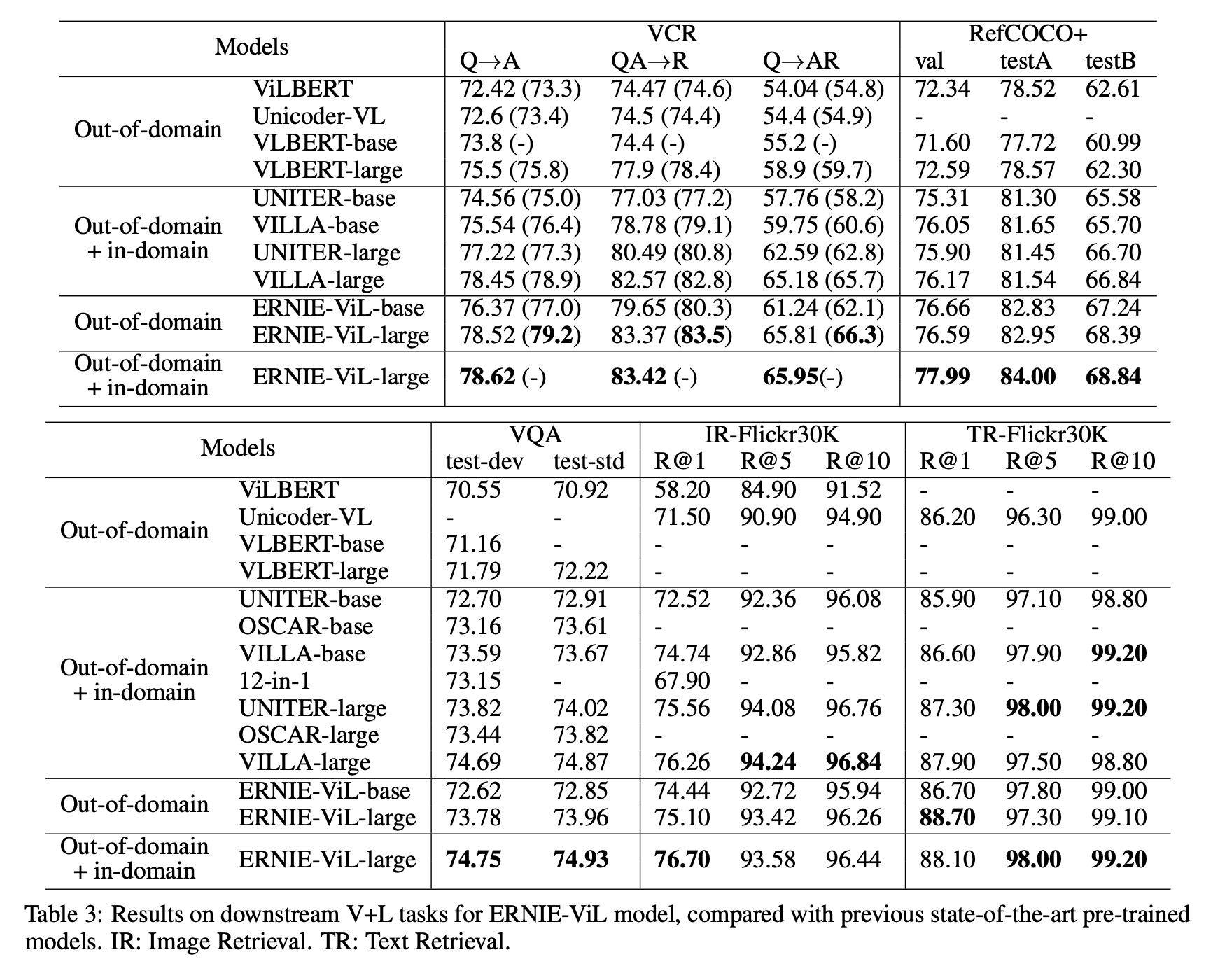

In the experiments, our researchers compared the pre-trained ERNIE-ViL model against other cross-modal pre-trained models. ERNIE-ViL achieved state-of-the-art results on all downstream tasks, including Visual Question Answering (VQA), Image Retrieval (IR), Text Retrieval (TR), and Referring Expressions Comprehension (RefCOCO+).

VCR contains two multiple-choice subtasks, visual question answering (Q→A) and answer justification (QA→R): a model must not only answer a commonsense visual question but also choose a rationale that explains why the answer is true. ERNIE-ViL is the best single model on VCR, scoring 79.2 on Q→A, 83.5 on QA→R, and 66.3 on the combined Q→AR metric.
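The relationship between the three VCR numbers can be sketched as follows. In Q→AR, a question counts as correct only when both the chosen answer and the chosen rationale are right, so that score can never exceed either of the other two. The function name and the four-question toy data are hypothetical; the rationale predictions are assumed to come from a model that was shown the correct answer, as in VCR's QA→R setting.

```python
from typing import Dict, Sequence

def vcr_scores(answer_pred: Sequence[int], answer_gold: Sequence[int],
               rationale_pred: Sequence[int], rationale_gold: Sequence[int]) -> Dict[str, float]:
    """Return Q->A, QA->R and Q->AR accuracies (in percent) for multiple-choice predictions."""
    n = len(answer_gold)
    answer_ok = [p == g for p, g in zip(answer_pred, answer_gold)]
    rationale_ok = [p == g for p, g in zip(rationale_pred, rationale_gold)]
    return {
        "Q->A": 100 * sum(answer_ok) / n,
        "QA->R": 100 * sum(rationale_ok) / n,
        # A question counts for Q->AR only if both choices are right.
        "Q->AR": 100 * sum(a and r for a, r in zip(answer_ok, rationale_ok)) / n,
    }

scores = vcr_scores(answer_pred=[0, 1, 2, 3], answer_gold=[0, 1, 2, 0],
                    rationale_pred=[1, 1, 0, 2], rationale_gold=[1, 0, 0, 2])
print(scores)  # {'Q->A': 75.0, 'QA->R': 75.0, 'Q->AR': 50.0}
```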

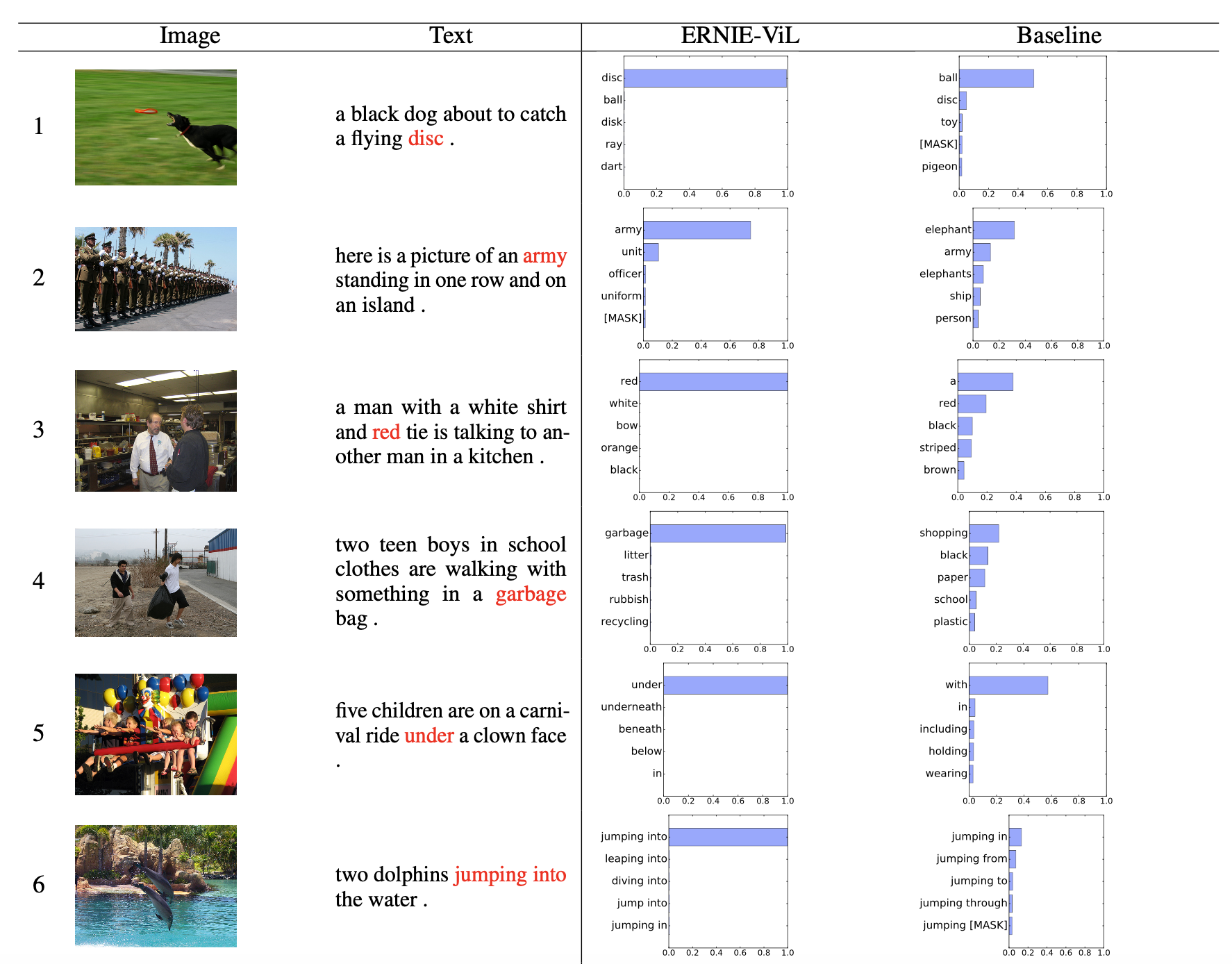

Researchers also assessed the effectiveness of incorporating scene graph knowledge using vision-language cloze tests. Compared with a baseline model pre-trained without the scene graph prediction tasks, ERNIE-ViL shows absolute improvements of 2.12% for objects, 1.31% for relationships, and 6.00% for attributes. As shown in the illustration above, ERNIE-ViL delivers more accurate predictions with higher confidence than the baseline model in most cases.

We believe scene graphs hold further potential for multimodal pre-training. In future work, scene graphs extracted from images, rather than only those parsed from text, could also be incorporated into cross-modal pre-training. Graph Neural Networks could also be used to integrate more structured knowledge.