2019-06-18

Back to listThis blog post introduces our recent publication in ACL 2019.

Bilingual Lexicon Induction (BLI) is the task of translating words from non-parallel corpora in two languages. The non-parallelism is a major challenge, in the sense that no sentence-to-sentence translation exists between the two corpora. This fact also signifies the usefulness of BLI for building machine translation systems, when parallel corpora are scarce, e.g., minority languages.

A Typical Approach for BLI

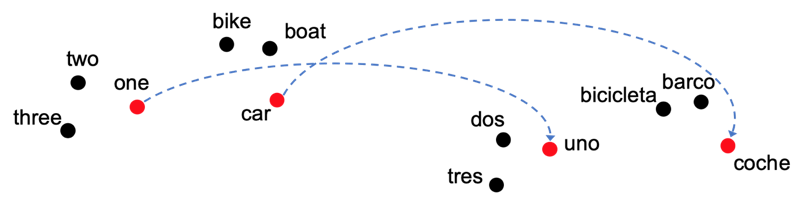

State-of-the-art methods resort to word embeddings, as there is an approximate isometry between the embedding spaces of the two languages. A typical approach works by learning an orthogonal mapping that aligns some given translation pairs (called seeds). Then more translations are induced by Nearest Neighbor (NN) search in the “aligned” embedding space. Figure 1 illustrates the idea. Note that the two spaces share some similar local structures.

Figure 1 The word embedding spaces of English (left) and Spanish (right). In red are seeds. The task is to infer correspondence for the remaining words. Dashed curves picturize the learned mapping, which may bring an English word closest to its translation, amongst all Spanish words. For example, bike may be mapped to be very close to bicicleta.

Hubness Nearest Neighbor Search

The NN search is a crucial step for BLI. However, NN is often degraded by a phenomenon called hubness. Hubness is a tendency that some data points, called "hubs", are suspiciously close to many others. As a result, NN search may retrieve these "hubby" words more often than they should be. For example, in a Portuguese-to-English task, word "these" is retrieved as a top-5 translation candidate for 1,042 Portuguese words. Obviously it is more popular than should be.

The proposed method, Hubless Nearest Neighbor (HNN) search hinges upon an Equal Preference Assumption. Essentially, it is a constraint that all target words are equally likely to be retrieved as a translation candidate. The retrieval task is then re-formulated into a linear assignment problem. By relaxing its objective function, we further propose an efficient solver in the paper.

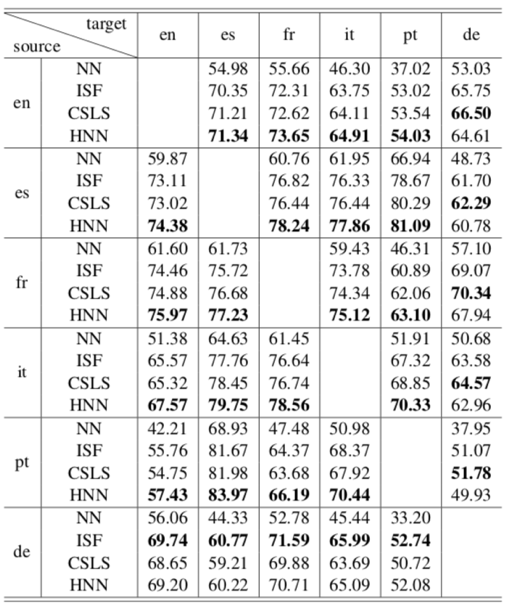

Table 1 reports extensive results on 6 European languages. The compared are HNN, NN and two other state-of-the-art alternatives. In most cases, HNN achieves the best accuracy. The only exceptions are cases where German (de) is involved. In fact, these cases are where the Equal Preference Assumption is violated the most.

Table 1 Accuracies of BLI on 6 European languages, using different retrieval methods. NN is the baseline. ISF and CSLS are two state-of-the-art competitors.

More details can be found in our paper https://huangjiaji.github.io/resource/HNN.pdf

Code to reproduce major results is published at https://github.com/baidu-research/HNN

Future works

As discussed by the last section, hubness is a commonly seen phenomenon in high dimensional space. The word embedding space is just one example. In that sense, the proposed HNN is a general technique that may benefit any multi-dimensional retrieval task. For example, zero-shot image classification. We hope the proposed HNN can spawn interesting future works in multiple areas, including computational linguistics, computer vision and so on.