2017-10-24

Today, we are excited to announce Deep Voice 3, the latest milestone of Baidu Research’s Deep Voice project. Deep Voice 3 teaches machines to speak by imitating thousands of human voices from people across the globe.

The Deep Voice project was started to revolutionize human-technology interactions by applying modern deep learning techniques to artificial speech generation. In February 2017, we launched Deep Voice 1, which focused on building the first entirely neural text-to-speech system that could operate in real time – fast enough for efficient deployment. In May 2017, we released Deep Voice 2, with substantial improvements over Deep Voice 1 and, more importantly, the ability to reproduce several hundred voices using the same system.

Deep Voice 3 introduces a completely novel neural network architecture for speech synthesis. This architecture trains an order of magnitude faster, allowing us to scale to over 800 hours of training data and synthesize speech from over 2,400 voices, more than any other previously published text-to-speech model.

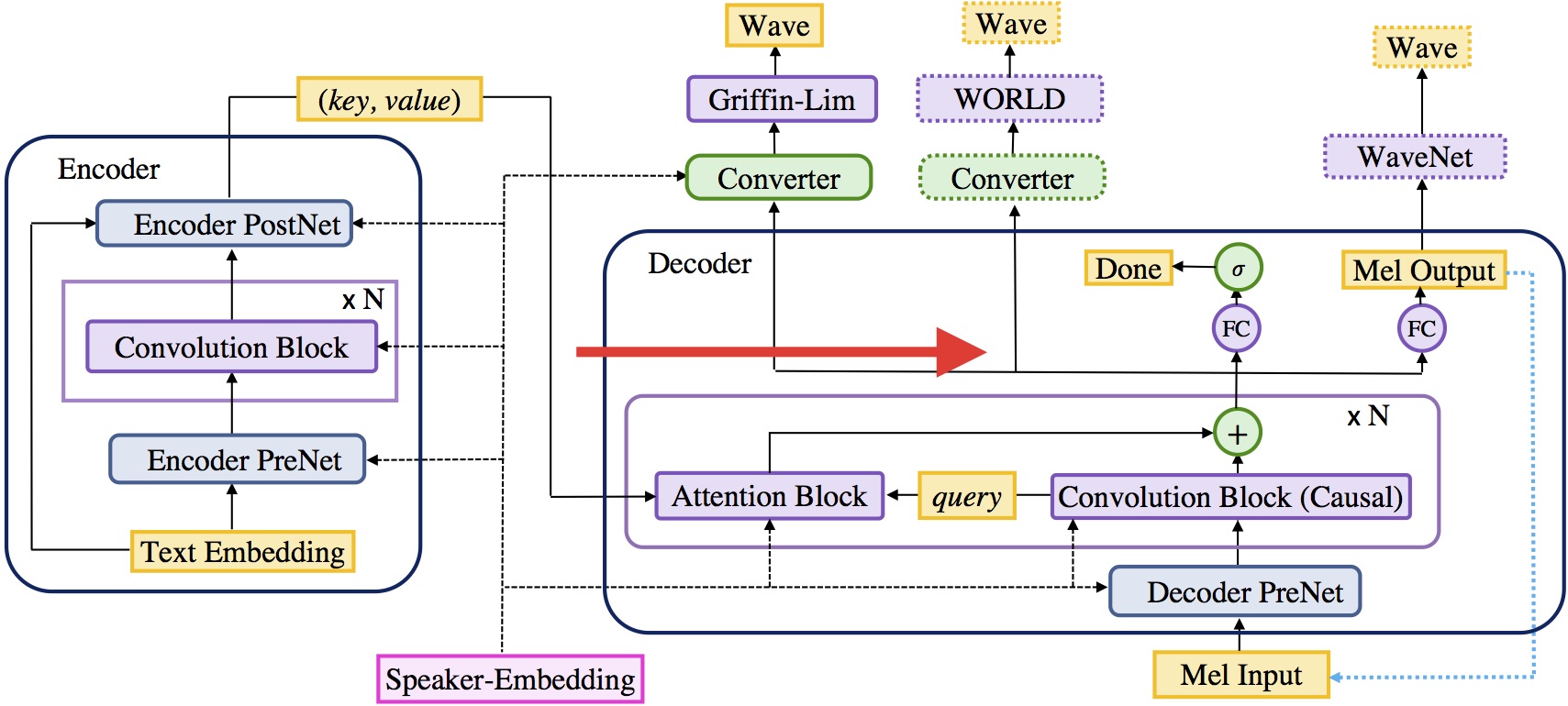

Fig. 1 Deep Voice 3 architecture.

The Deep Voice 3 architecture (see Fig. 1) is a fully-convolutional sequence-to-sequence model which converts text to spectrograms or other acoustic parameters to be used with an audio waveform synthesis method. We use low-dimensional speaker embeddings to model the variability among the thousands of different speakers in the dataset.
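To make the idea of low-dimensional speaker embeddings more concrete, here is a minimal sketch in plain Python. It assumes a lookup table with one small vector per speaker and a simple additive conditioning step. The function names, the embedding size of 16, and the additive scheme are illustrative assumptions, not details taken from the Deep Voice 3 implementation.

```python
import random

def make_speaker_table(num_speakers, dim, seed=0):
    """Create one small random vector per speaker (in a real system these are learned)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)]
            for _ in range(num_speakers)]

def condition_on_speaker(hidden, speaker_vec):
    """Add the speaker vector to every time step of a hidden sequence.

    `hidden` is a list of frames, each frame a list of floats with the
    same length as `speaker_vec`. Additive conditioning is an assumption
    made for this sketch.
    """
    return [[h + s for h, s in zip(frame, speaker_vec)] for frame in hidden]

# Hypothetical sizes: 2484 speakers, 16-dimensional embeddings, 5 time steps.
table = make_speaker_table(num_speakers=2484, dim=16)
hidden = [[0.0] * 16 for _ in range(5)]

out_a = condition_on_speaker(hidden, table[0])
out_b = condition_on_speaker(hidden, table[1])
```

The same hidden sequence produces different activations for different speakers, which is how a single shared model could represent many voices with only a small per-speaker vector.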

A sequence-to-sequence model like Deep Voice 3 consists of an encoder, which maps the input to embeddings containing information relevant to the output, and a decoder, which generates the output from these embeddings. Unlike most sequence-to-sequence architectures, which process data sequentially using recurrent cells, Deep Voice 3 is entirely convolutional, which allows the computation to be parallelized and results in very fast training.
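The difference between recurrent and convolutional processing can be illustrated with a toy example in plain Python. This is only a sketch of the general principle, not the Deep Voice 3 layers themselves: the scalar inputs, the kernel, and the function names are assumptions chosen for brevity. Each convolution output depends on a fixed window of inputs, so positions can be computed in any order (and therefore in parallel), while each recurrent state needs the previous one.

```python
def conv_output_at(xs, kernel, t):
    """Causal 1-D convolution output at position t (implicit zero padding on the left)."""
    k = len(kernel)
    total = 0.0
    for i, w in enumerate(kernel):
        src = t - (k - 1) + i  # input index covered by kernel tap i
        if src >= 0:
            total += w * xs[src]
    return total

def conv1d(xs, kernel, order=None):
    """Compute all outputs; `order` shows that positions are independent of each other."""
    positions = list(order) if order is not None else range(len(xs))
    out = [None] * len(xs)
    for t in positions:
        out[t] = conv_output_at(xs, kernel, t)
    return out

def recurrent(xs, w_in, w_rec):
    """A minimal linear recurrence: step t cannot start before step t-1 is done."""
    h = 0.0
    out = []
    for x in xs:
        h = w_in * x + w_rec * h
        out.append(h)
    return out

xs = [1.0, 2.0, 3.0, 4.0]
forward = conv1d(xs, [0.5, 0.5])
backward = conv1d(xs, [0.5, 0.5], order=reversed(range(len(xs))))
rnn_out = recurrent(xs, w_in=1.0, w_rec=0.5)
```

Here `forward` and `backward` are identical even though the positions were visited in opposite orders, whereas `recurrent` has no such freedom because every state feeds into the next.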

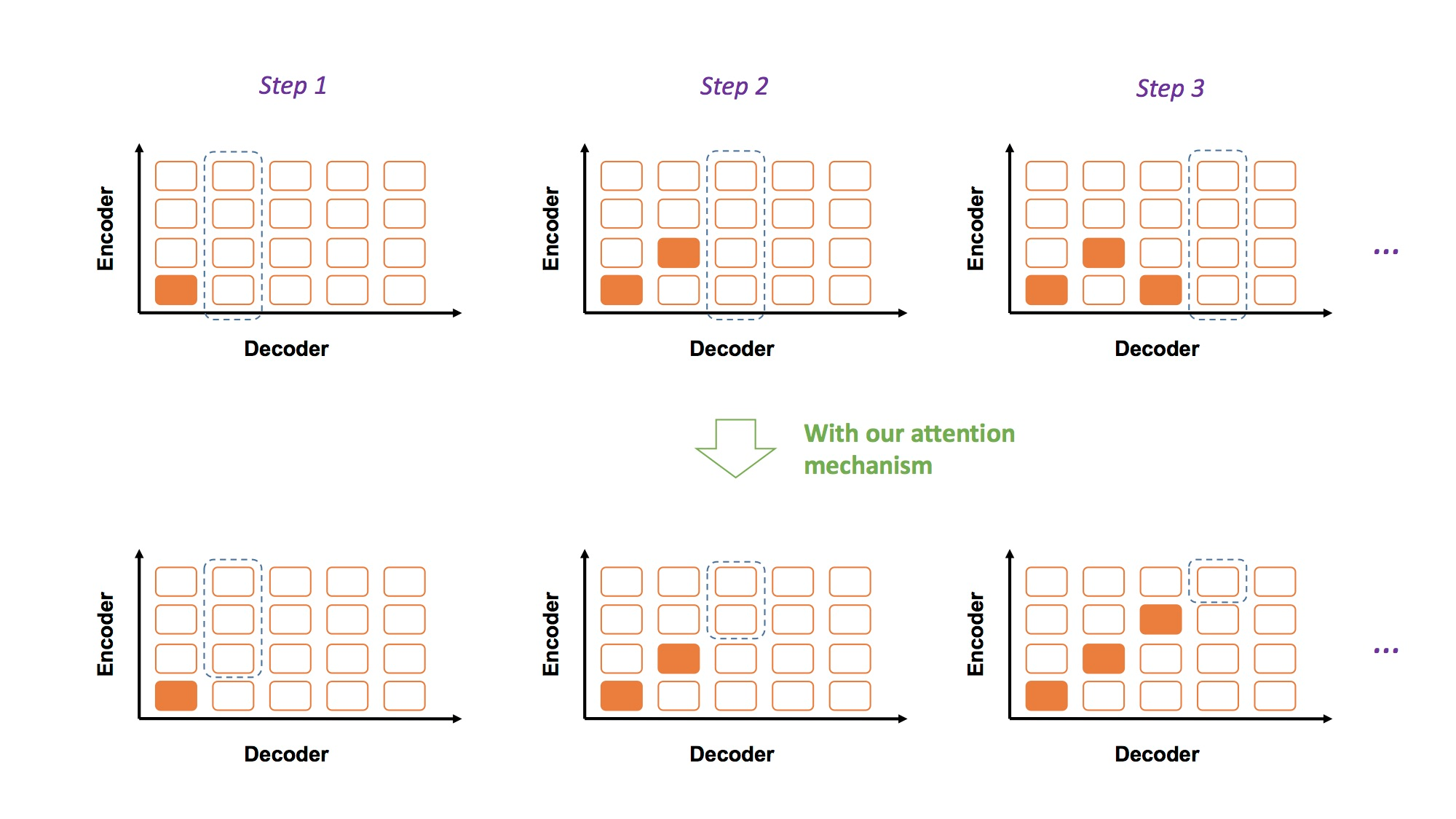

A common challenge for sequence-to-sequence models is learning the correct “attention” mechanism – the capability to closely keep track of the input data. Errors in the attention mechanism often result in repetition, skipping of some text or pronunciation mistakes. We address these issues with a novel attention mechanism that has the constraint to always look ahead to find the relevant information for output generation (see Fig. 2). We demonstrate that text-to-speech significantly benefits from a consistent and monotonic alignment.

Fig. 2 Attention mechanism to introduce monotonic alignment.
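One possible way to enforce a look-ahead constraint on attention is sketched below in plain Python. It assumes that, at each decoder step, attention weights are computed only over a small window that starts at the previously attended input position and extends forward. The window size of 3, the argmax position update, and all function names are assumptions made for illustration; the post does not specify these details.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def monotonic_attention_step(scores, last_pos, window=3):
    """Attend only within [last_pos, last_pos + window); everything else gets weight 0."""
    lo = last_pos
    hi = min(last_pos + window, len(scores))
    weights = [0.0] * len(scores)
    for offset, w in enumerate(softmax(scores[lo:hi])):
        weights[lo + offset] = w
    # Move the attended position to the strongest entry inside the window.
    new_pos = max(range(lo, hi), key=lambda i: weights[i])
    return weights, new_pos

# Hypothetical raw attention scores for four decoder steps over five input tokens.
# In the third step the unconstrained argmax is position 0, which would repeat text.
score_rows = [
    [2.0, 0.1, 0.0, 0.0, 0.0],
    [0.0, 2.0, 0.1, 0.0, 0.0],
    [3.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.5, 2.0, 0.0],
]

pos = 0
path = []
all_weights = []
for row in score_rows:
    weights, pos = monotonic_attention_step(row, pos)
    all_weights.append(weights)
    path.append(pos)
```

Because the window never extends behind the last attended position, the resulting `path` can only stay in place or move forward, which rules out the jump back to the start that the raw scores in the third step would otherwise cause.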

For more details about Deep Voice 3, please check out our paper.

Here are some randomly chosen samples from our system trained for 1 speaker (~20 hours of TTS data in total):

Here are some randomly chosen samples from our system trained for 108 speakers (~44 hours of TTS data in total):

Here are some randomly chosen samples from our system trained for 2484 speakers (~820 hours of ASR data in total):