2021-12-28


Director of Peng Cheng Laboratory Wen Gao (left) and Baidu CTO Haifeng Wang (right)

From the day we are born, we acquire and accumulate knowledge through learning and experience, and we use it to understand everything from our immediate surroundings to the universe. As our knowledge grows, so does our ability to comprehend and solve problems and to distinguish facts from absurdities.

AI systems lack such knowledge, which limits their ability to handle unfamiliar problems and data. For example, recently emerged large language models (or “LLMs”) are trained on massive text corpora and demonstrate reading comprehension and creative writing skills. However, they can also produce nonsensical sentences like “Your foot has two eyes.” As we increasingly rely on AI to solve real-world problems, the ability to acquire and act on knowledge is as critical for a computer as it is for humans.

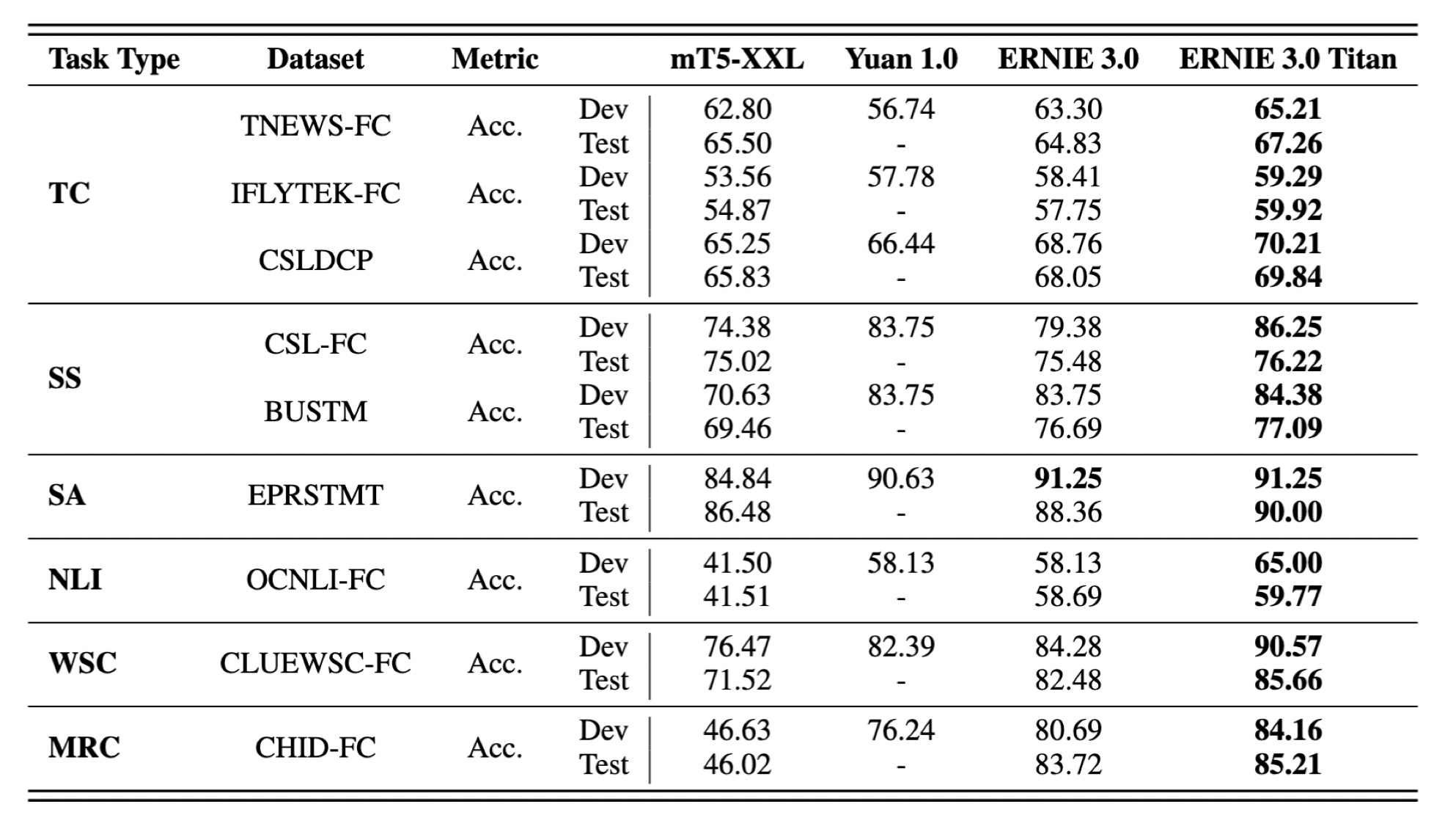

We are excited to introduce PCL-BAIDU Wenxin (or “ERNIE 3.0 Titan”), a pre-trained language model with 260 billion parameters. We trained the model on massive unstructured data and a gigantic knowledge graph, and it excels at both natural language understanding (NLU) and natural language generation (NLG). To our knowledge, ERNIE 3.0 Titan is the world’s first knowledge-enhanced model with hundreds of billions of parameters and the world’s largest Chinese singleton model. It uses a dense model structure, as distinct from a sparse Mixture of Experts (MoE) system.

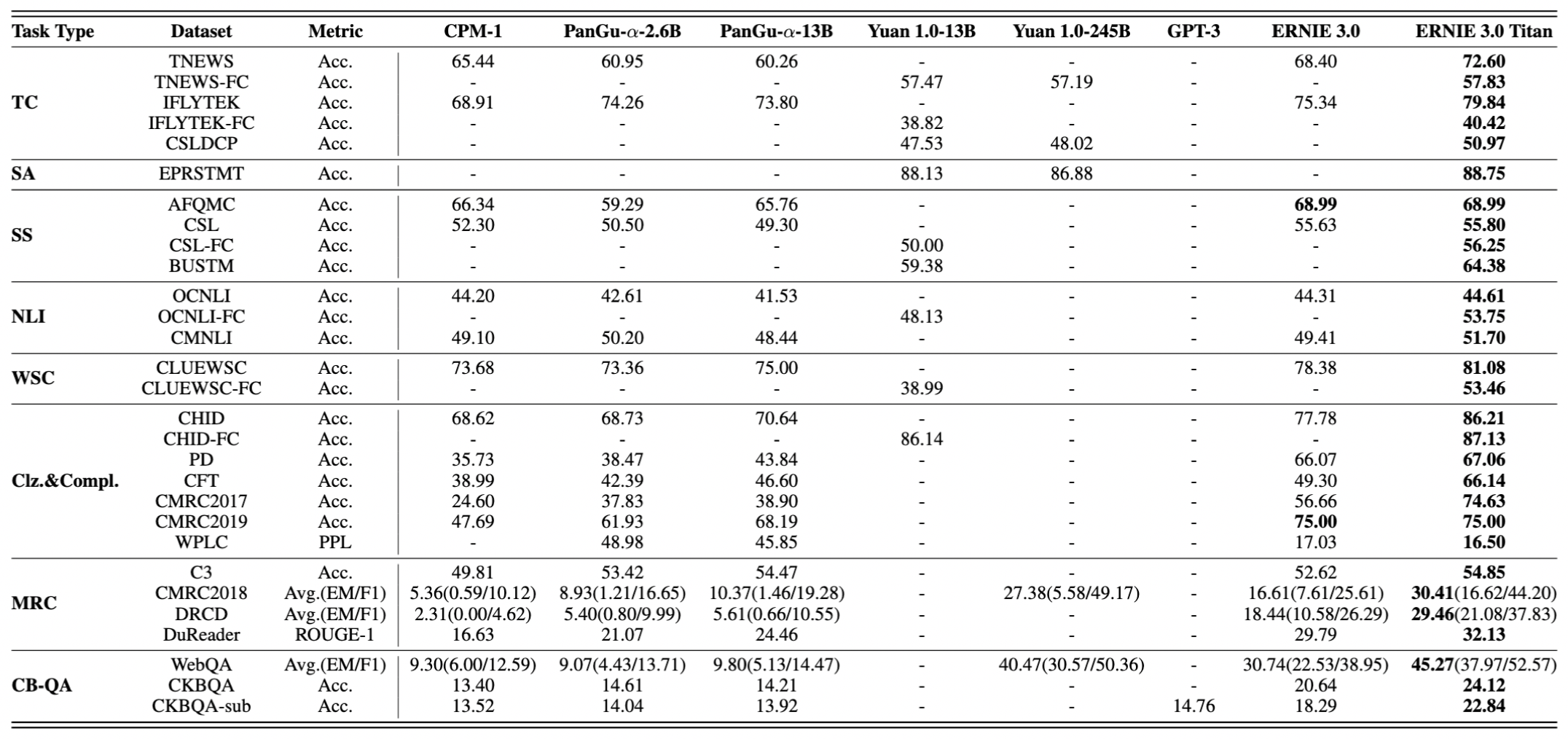

The model was developed through a collaboration between Baidu and Peng Cheng Laboratory (PCL), a Shenzhen-based scientific research institution. Experimental results show that ERNIE 3.0 Titan achieves state-of-the-art results on more than 60 natural language processing tasks, including machine reading comprehension, text classification, and semantic similarity. The model also excels on 30 few-shot and zero-shot benchmarks, indicating that it can generalize across various downstream tasks given a limited amount of labeled data, which further lowers the barrier to applying it.

ERNIE 3.0 Titan is the latest addition to Baidu’s ERNIE family, which was first launched in 2019. Today, ERNIE is widely deployed across finance, healthcare, insurance, equity, Internet, logistics, and other fields. More information is available in the accompanying paper.

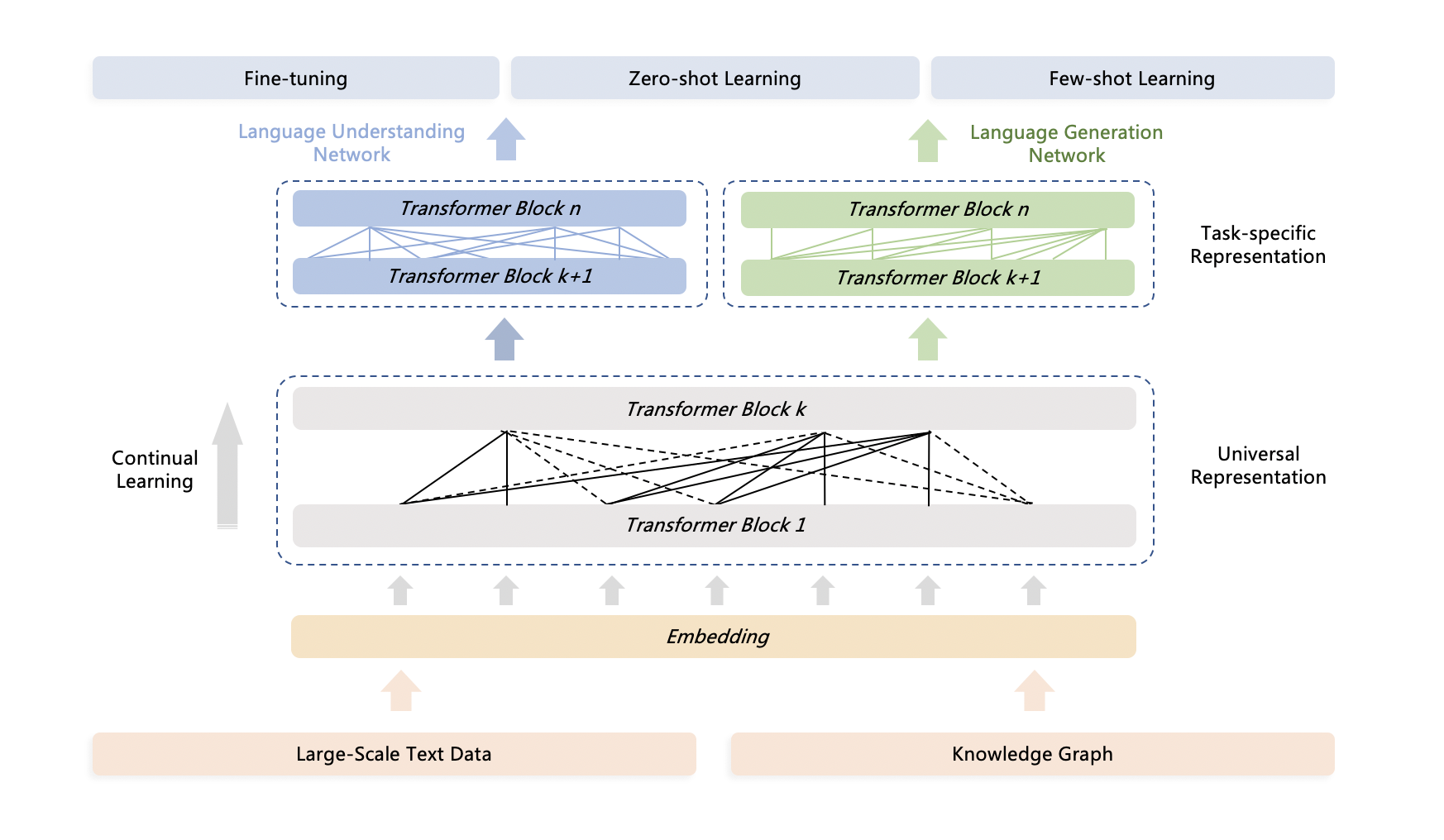

The framework of ERNIE 3.0 Titan

Controllable and credible learning algorithms

Self-supervised pre-training lets models scale up their parameter counts and tap into larger, unlabeled datasets. This increasingly popular technique has driven recent breakthroughs, especially in natural language processing (NLP). Compared with ERNIE 3.0, released in July 2021, ERNIE 3.0 Titan expands the number of model parameters from 10 billion to 260 billion.

We also proposed a controllable learning algorithm and a credible learning algorithm to ensure that the model generates reasonable and coherent text.

The controllable learning algorithm enables the model to compose text in a targeted, controllable manner, conditioned on a given genre, sentiment, length, topic, and set of keywords. The credible learning algorithm trains the model to distinguish fake, synthesized language from real-world human language through a self-supervised adversarial learning framework.
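The two ideas can be illustrated with a minimal Python sketch. It is not the training code behind ERNIE 3.0 Titan: the text-prefix format produced by `build_control_prefix` and the scalar-logit classifier assumed by `credibility_loss` are hypothetical stand-ins, chosen only to show what conditioning on attributes and scoring real versus synthesized text might look like.

```python
import math
from typing import Sequence


def build_control_prefix(genre: str, sentiment: str, length: int,
                         topic: str, keywords: Sequence[str]) -> str:
    """Serialize the control attributes into a text prefix.

    The bracketed key=value format is an assumption made for this sketch;
    the post does not describe how the attributes are encoded.
    """
    fields = [
        f"genre={genre}",
        f"sentiment={sentiment}",
        f"length={length}",
        f"topic={topic}",
        f"keywords={','.join(keywords)}",
    ]
    return "[" + "; ".join(fields) + "]"


def credibility_loss(logit: float, is_human_text: bool) -> float:
    """Binary cross-entropy for a real-vs-synthesized text classifier.

    `logit` is the (hypothetical) classifier's raw score that the text is
    human-written; the loss is small when the score agrees with the label.
    """
    target = 1.0 if is_human_text else 0.0
    # Numerically stable form of
    # -[t * log(sigmoid(z)) + (1 - t) * log(1 - sigmoid(z))].
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


prefix = build_control_prefix("poem", "positive", 50, "spring", ["rain", "flowers"])
confident_and_right = credibility_loss(2.0, is_human_text=True)
confident_and_wrong = credibility_loss(2.0, is_human_text=False)
```

In a setup like this, the prefix would be prepended to the generation prompt, and the classifier loss would penalize the model whenever it mistakes its own generated text for human-written text.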


Online distillation for environment-friendly AI models

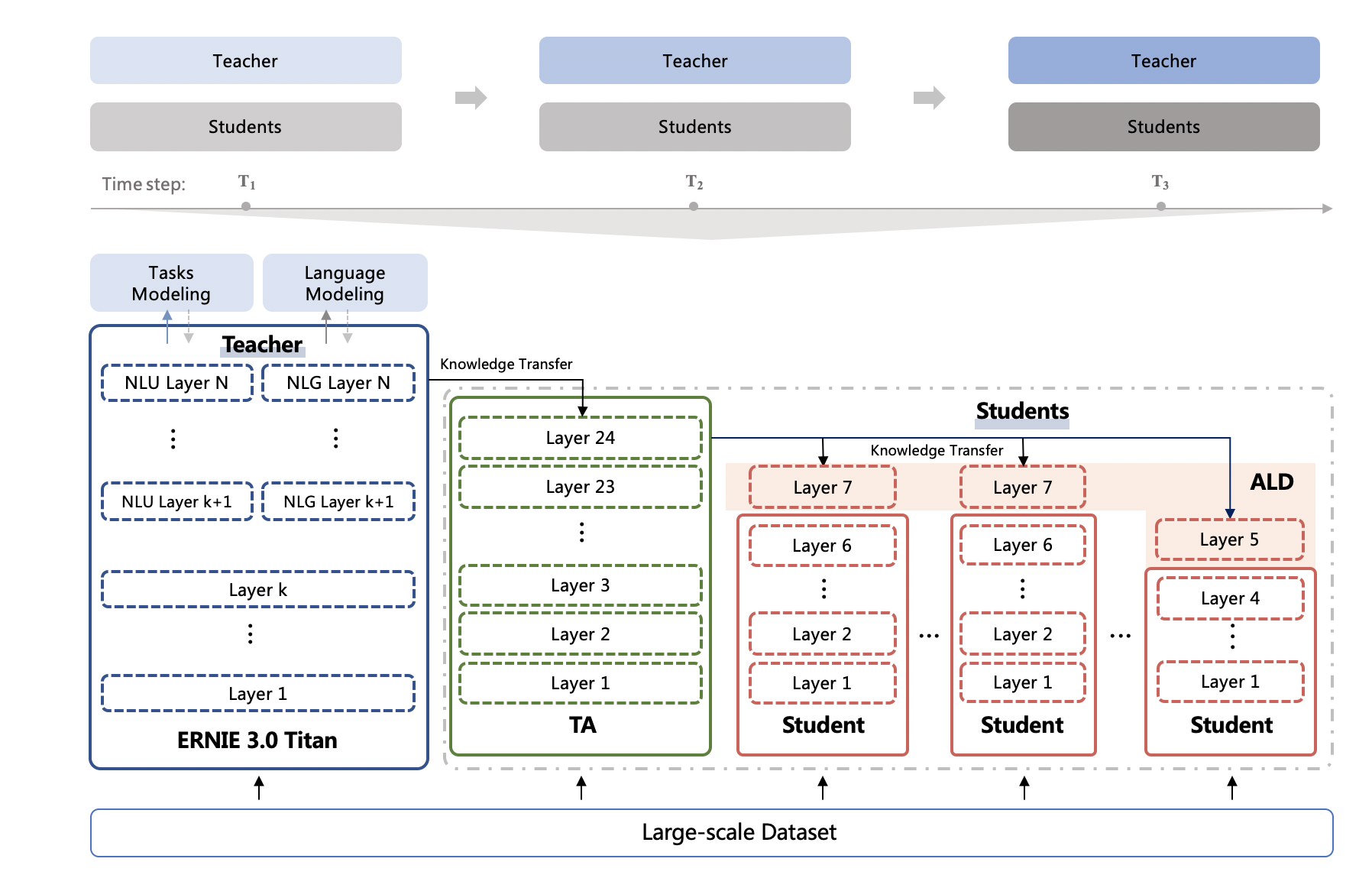

Training large-scale models and running inference with them consumes an enormous amount of resources. We therefore attach great importance to green, sustainable AI, and we adopted an online distillation technique to compress our model.

Specifically, we used a teacher-student compression (TSC) approach to create a less expensive student model that mimics the teacher model, i.e., ERNIE 3.0 Titan. This technique periodically transfers knowledge signals from the teacher model to multiple student models of different sizes simultaneously. Compared with traditional distillation, this approach significantly reduces the power consumed by the teacher model’s extra distillation computations and by repeating the knowledge transfer separately for each student model.
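The saving from sharing the teacher’s output can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the TSC implementation: the models are plain functions returning logits, the loss is a temperature-softened KL divergence (a common distillation loss, assumed here), and names such as `distill_step` and `toy_teacher` are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Model = Callable[[str], List[float]]  # maps an input text to class logits


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def distill_step(teacher: Model, students: Sequence[Model],
                 batch: Sequence[str], temperature: float = 2.0) -> List[float]:
    """Return the mean distillation loss of each student on a shared batch."""
    # The teacher runs once per example; every student reuses its soft targets.
    soft_targets = [softmax(teacher(x), temperature) for x in batch]
    losses = []
    for student in students:
        per_example = [
            kl_divergence(target, softmax(student(x), temperature))
            for x, target in zip(batch, soft_targets)
        ]
        losses.append(sum(per_example) / len(per_example))
    return losses


# Toy models: the teacher counts its own forward passes.
calls = {"teacher": 0}


def toy_teacher(x: str) -> List[float]:
    calls["teacher"] += 1
    return [float(len(x)), 1.0, 0.0]


def small_student(x: str) -> List[float]:
    return [0.5 * len(x), 1.0, 0.0]


def tiny_student(x: str) -> List[float]:
    return [0.0, 0.0, 0.0]


losses = distill_step(toy_teacher, [small_student, tiny_student], ["ab", "abcd"])
```

With two students and two examples, the teacher runs twice rather than four times, and the gap widens as more students are distilled from the same teacher outputs.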

We also found that ERNIE 3.0 Titan and the student model differ in size by more than a factor of a thousand, which makes model distillation extremely difficult or even ineffective. To address this, we incorporated a so-called teacher-assistant model to bridge the knowledge gap between a very deep teacher and a very shallow student. Compared with the BERT Base model, which has twice as many parameters as the student model, the student version of ERNIE 3.0 Titan improves accuracy on five tasks by 2.5%. Compared with a RoBERTa Base model of the same scale, accuracy improves by 3.4%.
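A rough calculation shows the scale of that gap. The sketch below assumes the commonly cited figure of about 110 million parameters for BERT Base, which this post does not state, and infers the student size from the BERT Base comparison above. The assistant size it prints is purely illustrative (the geometric mean of teacher and student); the post does not say how large the teacher-assistant model actually is.

```python
import math

TEACHER_PARAMS = 260e9     # ERNIE 3.0 Titan, as stated in the post
BERT_BASE_PARAMS = 110e6   # assumption: commonly cited size of BERT Base

# BERT Base is described as twice the size of the student model.
student_params = BERT_BASE_PARAMS / 2

# Teacher-to-student size ratio, to compare with "more than a thousand times".
ratio = TEACHER_PARAMS / student_params

# Hypothetical assistant at the geometric mean, which splits the gap
# into two equal multiplicative steps (teacher -> assistant -> student).
assistant_params = math.sqrt(TEACHER_PARAMS * student_params)
step = TEACHER_PARAMS / assistant_params

print(f"student ~ {student_params / 1e6:.0f}M parameters")
print(f"teacher/student ratio ~ {ratio:.0f}x")
print(f"illustrative assistant ~ {assistant_params / 1e9:.1f}B parameters, "
      f"each step ~ {step:.0f}x")
```

Under these assumptions the ratio comes out in the thousands, which is consistent with the claim in the text, and an intermediate model reduces each distillation hop to a much smaller jump.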

Online distillation for ERNIE 3.0 Titan

End-to-end adaptive distributed training on PaddlePaddle

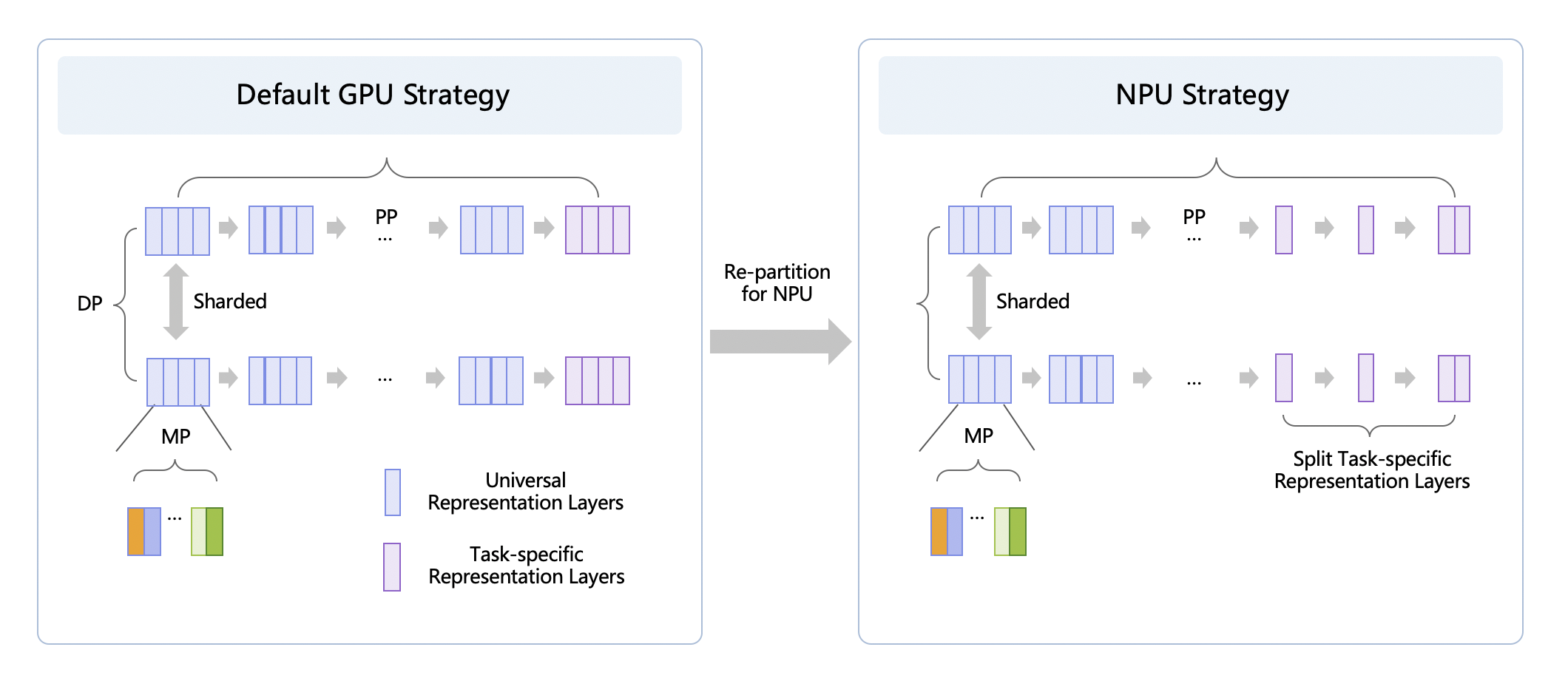

The number of parameters in LLMs has grown exponentially, because their performance improves steadily as the model is scaled up. However, training a model with over a hundred billion parameters, and running inference with it, places tremendous pressure on the underlying infrastructure.
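A back-of-the-envelope calculation makes the pressure concrete. The sketch below counts only the memory needed to hold the weights of a 260-billion-parameter model; the 32 GB per-device memory is a hypothetical figure chosen for illustration, and real training also needs room for gradients, optimizer states, and activations.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


PARAMS = 260e9                 # parameter count stated in the post
DEVICE_MEMORY_GB = 32          # hypothetical accelerator memory

fp16_gb = weight_memory_gb(PARAMS, 2)   # 2 bytes per 16-bit parameter
fp32_gb = weight_memory_gb(PARAMS, 4)   # 4 bytes per 32-bit parameter

# Lower bound on devices needed just to hold the 16-bit weights.
min_devices = math.ceil(fp16_gb / DEVICE_MEMORY_GB)

print(f"fp16 weights: {fp16_gb:.0f} GB, fp32 weights: {fp32_gb:.0f} GB")
print(f"at least {min_devices} devices of {DEVICE_MEMORY_GB} GB for fp16 weights")
```

Even this lower bound rules out a single device, which is why the model has to be partitioned across many processors.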

We designed an end-to-end adaptive distributed training framework on PaddlePaddle to meet these varied requirements. The framework is tailored to industrial applications and production environments: it jointly considers resource allocation, model partitioning, task placement, and distributed execution. Experiments showed that, using our framework, ERNIE 3.0 Titan with 260 billion parameters can be trained efficiently in parallel across thousands of AI processors. Furthermore, resource-aware allocation improved training performance by up to 2.1 times. More details are available in the framework’s documentation.
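One way to picture resource-aware allocation is a layer partitioner that gives faster devices more of the model. The sketch below is a simplified illustration, not PaddlePaddle’s API or the framework’s actual algorithm: `partition_layers`, `bottleneck_time`, and the relative device speeds are all hypothetical, and the cost model assumes every layer costs the same.

```python
from typing import List, Sequence


def partition_layers(num_layers: int, device_speeds: Sequence[float]) -> List[int]:
    """Split layers across devices in proportion to their relative speed."""
    if num_layers < 0 or not device_speeds or min(device_speeds) <= 0:
        raise ValueError("need a non-negative layer count and positive speeds")
    total_speed = sum(device_speeds)
    ideal = [num_layers * s / total_speed for s in device_speeds]
    counts = [int(share) for share in ideal]  # round each share down
    leftover = num_layers - sum(counts)
    # Give the remaining layers to the devices with the largest fractional share.
    by_remainder = sorted(range(len(ideal)),
                          key=lambda i: ideal[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


def bottleneck_time(counts: Sequence[int], device_speeds: Sequence[float]) -> float:
    """Time of the slowest pipeline stage, in units of one layer on a speed-1 device."""
    return max(c / s for c, s in zip(counts, device_speeds))


speeds = [1.0, 1.0, 2.0]             # third device is twice as fast
uniform = [16, 16, 16]               # resource-unaware split of 48 layers
aware = partition_layers(48, speeds)

uniform_time = bottleneck_time(uniform, speeds)
aware_time = bottleneck_time(aware, speeds)
```

In this toy setting the proportional split shortens the slowest stage, because the fastest device no longer sits idle waiting for the others.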


Peng Cheng Cloud Brain II, China’s first E-level (exascale) AI computing platform, provided the computing capacity for training ERNIE 3.0 Titan. Cloud Brain II ranked first on the IO500 ISC21 List and the 10 Node Challenge List. This May, it also ranked first in the natural language processing category of MLPerf Training v1.0.

Since the creation of ERNIE 1.0, ERNIE models have been widely applied in Baidu’s search, newsfeed, smart speakers, and other Internet products. Through Baidu AI Cloud, ERNIE also serves industries including manufacturing, energy, finance, telecommunications, media, and education.

For example, in the finance industry, ERNIE and Baidu ML, a full-featured AI development platform, have been used to develop an intelligent analysis model for financial contract terms. The model can classify the different clauses in a contract in just one minute, dozens of times faster than manual processing. Baidu AI Cloud’s intelligent customer service also uses ERNIE to improve the accuracy of its responses. It is currently used across China by enterprises including China Unicom and Shanghai Pudong Development Bank.

Previous research blogs:

ERNIE 2.0: Baidu’s Pre-training Model ERNIE Achieves New NLP Benchmark Record