2019-03-16

Baidu announced a new language representation model, ERNIE (Enhanced Representation through kNowledge IntEgration), and open-sourced its code and pre-trained model based on PaddlePaddle, Baidu's open deep learning platform (https://github.com/PaddlePaddle/LARK/tree/develop/ERNIE). The model achieved significant improvements on natural language processing (NLP) datasets for tasks such as natural language inference, semantic similarity, named entity recognition, sentiment analysis, and question-answer matching. The results show that ERNIE outperforms Google's BERT on all of these Chinese language understanding tasks.

In recent years, unsupervised pre-trained language models have made great progress on various NLP tasks. Early work in this field focused on context-independent word embeddings. Later models such as CoVe, ELMo, and GPT constructed sentence-level semantic representations. BERT achieved better results by predicting masked words and exploiting the bidirectional modeling capability of the Transformer's multi-layer self-attention.

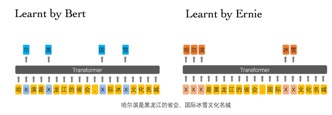

These models mainly learn from raw language signals rather than from the semantic units in the text, and the problem is especially pronounced in Chinese. Take BERT as an example: because it processes Chinese by predicting individual characters, it has difficulty learning complete semantic representations of larger semantic units. For terms such as 乒[mask]球 (a three-character term meaning table tennis, with the middle character masked) or [mask]颜六色 (a four-character idiom meaning colorful, with the first character masked), BERT can easily infer the masked character from character collocations alone, without drawing on the underlying concepts or knowledge.

We reasoned that if a model could learn the implicit knowledge in text, its performance on various tasks would improve further. We therefore trained ERNIE with knowledge-integration enhancement: it learns semantic representations of complete concepts by masking whole semantic units such as words and entities. By modeling prior semantic knowledge units directly, ERNIE strengthens its ability to learn semantic representations.

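Masked input for BERT: 哈[mask]滨是[mask]龙江的省会,[mask]际冰[mask]文化名城。

Masked input for ERNIE: [mask][mask][mask]是黑龙江的省会,国际[mask][mask]文化名城。

(Unmasked sentence: 哈尔滨是黑龙江的省会,国际冰雪文化名城。 "Harbin is the capital of Heilongjiang Province and an internationally known city of ice-and-snow culture.")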

For example, BERT can identify the character 尔 (er) from the locally co-occurring characters 哈 (ha) and 滨 (bin) in 哈尔滨 (Harbin), without any knowledge related to Harbin. ERNIE, by contrast, learns from the expressions of whole words and entities that Harbin is the capital of Heilongjiang Province in China and a city known for ice and snow.
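The difference between the two masking schemes can be sketched in a few lines of Python. This is a minimal illustration, not ERNIE's training code. The word and entity segmentation is written by hand, since how ERNIE obtains its units is not covered here. The masked positions are fixed to reproduce the Harbin example, whereas pre-training would select them at random. `mask_characters` and `mask_units` are hypothetical helper names.

```python
MASK = "[mask]"

def mask_characters(sentence, positions):
    """BERT-style masking: hide single characters at the given positions."""
    return "".join(MASK if i in positions else ch for i, ch in enumerate(sentence))

def mask_units(units, unit_indices):
    """ERNIE-style masking (sketch): hide every character of each selected
    semantic unit (word or entity), so the whole unit disappears at once."""
    return "".join(MASK * len(unit) if i in unit_indices else unit
                   for i, unit in enumerate(units))

sentence = "哈尔滨是黑龙江的省会,国际冰雪文化名城。"
# Hypothetical hand-made segmentation into words and entities.
units = ["哈尔滨", "是", "黑龙江", "的", "省会", ",", "国际", "冰雪", "文化", "名城", "。"]
assert "".join(units) == sentence

# Characters 尔, 黑, 国, 雪 are masked one by one.
print(mask_characters(sentence, {1, 4, 11, 14}))
# → 哈[mask]滨是[mask]龙江的省会,[mask]际冰[mask]文化名城。

# The units 哈尔滨 (Harbin) and 冰雪 (ice and snow) are masked as wholes.
print(mask_units(units, {0, 7}))
# → [mask][mask][mask]是黑龙江的省会,国际[mask][mask]文化名城。
```

With character masking, 尔 can be recovered from its neighbours 哈 and 滨. With unit masking, the model has to infer "Harbin" from the rest of the sentence.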

ERNIE remains character-based, which makes it more versatile and scalable. Compared with word-level modeling, character-level modeling can capture the compositional meaning of characters. For example, when modeling words such as 红色, 蓝色, and 绿色 (red, blue, and green, which share the final character 色, "color"), it can learn the semantic relationship between the words through the compositional meaning of their characters.

In addition, ERNIE's training data draws on multiple sources containing different kinds of knowledge. Besides encyclopedia articles, ERNIE is trained on news and forum dialogues. Dialogue data is valuable for semantic representation because queries that receive the same reply often have similar meanings.

Based on this hypothesis, ERNIE uses a DLM (Dialogue Language Model) task to model the query-response structure of dialogues. DLM takes adjacency pairs from dialogues as input and introduces a dialogue embedding to identify the role of each speaker. A Dialogue Response Loss is used to learn the implicit relationships in dialogues, which further enhances the model's ability to learn semantic representations.
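The following is a minimal sketch of how DLM training inputs might be assembled. It makes several assumptions:

- BERT-style `[CLS]`/`[SEP]` markers.
- Whitespace tokenization, for readability.
- Role ids 0 (query) and 1 (response), used as the indices into the dialogue embedding.

The post does not define the Dialogue Response Loss. Here it is assumed to be a real-versus-fake classification, with negatives built by swapping in a response from another pair. All function names are hypothetical.

```python
import random
from typing import List, Tuple

# Hypothetical role ids for the dialogue embedding.
QUERY_ROLE, RESPONSE_ROLE = 0, 1

def build_dlm_input(query: str, response: str) -> Tuple[List[str], List[int]]:
    """Pack one query-response adjacency pair into a single token sequence
    and tag every position with the role of the speaker it belongs to."""
    q_tokens, r_tokens = query.split(), response.split()  # illustration only
    tokens = ["[CLS]"] + q_tokens + ["[SEP]"] + r_tokens + ["[SEP]"]
    # [CLS], the query tokens and the first [SEP] belong to the query role.
    roles = [QUERY_ROLE] * (len(q_tokens) + 2) + [RESPONSE_ROLE] * (len(r_tokens) + 1)
    return tokens, roles

def make_response_examples(
    pairs: List[Tuple[str, str]], rng: random.Random
) -> List[Tuple[List[str], List[int], int]]:
    """For each real pair, emit a positive example (label 1) and a negative
    one (label 0) whose response is taken from a different pair.
    Assumes the pairs contain at least two distinct responses."""
    responses = [r for _, r in pairs]
    examples = []
    for query, response in pairs:
        examples.append((*build_dlm_input(query, response), 1))
        fake = rng.choice([r for r in responses if r != response])
        examples.append((*build_dlm_input(query, fake), 0))
    return examples

pairs = [
    ("what is the capital of heilongjiang", "harbin"),
    ("where can i see ice sculptures", "harbin holds an ice festival"),
    ("is it cold there in winter", "yes very cold"),
]
for tokens, roles, label in make_response_examples(pairs, random.Random(0)):
    print(label, " ".join(tokens))
```

In a full model, each role id would presumably index a learned dialogue embedding added to the token embedding, analogous to BERT's segment embeddings.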

To verify ERNIE's ability to learn knowledge, we tested it on a cloze-style reading comprehension task. Entity names were removed from paragraphs, and the model had to infer the missing entities.

| Text (entity removed) | Predicted by ERNIE | Predicted by BERT | Answer |
|---|---|---|---|
| 2006年9月,___与张柏芝结婚,两人婚后育有两儿子——大儿子Lucas谢振轩,小儿子Quintus谢振南;2012年5月,二人离婚。 (In September 2006, ___ married Cecilia Cheung. They had two sons: the elder, Lucas (Zhenxuan Xie), and the younger, Quintus (Zhennan Xie). They divorced in May 2012.) | 谢霆锋 (Tingfeng Xie) | 谢振轩 (Zhenxuan Xie, a name that appears in the context) | 谢霆锋 (Tingfeng Xie) |
| 戊戌变法,又称百日维新,是___、梁启超等维新派人士通过光绪帝进行的一场资产阶级改良。 (The Reform Movement of 1898, also known as the Hundred Days' Reform, was a bourgeois reform carried out through Emperor Guangxu by reformists such as ___ and Qichao Liang.) | 康有为 (Youwei Kang) | 孙世昌 (a person's name unrelated to this event) | 康有为 (Youwei Kang) |
| 澳大利亚是一个高度发达的资本主义国家,首都为___。作为南半球经济最发达的国家和全球第12大经济体、全球第四大农产品出口国,其也是多种矿产出口量全球第一的国家。 (Australia is a highly developed capitalist country whose capital is ___. As the most economically developed country in the Southern Hemisphere, the world's 12th-largest economy, and the world's fourth-largest exporter of agricultural products, it is also the world's largest exporter of several minerals.) | 胰岛素 (insulin) | 糖糖内 (not a word in Chinese) | 胰岛素 (insulin) |
| ___是中国神魔小说的经典之作,达到了古代长篇浪漫主义小说的巅峰,与《三国演义》《水浒传》《红楼梦》并称为中国古典四大名著。 (___ is a classic Chinese novel of gods and demons that marks the peak of ancient long-form romantic fiction. Together with Romance of the Three Kingdoms, Water Margin, and Dream of the Red Chamber, it is known as one of the Four Great Classical Novels of China.) | 西游记 (Journey to the West) | 《小》 (not a word in Chinese) | 西游记 (Journey to the West) |
| 相对论是关于时空和引力的理论,主要由___创立。 (Relativity is a theory of space-time and gravity, founded mainly by ___.) | 爱因斯坦 (Einstein) | 卡尔斯所 (not a word in Chinese) | 爱因斯坦 (Einstein) |
| 向日葵,因花序随___转动而得名。 (The sunflower is named for its flower head turning to follow ___.) | 太阳 (the Sun) | 日阳 (not a word in Chinese) | 太阳 (the Sun) |
| ___是太阳系八大行星中体积最大、自转最快的行星,从内向外的第五颗行星。它的质量为太阳的千分之一,是太阳系中其它七大行星质量总和的2.5倍。 (___ is the largest and fastest-rotating of the eight planets in the Solar System, and the fifth planet from the Sun. Its mass is one-thousandth that of the Sun and 2.5 times the combined mass of the other seven planets.) | 木星 (Jupiter) | 它星 (not a word in Chinese) | 木星 (Jupiter) |
| 地球表面积5.1亿平方公里,其中71%为___,29%为陆地,在太空上看地球呈蓝色。 (The Earth's surface area is 510 million square kilometers, of which 71% is ___ and 29% is land; seen from space, the Earth looks blue.) | 海洋 (oceans) | 海空 (the oceans and the sky) | 海洋 (oceans) |

The results above show that ERNIE performs better than BERT at context-based knowledge reasoning.
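The cloze setup behind the table can be sketched as follows. An entity is deleted from a paragraph, and a model's fill-in is compared with the removed entity. Exact string matching is an assumed scoring rule. The post reports individual examples rather than an aggregate score. The four fills below are copied from the relativity, sunflower, Jupiter, and Earth rows of the table, so the accuracies describe only those four rows. The function names are hypothetical.

```python
from typing import List

BLANK = "___"

def make_cloze(paragraph: str, entity: str) -> str:
    """Remove every occurrence of the entity, leaving a blank to be filled."""
    if entity not in paragraph:
        raise ValueError(f"{entity!r} does not occur in the paragraph")
    return paragraph.replace(entity, BLANK)

def exact_match_accuracy(predictions: List[str], answers: List[str]) -> float:
    """Fraction of blanks whose predicted fill equals the removed entity."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    return sum(p == a for p, a in zip(predictions, answers)) / len(answers)

print(make_cloze("相对论是关于时空和引力的理论,主要由爱因斯坦创立。", "爱因斯坦"))
# → 相对论是关于时空和引力的理论,主要由___创立。

answers = ["爱因斯坦", "太阳", "木星", "海洋"]
ernie = ["爱因斯坦", "太阳", "木星", "海洋"]
bert = ["卡尔斯所", "日阳", "它星", "海空"]
print(exact_match_accuracy(ernie, answers), exact_match_accuracy(bert, answers))
# → 1.0 0.0
```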

To further verify ERNIE's knowledge reasoning ability, we ran experiments on natural language inference. XNLI was built by researchers from Facebook and New York University to evaluate cross-lingual sentence understanding. It asks a model to judge the relationship between two sentences as contradiction, neutral, or entailment. We compared BERT and ERNIE on its Chinese data:

| Model | Dev accuracy (avg) | Dev std | Test accuracy (avg) | Test std |
|---|---|---|---|---|
| BERT | 78.1% | 0.0038 | 77.2% | 0.0026 |
| ERNIE | 79.9% (+1.8%) | 0.0041 | 78.4% (+1.2%) | 0.0040 |

The results show that ERNIE outperforms BERT on natural language inference.

We further evaluated ERNIE on several public Chinese datasets, where it also achieved better results than BERT.

1. Semantic Similarity Task LCQMC

LCQMC is a question-matching dataset built by Harbin Institute of Technology and published at COLING 2018, one of the top international conferences in natural language processing. The task is to determine whether two questions have the same meaning.

| Model | Dev accuracy (avg) | Dev std | Test accuracy (avg) | Test std |
|---|---|---|---|---|
| BERT | 88.8% | 0.0029 | 87.0% | 0.0060 |
| ERNIE | 89.7% (+0.9%) | 0.0021 | 87.4% (+0.4%) | 0.0019 |

2. Sentiment Analysis Task ChnSentiCorp

| Model | Dev accuracy (avg) | Dev std | Test accuracy (avg) | Test std |
|---|---|---|---|---|
| BERT | 94.6% | 0.0027 | 94.3% | 0.0058 |
| ERNIE | 95.2% (+0.6%) | 0.0012 | 95.4% (+1.1%) | 0.0044 |

3. Named Entity Recognition Task MSRA-NER

The MSRA-NER dataset, published by Microsoft Research Asia, was designed for a named entity recognition research competition.

| Model | Dev F1 (avg) | Dev variance | Test F1 (avg) | Test variance |
|---|---|---|---|---|
| BERT | 94.0% | 0.0024 | 92.6% | 0.0024 |
| ERNIE | 95.0% (+1.0%) | 0.0027 | 93.8% (+1.2%) | 0.0031 |
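NER F1 scores such as those above are usually computed over entity spans rather than individual characters. The sketch below makes three assumptions:

- BIO tags (`B-LOC`, `I-LOC`, `O`, ...).
- A predicted entity counts as correct only if both its type and its boundaries match the gold entity.
- A stray `I-` tag is leniently treated as starting a new entity.

The post does not state which evaluation script produced its numbers. The tag sequences are made up for illustration, and the function names are hypothetical.

```python
from typing import List, Set, Tuple

Span = Tuple[str, int, int]  # (entity type, start index, end index exclusive)

def bio_to_spans(tags: List[str]) -> Set[Span]:
    """Collect typed entity spans from a BIO tag sequence."""
    spans: Set[Span] = set()
    start, etype = None, None
    for i, tag in enumerate(tags + ["O"]):  # sentinel "O" closes a trailing span
        if tag.startswith("I-") and etype == tag[2:]:
            continue  # the open entity continues
        if etype is not None:  # any other tag closes the open entity
            spans.add((etype, start, i))
            start, etype = None, None
        if tag.startswith(("B-", "I-")):  # open a new entity
            start, etype = i, tag[2:]
    return spans

def span_f1(gold: List[List[str]], pred: List[List[str]]) -> float:
    """Micro-averaged entity-level F1 over a list of tagged sentences."""
    tp = n_gold = n_pred = 0
    for g_tags, p_tags in zip(gold, pred):
        g, p = bio_to_spans(g_tags), bio_to_spans(p_tags)
        tp += len(g & p)
        n_gold += len(g)
        n_pred += len(p)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

sentence = "哈尔滨是黑龙江的省会"
gold_tags = ["B-LOC", "I-LOC", "I-LOC", "O", "B-LOC", "I-LOC", "I-LOC", "O", "O", "O"]
# Hypothetical prediction that cuts 哈尔滨 short to 哈尔.
pred_tags = ["B-LOC", "I-LOC", "O", "O", "B-LOC", "I-LOC", "I-LOC", "O", "O", "O"]
assert len(sentence) == len(gold_tags) == len(pred_tags)

print(bio_to_spans(gold_tags) == {("LOC", 0, 3), ("LOC", 4, 7)})  # → True
print(span_f1([gold_tags], [pred_tags]))  # → 0.5
```

The truncated entity gets no partial credit, so precision and recall are both 1/2.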

4. Retrieval-Based Question Answering Task NLPCC-DBQA

NLPCC-DBQA is a task released in 2016 by NLPCC (the International Conference on Natural Language Processing and Chinese Computing). The goal is to select the sentences that correctly answer each question.

| Model | Dev MRR (avg) | Dev std | Test MRR (avg) | Test std |
|---|---|---|---|---|
| BERT | 94.7% | 0.0022 | 94.6% | 0.0014 |
| ERNIE | 95.0% (+0.3%) | 0.0011 | 95.1% (+0.5%) | 0.0011 |

| Model | Dev F1 (avg) | Dev std | Test F1 (avg) | Test std |
|---|---|---|---|---|
| BERT | 80.7% | 0.0158 | 80.8% | 0.0158 |
| ERNIE | 82.3% (+1.6%) | 0.0103 | 82.7% (+1.9%) | 0.0136 |
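MRR (mean reciprocal rank), reported for NLPCC-DBQA, is the average over all questions of 1/rank, where rank is the position of the first candidate sentence that correctly answers the question. The following is a minimal sketch, assuming each candidate has a binary gold label and a model score. Questions with no correct candidate are scored 0 here, which may differ from the official NLPCC evaluation tool. The scores are invented for illustration, and the function names are hypothetical.

```python
from typing import List

def rank_by_score(scores: List[float], labels: List[int]) -> List[int]:
    """Reorder gold labels by descending model score (ties keep input order)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [labels[i] for i in order]

def mean_reciprocal_rank(ranked_labels: List[List[int]]) -> float:
    """Average of 1/rank of the first correct candidate per question;
    a question with no correct candidate contributes 0."""
    total = 0.0
    for labels in ranked_labels:
        for rank, label in enumerate(labels, start=1):
            if label == 1:
                total += 1.0 / rank
                break
    return total / len(ranked_labels)

questions = [
    ([0.9, 0.2, 0.4], [1, 0, 0]),  # correct sentence ranked 1st: 1/1
    ([0.1, 0.8, 0.3], [0, 0, 1]),  # correct sentence ranked 2nd: 1/2
]
ranked = [rank_by_score(s, l) for s, l in questions]
print(mean_reciprocal_rank(ranked))  # → 0.75
```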

The ERNIE team said this breakthrough technology will be applied to a variety of products and scenarios to enhance user experience. Next, Baidu will continue researching how to integrate knowledge into pre-trained semantic representation models, for example by using syntactic parsing or weakly supervised signals from other tasks, and by validating the idea in other languages.

The Baidu NLP group aims to "understand language, acquire intelligence, and change the world." The team will continue to develop cutting-edge NLP technologies, create leading technology platforms, and build innovative products to serve users.